Machine Learning - CMU

PhD Program in Machine Learning

Carnegie Mellon University's doctoral program in Machine Learning is designed to train students to become tomorrow's leaders through a combination of interdisciplinary coursework, hands-on applications, and cutting-edge research. Graduates of the Ph.D. program in Machine Learning will be uniquely positioned to pioneer new developments in the field, and to be leaders in both industry and academia.

Understanding the most effective ways of using the vast amounts of data that are now being stored is a significant challenge to society, and therefore to science and technology, as it seeks to obtain a return on the huge investment that is being made in computerization and data collection. Advances in the development of automated techniques for data analysis and decision making require interdisciplinary work in areas such as machine learning algorithms and foundations, statistics, complexity theory, optimization, and data mining.

The Ph.D. Program in Machine Learning is for students who are interested in research in Machine Learning. For questions and concerns, please contact us.

The PhD program is a full-time, in-person commitment and is not offered online or part-time.

PhD Requirements

Requirements for the PhD in Machine Learning

- Completion of required courses (6 core courses + 1 elective)

- Mastery of proficiencies in Teaching and Presentation skills.

- Successful defense of a Ph.D. thesis.

Teaching

Ph.D. students are required to serve as Teaching Assistants for two semesters in Machine Learning courses (10-xxx), beginning in their second year. This fulfills their Teaching Skills requirement.

Conference Presentation Skills

During their second or third year, Ph.D. students must give a talk at least 30 minutes long, and invite members of the Speaking Skills committee to attend and evaluate it.

Research

It is expected that all Ph.D. students engage in active research from their first semester. Moreover, advisor selection occurs in the first month of entering the Ph.D. program, with the option to change at a later time. Roughly half of a student's time should be allocated to research and lab work, and half to courses until these are completed.

Master of Science in Machine Learning Research - along the way to your PhD Degree.

Other Requirements

In addition, students must follow all university policies and procedures.

Rules for the MLD PhD Thesis Committee (applicable to all ML PhDs): The committee should be assembled by the student and their advisor, and approved by the PhD Program Director(s). It must include:

- At least one MLD Core Faculty member

- At least one additional MLD Core or Affiliated Faculty member

- At least one External Member, usually meaning external to CMU

- A total of at least four members, including the advisor who is the committee chair
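The committee rules above can be expressed as a small checklist. The sketch below is illustrative only: the `Member` class, the role labels, and the function name are hypothetical, it assumes the advisor may count toward the faculty minimums, and it does not model approval by the PhD Program Director(s).

```python
from dataclasses import dataclass


@dataclass
class Member:
    name: str
    role: str  # hypothetical labels: "core", "affiliated", or "external"
    is_advisor: bool = False


def committee_problems(members):
    """Return the list of unmet rules; an empty list means all rules are met."""
    problems = []
    core = sum(m.role == "core" for m in members)
    mld = sum(m.role in ("core", "affiliated") for m in members)
    external = sum(m.role == "external" for m in members)

    if core < 1:
        problems.append("needs at least one MLD Core Faculty member")
    if mld < 2:
        # One core member plus one more core or affiliated member.
        problems.append("needs an additional MLD Core or Affiliated Faculty member")
    if external < 1:
        problems.append("needs at least one External Member")
    if len(members) < 4:
        problems.append("needs at least four members in total")
    if not any(m.is_advisor for m in members):
        problems.append("needs the advisor as committee chair")
    return problems


example = [
    Member("Advisor", "core", is_advisor=True),
    Member("Second reader", "affiliated"),
    Member("Outside expert", "external"),
    Member("Fourth member", "core"),
]
print(committee_problems(example))
```

Returning a list of unmet rules, rather than a single true/false value, makes it easier to see which requirement a draft committee still misses.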

Financial Support

Application Information

For applicants applying in Fall 2024 for a start date of August 2025 in the Machine Learning PhD program, GRE Scores are OPTIONAL. The committee uses GRE scores to gauge quantitative skills, and to a lesser extent, also verbal skills.

Proof of English Language Proficiency

If you will be studying on an F-1 or J-1 visa and English is not your native language (that is, the language spoken at home from birth), we are required to formally evaluate your English proficiency. In that case, you must demonstrate English proficiency via one of these standardized tests: TOEFL (preferred), IELTS, or Duolingo. We discourage the use of the "TOEFL ITP Plus for China," since speaking is not scored. We do not issue waivers for non-native speakers of English. In particular, we do not issue waivers based on previous study at a U.S. high school, college, or university. We also do not issue waivers based on previous study at an English-language high school, college, or university outside of the United States. No amount of educational experience in English, regardless of which country it occurred in, will result in a test waiver.

Submit valid, recent scores: If you are required to submit proof of English proficiency as described above, your TOEFL, IELTS, or Duolingo test scores will be considered valid as follows. If you have not received a bachelor’s degree in the U.S., you will need to submit an English proficiency score no older than two years. (Scores from exams taken before Sept. 1, 2023, will not be accepted.) If you are currently working on or have received a bachelor's and/or a master's degree in the U.S., you may submit an expired test score up to five years old. (Scores from exams taken before Sept. 1, 2019, will not be accepted.)
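The two validity windows reduce to a date comparison. The following is a minimal sketch that uses the cutoff dates quoted above for this application cycle; the function and constant names are hypothetical, and the check says nothing about minimum scores.

```python
from datetime import date

# Cutoff dates quoted in the text for this application cycle.
CUTOFF_NO_US_DEGREE = date(2023, 9, 1)  # two-year window
CUTOFF_US_DEGREE = date(2019, 9, 1)     # five-year window


def score_is_accepted(test_date, has_us_degree):
    """Return True if a test taken on test_date falls inside the window.

    has_us_degree: True if the applicant is working on, or has received,
    a bachelor's and/or master's degree in the U.S.
    """
    cutoff = CUTOFF_US_DEGREE if has_us_degree else CUTOFF_NO_US_DEGREE
    # Exams taken before the cutoff are rejected; the cutoff day itself passes.
    return test_date >= cutoff


print(score_is_accepted(date(2023, 8, 31), has_us_degree=False))
print(score_is_accepted(date(2020, 1, 15), has_us_degree=True))
```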

Graduate Online Application

- Admissions application opens September 4, 2024

- Early Application Deadline – November 20, 2024 (3:00 p.m. EST)

- Final Application Deadline - December 11, 2024 (3:00 p.m. EST)

Artificial Intelligence


MastersInAI.org

PhD in Artificial Intelligence Programs


Universities offer a variety of Doctor of Philosophy (Ph.D.) programs related to Artificial Intelligence (AI). Some of these are titled as Ph.D.s in AI, whereas most are Ph.D.s in Computer Science or related engineering disciplines with a specialization or focus in AI. Admissions requirements usually include a related bachelor’s degree and, sometimes, a master’s degree. Moreover, most Ph.D. programs expect academic excellence and strong recommendations. The AI Ph.D. programs take three to five or more years, depending on whether you have a master’s and on the complexity of your dissertation. People with Ph.D.s in AI usually go on to tenure-track professorships, postdoctoral research positions, or high-level software engineering positions.

What Are Artificial Intelligence Ph.D. Programs?

Ph.D. programs in AI focus on mastering advanced theoretical subjects, such as decision theory, algorithms, optimization, and stochastic processes. Artificial intelligence covers anything where a computer behaves, rationalizes, or learns like a human. Ph.D.s are usually the endpoint to a long educational career. By the time scholars earn Ph.D.s, they have probably been in school for well over 20 years.

People with an AI Ph.D. degree are capable of formulating and executing novel research into the subtopics of AI. Some of the subtopics include:

- Environment adaptation in self-driving vehicles

- Natural language processing in robotics

- Cheating detection in higher education

- Diagnosing and treating diseases in healthcare

AI Ph.D. programs require candidates to focus most of their coursework and research on AI topics. Most culminate in a dissertation of published research. Many AI Ph.D. recipients’ dissertations are published in peer-reviewed journals or presented at industry-leading conferences. They go on to lead careers as experts in AI technology.

Types of Artificial Intelligence Ph.D. Programs

Most AI Ph.D. programs are a Ph.D. in Computer Science with a concentration in AI. These degrees involve general, advanced-level computer science courses for the first year or two and then specialize in AI courses and research for the remainder of the curriculum.

AI Ph.D.s offered in other colleges like Computer Engineering, Systems Engineering, Mechanical Engineering, or Electrical Engineering are similar to Ph.D.s in Computer Science. They often involve similar coursework and research. For instance, colleges like Indiana University Bloomington’s Computing and Engineering have departments specializing in AI or Intelligent Engineering. Some colleges, however, may focus more on a specific discipline. For example, a Ph.D. in Mechanical Engineering with an AI focus is more likely to involve electric vehicles than targeted online advertising.

Some AI programs fall under a Computational Linguistics specialization, like CUNY. These programs emphasize the natural language processing aspect of AI. Computational Linguistics programs still involve significant computer science and engineering but also require advanced knowledge in language and speech.

Other unique programs offer a joint Ph.D. with non-engineering disciplines, such as Carnegie Mellon’s Joint Ph.D. in Machine Learning and Public Policy, Statistics, or Neural Computation.

How Ph.D. in Artificial Intelligence Programs Work

Ph.D. programs usually take three to six years to complete. For example, Harvard lays out a three+ year track where the last year(s) is spent completing your research and defending your dissertation. Many Ph.D. programs have a residency requirement where you must take classes on campus for one to three years. Moreover, most universities, such as Brandeis, require Ph.D. students to grade and/or teach for one to four semesters. Despite these requirements, several Ph.D. programs, like Drexel, allow for part-time or full-time students.

Admissions Requirements

Ph.D. programs in AI admit the strongest students. Most applications require a resume, transcripts, letters of recommendation, and a statement of interest. Many programs require a minimum undergraduate GPA of 3.0 or higher, although some allow for statements of explanation if you have a lower GPA due to illness or other excusable causes.

Many universities, like Cornell, recently made the GRE either optional or not required because the GRE is a weak predictor of research success and represents a COVID-19 risk. These programs may require the GRE again in the future. However, many schools still require the IELTS/TOEFL for international applicants.

Curriculum and Coursework

At many universities, the curriculum for AI Ph.D.s varies based on the applicant’s prior education. Some programs allow applicants to receive credit for relevant master’s programs completed prior to admission. These programs require about 30 hours of advanced research and classes. Other programs do not give credit for master’s programs completed elsewhere. These require over 60 hours of electives, on top of the 30 hours of fundamental and core classes and the advanced courses.

For programs with more specific specialties, the courses are usually narrowly focused. For example, Duke’s Robotics track requires ten classes, at least three of which are focused on AI as it relates to robotics. Others allow for non-AI-specific courses such as computer networks.

Many Ph.D. programs have strict GPA requirements to remain in the program. For example, Northeastern requires PhD candidates to maintain at least a 3.5 GPA. Other programs automatically dismiss students with too many Cs in courses.

Common specializations include:

- Computational Linguistics

- Automotive Systems

- Data Science

Artificial Intelligence Dissertations

Most Ph.D. programs require a dissertation. The dissertation takes at least two years to research and write, usually starting in the second or third year of the Ph.D. curriculum. Moreover, many programs require an oral presentation or defense of the dissertation. Some universities give an award for the best dissertation of the year. For example, Boston University gave a best dissertation award to Hao Chen for the dissertation entitled “Improving Data Center Efficiency Through Smart Grid Integration and Intelligent Analytics.”

A couple of programs require publications, like Capitol Technology, or additional course electives, like LIU. For example, The Ohio State University requires 27 hours of graded coursework and three hours with an advisor for non-thesis path candidates. Thesis-path candidates only have to take 18 hours of graded coursework but must spend 12 hours with their advisors.

Are There Online Ph.D. in Artificial Intelligence Programs?

Officially, the majority of AI Ph.D. programs are in-person. Only one university, Capitol Technology University, allows for a fully online program. This is one of the most expensive Ph.D.s in the field, costing about $60,000. However, it is also one of the most flexible programs. It allows you to complete your coursework on your own schedule, perhaps even while working. Moreover, it allows for either a dissertation path or a publication path. The coursework is fully focused on AI research and writing, thus eliminating requirements for more general courses like algorithms or networks.

One detail you should consider is that the Capitol Technology Ph.D. program is heavily driven by a faculty mentor. You will need consistent contact and open communication with this person. The website only lists the director, so there is a significant element of uncertainty about how the program will work for you. But doctoral candidates who are self-driven and have a solid idea of their research path have a higher likelihood of succeeding.

If you need flexibility in your Ph.D. program, you may find some professors at traditional universities will work with you on how you meet and conduct the research, or you may find an alternative degree program that is online. Although a Ph.D. program may not be officially online, you may be able to spend just a semester or two on campus and then perform the rest of the Ph.D. requirements remotely. This is most likely possible if the university has an online master’s program where you can take classes. For example, the Georgia Institute of Technology does not have a residency requirement, has an online master’s of computer science program, and some professors will work flexibly with doctoral candidates with whom they have a close relationship.

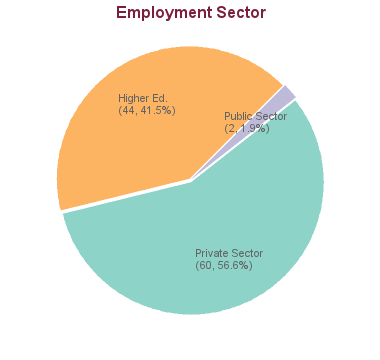

What Jobs Can You Get with a Ph.D. in Artificial Intelligence?

Many Ph.D. graduates work as tenure track professors at universities with AI classes. Others work as postdoc research scientists at universities. Both of these roles are expected to conduct research and publish, but professors have more of an expectation to teach, as well. Universities usually have a small number of these positions available. Moreover, postdoc research positions tend to only last for a limited amount of time.

Other engineers with AI-focused Ph.D.s conduct research and do software development in the private sector at AI-intensive companies. For example, Google uses AI in many departments. Its assistant uses natural language processing to interface with users through voice. Moreover, Google uses AI to generate news feeds for users. Google, and other industry leaders, have a strong preference for engineers with Ph.D.s. This career path is often highly sought by new Ph.D. recipients.

Another private sector industry shifting to AI is vehicle manufacturing. For example, self-driving cars use significant AI to make ethical and legal decisions while operating. Another example is that electric vehicles use AI techniques to optimize performance and power usage.

Some AI Ph.D. recipients become C-suite executives, such as Chief Technology Officers (CTO). For example, Dr. Ted Gaubert has a Ph.D. in engineering and works as a CTO for an AI-intensive company. Another CTO, Dr. David Talby, revolutionized AI with a new natural language processing library, Spark NLP. CTOs at AI-focused companies often have decades of experience in the AI field.

How Much Do Ph.D. in Artificial Intelligence Programs Cost?

The tuition for many Ph.D. programs is paid through fellowships, graduate research assistantships, and teaching assistantships. For example, Harvard provides full support for Ph.D. candidates. Some programs mandate teaching or research to attend based on the assumption that Ph.D. candidates need financial assistance.

Fellowships are often reserved for applicants with an exceptional academic and research background. These are usually named for eminent alumni, professors, or other scholars associated with the university. Receiving such a fellowship is a highly respected honor.

For programs that do not provide full assistance, the usual cost is about $500 to $1,000 per credit hour, plus university fees. On the low end, Northern Illinois University charges about $557 per credit hour. With 30 to 60 hours required, this means the programs cost about $15,000 to over $60,000 out of pocket. Typically, Ph.D. programs that do not provide funding for any Ph.D. candidates are less reputable or provide other benefits, such as flexibility, online programs, or fewer requirements.
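The tuition range follows from multiplying the per-credit rate by the number of credit hours. Here is a minimal sketch of that arithmetic using the figures quoted in this section; the function name is hypothetical, and university fees are left out because the text gives no figure for them.

```python
def tuition_estimate(rate_per_credit, credit_hours):
    """Tuition only, in dollars; university fees are not included."""
    return rate_per_credit * credit_hours


# Cheapest combination: lowest rate, fewest hours.
low = tuition_estimate(500, 30)
# Most expensive combination: highest rate, most hours.
high = tuition_estimate(1000, 60)
# Northern Illinois University's quoted rate, at 30 and at 60 hours.
niu_range = (tuition_estimate(557, 30), tuition_estimate(557, 60))

print(f"General range: ${low:,} to ${high:,}")
print(f"At $557 per credit hour: ${niu_range[0]:,} to ${niu_range[1]:,}")
```

Fees vary by university, so actual out-of-pocket costs will be somewhat higher than these tuition-only figures.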

How Much Does a Ph.D. in AI Make?

Engineers with AI Ph.D.s earn well into the six-figure range in the private sector. For example, OpenAI, a non-profit, pays its top researchers over $400,000 per year. Amazon pays its data scientists with Ph.D.s over $200,000 in salary. Directors and executives with Ph.D.s often earn over $1,000,000 in private industry.

When considering work in private industry, professionals usually compare offers based on total compensation, not just salary. Many companies offer large stock and bonus packages to Ph.D.-level engineers and scientists.

Startups sometimes pay less in salary, but much more in stock options. For example, the salary may be $50,000 to $100,000, but when the startup goes public, you may end up with hundreds of thousands in stock options. This creates a sense of ownership and investment in the success of the startup.

Computer science professors and postdoctoral researchers earn about $90,000 to $160,000 from universities. However, they increase their compensation by writing books, speaking at conferences, and advising companies. Startups often employ professors for advice on the feasibility and design of their technology.

Schools with PhD in Artificial Intelligence Programs

Arizona State University

School of Computing and Augmented Intelligence

Tempe, Arizona

Ph.D. in Computer Science (Artificial Intelligence Research)

Ph.D. in Computing and Information Sciences (Artificial Intelligence Research)

University of California-Riverside

Department of Electrical and Computer Engineering

Riverside, California

Ph.D. in Electrical Engineering - Intelligent Systems Research Area

University of California-San Diego

Electrical and Computer Engineering Department

La Jolla, California

Ph.D. in Intelligent Systems, Robotics and Control

Colorado State University-Fort Collins

The Graduate School

Fort Collins, Colorado

Ph.D. in Computer Science - Artificial Intelligence Research Area

University of Colorado Boulder

Paul M. Rady Mechanical Engineering

Boulder, Colorado

PhD in Robotics and Systems Design

District of Columbia

Georgetown University

Department of Linguistics

Washington, District of Columbia

Doctor of Philosophy (Ph.D.) in Linguistics - Computational Linguistics

The University of West Florida

Department of Intelligent Systems and Robotics

Pensacola, Florida

Ph.D. in Intelligent Systems and Robotics

University of Central Florida

Department of Electrical & Computer Engineering

Orlando, Florida

Doctorate in Computer Engineering - Intelligent Systems and Machine Learning

Georgia Institute of Technology

Colleges of Computing, Engineering, and Sciences

Atlanta, Georgia

Ph.D. in Machine Learning

Northern Illinois University

DeKalb, Illinois

Ph.D. in Computer Science - Artificial Intelligence Area of Emphasis

Ph.D. in Computer Science - Machine Learning Area of Emphasis

Northwestern University

McCormick School of Engineering

Evanston, Illinois

PhD in Computer Science - Artificial Intelligence and Machine Learning Research Group

Indiana University Bloomington

Department of Intelligent Systems Engineering

Bloomington, Indiana

Ph.D. in Intelligent Systems Engineering

Ph.D. in Linguistics - Computational Linguistics Concentration

Capitol Technology University

Doctoral Programs Department

Laurel, Maryland

Doctor of Philosophy (PhD) in Artificial Intelligence

Offered Online

Johns Hopkins University

Whiting School of Engineering

Baltimore, Maryland

Doctor of Philosophy in Mechanical Engineering - Robotics

Massachusetts

Boston University

College of Engineering

Boston, Massachusetts

PhD in Computer Engineering - Data Science and Intelligent Systems Research Area

PhD in Systems Engineering - Automation, Robotics, and Control

Brandeis University

Department of Computer Science

Waltham, Massachusetts

Ph.D. in Computer Science - Computational Linguistics

Harvard University

School of Engineering and Applied Sciences

Cambridge, Massachusetts

Ph.D. in Applied Mathematics

Northeastern University

Khoury College of Computer Science

Ph.D. in Computer Science - Artificial Intelligence Area

University of Michigan-Ann Arbor

Electrical Engineering and Computer Science Department

Ann Arbor, Michigan

PhD in Electrical and Computer Engineering - Robotics

University of Nebraska at Omaha

College of Information Science & Technology

Omaha, Nebraska

PhD in Information Technology - Artificial Intelligence Concentration

University of Nevada-Reno

Computer Science and Engineering Department

Reno, Nevada

Ph.D. in Computer Science & Engineering - Intelligent and Autonomous Systems Research

Rutgers University

New Brunswick, New Jersey

Ph.D. in Linguistics with Computational Linguistics Certificate

Stevens Institute of Technology

Schaefer School of Engineering & Science

Hoboken, New Jersey

Ph.D. in Computer Engineering

Ph.D. in Electrical Engineering - Applied Artificial Intelligence

Ph.D. in Electrical Engineering - Robotics and Smart Systems Research

Cornell University

Ithaca, New York

Linguistics Ph.D. - Computational Linguistics

Ph.D. in Computer Science

CUNY Graduate School and University Center

New York, New York

Ph.D. in Linguistics - Computational Linguistics

Long Island University-Brooklyn Campus

Graduate Department

Brooklyn, New York

Dual PharmD/M.S. in Artificial Intelligence

Rochester Institute of Technology

Golisano College of Computing and Information Sciences

Rochester, New York

North Carolina

Duke University

Duke Robotics

Durham, North Carolina

Ph.D. in ECE - Robotics Track

Ph.D. in MEMS - Robotics Track

Ohio State University-Main Campus

Department of Mechanical and Aerospace Engineering

Columbus, Ohio

PhD in Mechanical Engineering - Automotive Systems and Mobility (Connected and Automated Vehicles)

University of Cincinnati

College of Engineering and Applied Science

Cincinnati, Ohio

PhD in Computer Science and Engineering - Intelligent Systems Group

Oregon State University

Corvallis, Oregon

Ph.D. in Artificial Intelligence

Pennsylvania

Carnegie Mellon University

Machine Learning Department

Pittsburgh, Pennsylvania

PhD in Machine Learning & Public Policy

PhD in Neural Computation & Machine Learning

PhD in Statistics & Machine Learning

PhD Program in Machine Learning

Drexel University

Philadelphia, Pennsylvania

Doctorate in Mechanical Engineering - Robotics and Autonomy

Temple University

Computer & Information Sciences Department

PhD in Computer and Information Science - Artificial Intelligence

University of Pittsburgh-Pittsburgh Campus

School of Computing and Information

Ph.D. in Intelligent Systems

The University of Texas at Austin

Austin, Texas

Ph.D. with Graduate Portfolio Program in Robotics

The University of Texas at Dallas

Erik Jonsson School of Engineering and Computer Science

Richardson, Texas

University of Utah

Mechanical Engineering Department

Salt Lake City, Utah

Doctor of Philosophy - Robotics Track

University of Washington-Seattle Campus

Seattle, Washington

Ph.D. in Machine Learning and Big Data


Doctor of Philosophy in Artificial Intelligence and Data Science

The Doctor of Philosophy (Ph.D.) degree is a research-oriented degree requiring a minimum of 64 semester credit hours of approved courses and research beyond the Master of Science (M.S.) degree [96 credit hours beyond the Bachelor of Science (B.S.) degree]. The university places limitations on these credit hours in addition to the requirements of the Department of Civil Engineering.

A complete discussion of all university requirements is found in the current Texas A&M University Graduate Catalog.

NOTE: All documents requiring departmental signatures must be submitted to the Civil Engineering Graduate Office in DLEB 101 at least one day prior to the Office of Graduate Studies deadline.

Artificial Intelligence and Data Science Faculty Members

- Dr. Mark Burris

- Dr. Nasir Gharaibeh

- Dr. Stefan Hurlebaus

- Dr. Dominique Lord

- Dr. Xingmao “Samuel” Ma

- Dr. Ali Mostafavi

- Dr. Arash Noshadravan

- Dr. Stephanie Paal

- Dr. Luca Quadrifoglio

- Dr. Scott Socolofsky

- Dr. Yunlong Zhang

Admission

Admission to the AI/DS track is conditional upon meeting the general admission requirements. In addition, students may be admitted to the AI/DS track only if a faculty member affiliated with the track is willing to supervise the student and provide funding support (via GAT, GAR, or fellowship). If a current student is approved to change from one track to another, they must complete the Track Change Request Form and send it to the CVEN Graduate Advising Office so notification can be sent to their original area coordinator. Please read the CVEN department policy on changing tracks.

Departmental Requirements

In addition to fulfilling the University requirements for the Doctor of Philosophy (Ph.D.) degree, a student enrolled in the Civil Engineering graduate program in the Artificial Intelligence and Data Science area must satisfy the following department requirements.

- A minimum of 32 credit hours of graduate-level coursework taken through Texas A&M University [a minimum of 24 credit hours if the student already has taken at least another 24 credit hours of graduate course work for the Master of Science (M.S.) or Master of Engineering (MEng) degree].

- The remaining coursework requirement can be met with 32 hours of CVEN 691.

- Qualifying Exam

- Degree Plan

- Written Preliminary Exam

- Research Proposal

- Oral Preliminary Exam

- Completion of Dissertation

- Final Defense

Dissertation Topic

Students pursuing the AI/DS track work on dissertation topics with substantial interdisciplinary elements spanning civil engineering and computer science/AI. Such interdisciplinary research requires a student to develop depth of knowledge and skills across both domains.

Committee

The dissertation committee of a Ph.D. student in the AI/DS track can be composed of faculty from different departments with backgrounds and skills related to the subject matter of the dissertation research.

Students in the AI/DS track are strongly encouraged to form their dissertation committee prior to the qualification exam. If the dissertation committee is formed prior to the qualification exam, the exam questions will be developed by the committee in coordination with the AI/DS Track Coordinator. If the student's dissertation committee is not formed at the time of the qualification examination, the Track Coordinator and the student advisor will handle the development of the qualification examination.

| Course Number | Course Title | Subject |
| --- | --- | --- |
| 650 | STAT FND DATA SCIENCE | STAT |
| 647 | SPATIAL STATISTICS | STAT |
| 616 | STAT ASPECTS OF MACH LEARN I | STAT |
| 765 | MACH LEARN WITH NETWORKS | ECEN |
| 654 | STAT COMPUTING WITH R & PYTHON | STAT |
| 651 | STAT IN RESEARCH I | STAT |
| 633 | MACHINE LEARNING | CSCE |
| 639 | DATA MINING & ANALYSIS | STAT |
| 689 | SPTP: NETWORK SCI OF CITIES | URSC |
| 651 | STAT IN RESEARCH I | STAT |
| 689 | SPTP: NETWORK SCIENCE OF CITIES | URSC |
| 689 | SPTP: PROGRAMMING IN URBAN ANALYTICS | URSC |
| 689 | Machine Intelligence and Applications in CE | CVEN |

PhD in Artificial Intelligence

To enter the Doctor of Philosophy in Artificial Intelligence, you must apply online through the UGA Graduate School web page. There is an application fee, which must be paid at the time the application is submitted.

There are several items which must be included in the application:

- Standardized test scores, including the GRE.

- 3 letters of recommendation, preferably from university faculty and/or professional supervisors. We encourage you to submit the letters to the graduate school online as you complete the application process.

- A sample of scholarly writing, in English. This can be anything you've written but should give an accurate indication of your writing abilities. The writing sample can be a term paper, research report, journal article, published paper, college paper, etc.

- A completed Application for Graduate Assistantship, if you are interested in receiving funding.

- A Statement of Purpose.

- A Resume or Curriculum Vitae.

Further information on program admissions is found in the AI Institute Frequently Asked Questions (FAQ).

International Students should also review the links on the Information for International Students page for additional information relevant to the application process.

Graduate School Policies

University of Georgia Graduate School policies and requirements apply in addition to (and, in cases of conflict, take precedence over) those described here. It is essential that graduate students familiarize themselves with Graduate School policies, including minimum and continuous enrollment and other policies contained in the Graduate School Bulletin.

Students should also familiarize themselves with Graduate School Dates and Deadlines relevant to the degree.

Degree Requirements

Students of the doctoral program must complete a minimum of 40 hours of graduate coursework and 6 hours of dissertation credit (for a total of 46 credit hours), pass a comprehensive examination, and write and defend a dissertation. In addition, the University requires that all first-year graduate students enroll in a 1-credit-hour GradFirst seminar. Each of these requirements is described in greater detail below.

The degree program is offered using an in-person format, and classes are in general scheduled for full-time students. There are currently no special provisions for part-time, online, or off-campus students. Students are expected to attend all meetings of classes for which they are registered.

Program of Study

The Program of Study must include a minimum of 40 hours of graduate course work and a minimum of 6 hours of dissertation credit. Of the 40 hours of graduate course work, at least 20 hours must be 8000-level or 9000-level hours.
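The hour minimums above can be checked mechanically against a draft plan. The sketch below is illustrative only: the function name and the `(course_number, credit_hours)` tuple layout are assumptions, the sample plan is made up from courses listed on this page, and it does not check required courses, electives, or waivers.

```python
def program_of_study_problems(courses, dissertation_hours):
    """Check a draft plan against the stated hour minimums.

    courses: list of (course_number, credit_hours) tuples for graduate
    coursework, e.g. (8950, 4). Returns a list of unmet minimums.
    """
    problems = []
    coursework = sum(hours for _, hours in courses)
    # Hours from 8000-level or 9000-level courses.
    advanced = sum(hours for number, hours in courses if 8000 <= number <= 9999)

    if coursework < 40:
        problems.append(f"coursework is {coursework} hours; 40 required")
    if advanced < 20:
        problems.append(f"8000/9000-level work is {advanced} hours; 20 required")
    if dissertation_hours < 6:
        problems.append(f"dissertation credit is {dissertation_hours} hours; 6 required")
    return problems


# Made-up sample plan built from course numbers and hours listed on this page.
sample_plan = [
    (6510, 3), (8950, 4), (6550, 3), (6950, 1), (6340, 3),
    (8050, 4), (8920, 4), (8940, 4), (8945, 4), (8820, 4),
    (6300, 3), (6310, 3),
]
print(program_of_study_problems(sample_plan, dissertation_hours=6))
```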

Required Courses

The following courses must be completed unless specifically waived for students entering the program with a master’s degree in Artificial Intelligence or a related field, or for students with substantially related graduate course work. All waived credits may be replaced by an equal number of doctoral research or doctoral dissertation credits (ARTI 9000, Doctoral Research or ARTI 9300, Doctoral Dissertation). Substitutions must be approved for a particular student by that student's Advisory Committee and by the Graduate Coordinator.

- PHIL/LING 6510 Deductive Systems (3 hours)

- CSCI 6380 Data Mining (4 hours) or CSCI 8950 Machine Learning (4 hours)

- CSCI/PHIL 6550 Artificial Intelligence (3 hours)

- ARTI 6950 Faculty Research Seminar (1 hour)

- ARTI/PHIL 6340 Ethics and Artificial Intelligence (3 hours)

Elective Courses

In addition to the required courses above, at least 6 additional courses must be taken from Group A and Group B below, subject to the following requirements.

- At least 2 courses must be taken from Group A, from at least 2 areas.

- At least 2 courses must be taken from Group B, from at least 2 areas.

- At least 3 courses must be taken from a single area comprising the student’s chosen area of emphasis.

Since not all courses have the same number of credit hours, Ph.D. students may need to take additional graduate courses to complete the 40 hours.
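The elective rules above can be read as four independent checks. The sketch below is a hedged illustration in Python: the function name `check_electives` and its input format are invented for this example, and because this text does not say which areas belong to Group A or Group B, that mapping is passed in as a parameter that the caller must supply.

```python
from collections import Counter


def check_electives(courses, area_to_group):
    """Check a list of electives against the four stated rules.

    courses: list of (course_code, area) tuples, one area per course.
    area_to_group: dict mapping each area to "A" or "B". This mapping is
    an assumption supplied by the caller; it is not given in the text.
    """
    count_by_group = Counter()
    count_by_area = Counter()
    areas_by_group = {"A": set(), "B": set()}
    for _, area in courses:
        group = area_to_group[area]
        count_by_group[group] += 1
        count_by_area[area] += 1
        areas_by_group[group].add(area)
    return {
        "at_least_six_courses": len(courses) >= 6,
        "group_a_two_courses_two_areas": (
            count_by_group["A"] >= 2 and len(areas_by_group["A"]) >= 2
        ),
        "group_b_two_courses_two_areas": (
            count_by_group["B"] >= 2 and len(areas_by_group["B"]) >= 2
        ),
        "three_in_one_area": max(count_by_area.values(), default=0) >= 3,
    }


if __name__ == "__main__":
    # Purely hypothetical grouping, used only to exercise the function.
    grouping = {1: "A", 2: "A", 3: "A", 4: "B", 5: "B", 6: "B"}
    plan = [
        ("CSCI 6560", 1), ("CSCI 8050", 1), ("CSCI 8920", 1),
        ("CSCI 6360", 2), ("PHIL 6310", 4), ("LING 6570", 5),
    ]
    print(check_electives(plan, grouping))
```

Returning one flag per rule, rather than a single pass or fail, makes it easier to see which requirement a draft plan still misses.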

AREA 1: Artificial Intelligence Methodologies

- CSCI 6560 Evolutionary Computing (4 hours)

- CSCI 8050 Knowledge Based Systems (4 hours)

- CSCI/PHIL 8650 Logic and Logic Programming (4 hours)

- CSCI 8920 Decision Making Under Uncertainty (4 hours)

- CSCI/ENGR 8940 Computational Intelligence (4 hours)

- CSCI/ARTI 8950 Machine Learning (4 hours)

AREA 2: Machine Learning and Data Science

- CSCI 6360 Data Science II (4 hours)

- CSCI 8360 Data Science Practicum (4 hours)

- CSCI 8945 Advanced Representation Learning (4 hours)

- CSCI 8955 Advanced Data Analytics (4 hours)

- CSCI 8960 Privacy-Preserving Data Analysis (4 hours)

AREA 3: Machine Vision and Robotics

- CSCI/ARTI 6530 Introduction to Robotics (4 hours)

- CSCI 6800 Human Computer Interaction (4 hours)

- CSCI 6850 Biomedical Image Analysis (4 hours)

- CSCI 8850 Advanced Biomedical Image Analysis (4 hours)

- CSCI 8820 Computer Vision and Pattern Recognition (4 hours)

- CSCI 8530 Advanced Topics in Robotics (4 hours)

- CSCI 8535 Multi Robot Systems (4 hours)

AREA 4: Cognitive Modeling and Logic

- PHIL/LING 6300 Philosophy of Language (3 hours)

- PHIL 6310 Philosophy of Mind (3 hours)

- PHIL/LING 6520 Model Theory (3 hours)

- PHIL 8310 Seminar in Philosophy of Mind (max of 3 hours)

- PHIL 8500 Seminar in Problems of Logic (max of 3 hours)

- PHIL 8600 Seminar in Metaphysics (max of 3 hours)

- PHIL 8610 Epistemology (max of 3 hours)

- PSYC 6100 Cognitive Psychology (3 hours)

- PSYC 8240 Judgment and Decision Making (3 hours)

- CSCI 6860 Computational Neuroscience (4 hours)

AREA 5: Language and Computation

- ENGL 6885 Introduction to Humanities Computing (3 hours)

- LING 6021 Phonetics and Phonology (3 hours)

- LING 6080 Language and Complex Systems (3 hours)

- LING 6570 Natural Language Processing (3 hours)

- LING 8150 Generative Syntax (3 hours)

- LING 8580 Seminar in Computational Linguistics (3 hours)

AREA 6: Artificial Intelligence Applications

- ELEE 6280 Introduction to Robotics Engineering (3 hours)

- ENGL 6826 Style: Language, Genre, Cognition (3 hours)

- ENGL/LING 6885 Introduction to Humanities Computing (3 hours)

- FORS 8450 Advanced Forest Planning and Harvest Scheduling (3 hours)

- INFO 8000 Foundations of Informatics for Research and Practice

- MIST 7770 Business Intelligence (3 hours)

Students may under special circumstances use up to 6 hours from the following list to apply towards the Electives group requirement.

- ARTI 8800 Directed Readings in Artificial Intelligence

- ARTI 8000 Topics in Artificial Intelligence

Other courses may be substituted for those on the Electives lists, provided the subject matter of the course is sufficiently related to artificial intelligence and consistent with the educational objectives of the Ph.D. degree program. Substitutions can be made only with the permission of the student's Advisory Committee and the Graduate Coordinator.

In addition to the specific PhD program requirements, all first-year UGA graduate students must enroll in a 1-credit-hour GRSC 7001 (GradFIRST) seminar, which provides foundational training in research, scholarship, and professional development. Students may enroll in a section offered by any department, but it is recommended that AI students enroll in a section offered by AI Faculty Fellows. More information is available at the Graduate School website.

Core Competency

Core competency must be exhibited by each student and certified by the student’s advisory committee. This takes the form of achievement in the required courses of the curriculum. Students entering the Ph.D. program with a previous graduate degree sufficient to cover this basic knowledge will need to work with their advisory committee to certify their core competency. Students entering the Ph.D. program without sufficient graduate background to certify core competency must take at least three of the required courses, and then pursue certification with their advisory committee. A grade average of at least 3.56 (e.g., A-, A-, B+) must be achieved for three required courses (excluding ARTI 6950). Students below this average may take the fourth required course and achieve a grade average of at least 3.32 (e.g., A-, B+, B+, B).
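The two grade thresholds can be verified with simple arithmetic. The Python sketch below is a minimal illustration under stated assumptions: it uses a conventional plus/minus grade-point scale (A- = 3.7, B+ = 3.3, B = 3.0), takes an unweighted average across courses as the examples in the text suggest, and uses function names invented for this example.

```python
# Assumed grade-point scale; confirm against the official Graduate School scale.
GRADE_POINTS = {
    "A": 4.0, "A-": 3.7, "B+": 3.3, "B": 3.0,
    "B-": 2.7, "C+": 2.3, "C": 2.0,
}


def grade_average(grades):
    """Unweighted average of letter grades (an assumption of this sketch)."""
    return sum(GRADE_POINTS[g] for g in grades) / len(grades)


def meets_core_competency(grades):
    """Apply the stated thresholds to grades in required courses.

    grades: letter grades for three or four required courses,
    excluding ARTI 6950. Three courses need an average of at least 3.56;
    four courses need an average of at least 3.32.
    """
    if len(grades) == 3:
        return grade_average(grades) >= 3.56
    if len(grades) == 4:
        return grade_average(grades) >= 3.32
    raise ValueError("expected grades for three or four required courses")


if __name__ == "__main__":
    print(meets_core_competency(["A-", "A-", "B+"]))       # True
    print(meets_core_competency(["A-", "B+", "B+", "B"]))  # True
    print(meets_core_competency(["A-", "B+", "B+"]))       # False
```

With this scale the two examples from the text average roughly 3.57 and 3.33, just above their respective thresholds. Passing the numeric check is only one part of certification, which still requires committee approval.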

Core competency is certified by the unanimous approval of the student's Advisory Committee as well as the approval by the Graduate Coordinator. Students are strongly encouraged to meet the core competency requirement within their first three enrolled academic semesters (excluding summer semester). Core Competency Certification must be completed before approval of the Final Program of Study.

Comprehensive Examination

Each student of the doctoral program must pass a Ph.D. Comprehensive Examination covering the student's advanced coursework. The examination consists of a written part and an oral part. Students have at most two attempts to pass the written part. The oral part may not be attempted unless the written part has been passed.

Admission to Candidacy

The student is responsible for initiating an application for admission to candidacy once all requirements, except the dissertation prospectus and the dissertation, have been completed.

Dissertation and Dissertation Credit Hours

In addition to the coursework and comprehensive examination, every student must conduct research in artificial intelligence under the direction of an advisory committee and report the results of his or her research in a dissertation acceptable to the Graduate School. The dissertation must represent originality in research, independent thinking, scholarly ability, and technical mastery of a field of study. The dissertation must also demonstrate competent style and organization. While working on his/her dissertation, the student must enroll for a minimum of 6 credit hours of ARTI 9300 Doctoral Dissertation spread over at least 2 semesters.

Advisory Committee

Before the end of the third semester, each student admitted into the program should approach relevant faculty members and form an advisory committee. Until the committee is formed, the student will be advised by the graduate coordinator. The committee consists of a major professor and two other faculty members, as follows:

- The major professor and at least one other member must be full members of the Graduate Program Faculty.

- The major professor and at least one other member must be Institute for Artificial Intelligence Faculty Fellows.

Deviations from the 3-member advisory committee structure, including having more members, are in some cases permitted but must conform to Graduate School policies.

The major professor and advisory committee shall guide the student in planning the dissertation. The committee shall agree upon, document, and communicate expectations for the dissertation. These expectations may include publication or submission requirements but should not exceed reasonable expectations for the given research domain. During the planning stage, the student will prepare a dissertation prospectus in the form of a detailed written dissertation proposal. It should clearly define the problem to be addressed, critique the current state of the art, and explain the contributions to research expected from the dissertation work. When the major professor certifies that the dissertation prospectus is satisfactory, it must be formally considered by the advisory committee in a meeting with the student. This formal consideration may not take the place of the comprehensive oral examination.

Approval of the dissertation prospectus signifies that members of the advisory committee believe that it proposes a satisfactory research study. Approval of the prospectus requires the agreement of the advisory committee with no more than one dissenting vote as evidenced by their signing an appropriate form to be filed with the graduate coordinator’s office.

Graduation Requirements - Forms and Timeline

Before the end of the third semester in residence, a student must begin submitting to the Graduate School, through the graduate coordinator, the following forms: (i) a Preliminary Program of Study Form and (ii) an Advisory Committee Form. The Program of Study Form indicates how and when degree requirements will be met and must be formulated in consultation with the student's major professor. An Application for Graduation Form must also be submitted directly to the Graduate School. Forms must be submitted according to the following timeline:

- Advisory Committee Form (G130)—end of third semester

- Core Competency Form (Internal to IAI)—beginning of fourth semester

- Preliminary Doctoral Program of Study Form—Fourth semester

- Final Program of Study Form (G138)—before Comprehensive Examination

- Application for Admission to Candidacy (G162)—after Comprehensive Examination

- Application for Graduation Form (on Athena)—beginning of last semester

- Approval Form for Doctoral Dissertation (G164)—last semester

- ETD Submission Approval Form (G129)—last semester

Students should frequently check the Graduate School Dates and Deadlines webpage to ensure that all necessary forms are completed in a timely manner.

Student Handbook

Additional information on degree requirements and AI Institute policies can be found in the AI Student Handbook.

For information regarding the graduate programs in IAI, please contact:

Evette Dunbar [email protected] Boyd GSRC, Room 516 706-542-0358


PhD Programs in Artificial Intelligence

The ultimate graduate degree in artificial intelligence is a doctoral degree, also referred to as a Doctor of Philosophy or PhD. Many PhDs in artificial intelligence are in computer science or engineering with a specialization or research focus in artificial intelligence. PhD students will learn advanced subjects in the discipline, like algorithms, decision theory, stochastic processes, and optimization.

What are PhD Programs in Artificial Intelligence?

As mentioned above, PhD programs in artificial intelligence are usually PhDs in computer science with a concentration in artificial intelligence. There are other PhDs with specializations in artificial intelligence, as you will see in the titles of many of the degrees listed below. These may include Mechanical Engineering, Machine Learning, and Computational Linguistics.

Most classes in a PhD program will be on campus, but a few may be offered online. (Only one PhD program in AI is offered completely online, as you will see in the list below.) Some colleges and universities require PhD students to attend school full-time, while others admit part-time students.

Cost

According to EducationData.org, the cost of a Doctor of Philosophy averages $106,860 as of 2023. Programs vary widely in their cost, however, depending upon whether the university is public or private and how much financial assistance is available.

Who Should Study for a PhD in Artificial Intelligence?

Those who get PhDs in artificial intelligence are often interested in research positions, teaching, or software engineering.

Jobs that are commonly held by those with PhDs in artificial intelligence include, but are not limited to:

- Research scientists

- Software engineers

- Executives in business (Chief Technology Officer, etc.)

- Principal scientists

- Lead data scientists

- Machine learning engineers

- Computer scientists

According to Payscale.com, the average salary for a person holding a PhD in Artificial Intelligence as of 2023 is $115,000 annually. The highest salaries are earned by executives such as Chief Technology Officers holding PhDs in AI, who average $198,235 annually. Computer scientists with PhDs in artificial intelligence fall at the lower end of the salary range, making $91,926 per year.

What are the Admissions Requirements for PhD Programs in Artificial Intelligence?

To be admitted to a PhD program in artificial intelligence, you will need at least a bachelor’s degree or a master’s degree in a related field. You must provide a resume, transcripts showing a solid academic background, a personal statement of interest, and strong personal and professional recommendations. The Graduate Record Examination (GRE) may or may not be required. Check the GRE policies for doctoral students at each school to which you plan to apply.

Be prepared for a long period of study, as the average PhD program takes three to six years to complete. Keep in mind that, once admitted, many doctoral programs in AI will require you to maintain a 3.5 GPA in order to remain in the program.

What is Studied in a PhD Program in Artificial Intelligence?

Courses that you will study in a PhD program in artificial intelligence will vary depending upon the specialization you choose, as well as your prior education. Some schools will give you credit for your master’s degree classes, while others will not. Expect courses in algorithms, analytics, statistics, machine learning, and robotics, among others.

Dissertation

The dissertation is the culmination of your doctoral degree studies, and shows the published research you have conducted on artificial intelligence and/or your specialization. It will take about two years to research and write a dissertation, and you will start working on it during the second or third year of your PhD program.

Specializations

Potential specializations in PhD programs in AI are listed in the degrees below. They include, but are not limited to:

- Data Science

- Automotive Systems

- Computational Linguistics

- Machine Learning

- Autonomous Systems

Doctoral Programs in Artificial Intelligence

Below you will find a list of PhD programs in various fields of artificial intelligence across the U.S., as of 2023.

PhD Programs in Computational Linguistics and Natural Language Processing

District of Columbia

- Doctor of Philosophy in Linguistics: Computational Linguistics

- Doctor of Philosophy in Linguistics: Concentration in Computational Linguistics

Massachusetts

- Doctor of Philosophy in Computer Science with Studies in Computational Linguistics

- Doctor of Philosophy in Linguistics: Computational Methods

New York

- Doctor of Philosophy in Linguistics: Computational Linguistics

- Doctor of Philosophy in Linguistics: Concentration in Computational Linguistics

- Doctor of Philosophy in Linguistics-Computational Linguistics track

Doctoral Programs in Machine Learning

- Doctor of Philosophy in Computer Engineering: Intelligent Systems and Machine Learning

- Doctor of Philosophy in Machine Learning

- Doctor of Philosophy in Computer Science: Artificial Intelligence and Machine Learning

- Doctor of Philosophy in Computer Science: Machine Learning emphasis

- Doctor of Philosophy in Applied Mathematics: Machine Learning and Artificial Intelligence concentration

Pennsylvania

- Doctor of Philosophy in Statistics & Machine Learning

- Doctor of Philosophy in Machine Learning & Public Policy

- Doctor of Philosophy in Neural Computation & Machine Learning

- Doctor of Philosophy in Statistics: Machine Learning and Big Data track

Doctoral Programs in Robotics and Autonomous Systems

- Doctor of Philosophy in Electrical & Computer Engineering: Intelligent Systems, Robotics and Control

- Doctor of Philosophy in Mechanical Engineering: Robotics and Systems Design

- Doctor of Philosophy in Intelligent Systems & Robotics

- Doctor of Philosophy in Mechanical Engineering: Robotics

- Doctor of Philosophy in Systems Engineering: Automation, Robotics and Control

- Doctor of Philosophy in Human-Robot Interaction

- Doctor of Philosophy in Electrical and Computer Engineering: Robotics

- Doctor of Philosophy in Computer Science & Engineering- Intelligent & Autonomous Systems Research

New Jersey

- Doctor of Philosophy in Electrical Engineering: Robotics and Smart Systems Research

North Carolina

- Doctor of Philosophy in Electrical and Computer Engineering: Signal and Information Processing Track: Robotics Certificate

- Doctor of Philosophy in Mechanical Engineering and Materials Science: Robotics Certificate

- Doctor of Philosophy in Mechanical Engineering: Automotive Systems and Mobility

- Offers BS-PhD and MS-PhD paths

- Doctor of Philosophy in Mechanical Engineering: Robotics and Autonomy

Texas

- Doctor of Philosophy in Robotics

- Doctor of Philosophy in Mechanical Engineering: Robotics Track

- Doctor of Philosophy in Computer Science: Artificial Intelligence Research

- Doctor of Philosophy in Electrical Engineering: Intelligent Systems Research

- Doctor of Philosophy in Computer Science: Artificial Intelligence Emphasis

- Doctor of Philosophy in Intelligent Systems Engineering

- Doctor of Philosophy in Artificial Intelligence

- Online program

- Doctor of Philosophy in Computer Engineering: Data Science and Intelligent Systems Research Area

- Doctor of Philosophy in Computer Science: Artificial Intelligence

- Doctor of Philosophy in Information Technology: Artificial Intelligence concentration

- Doctor of Philosophy in Computer Engineering

- Doctor of Philosophy in Electrical Engineering: Applied Artificial Intelligence

- Pharm.D. and Master of Science in Artificial Intelligence

- Doctor of Philosophy in Computing and Information Sciences: Artificial Intelligence Research

Oregon

- Doctor of Philosophy in Computer and Information Science: Artificial Intelligence

- Doctor of Philosophy in Intelligent Systems

- Doctor of Philosophy in Computing and Information Sciences – Artificial Intelligence Research

Programs: Master of Philosophy in Artificial Intelligence, MPhil(AI); Doctor of Philosophy in Artificial Intelligence, PhD(AI)

Duration: MPhil: 2 years; PhD: 3 years (with a relevant research master’s degree) or 4 years (without a relevant research master’s degree)

Artificial Intelligence Thrust Area Information Hub

Program Co-Directors: Prof Nevin ZHANG, Professor of Computer Science and Engineering; Prof Fangzhen LIN, Professor of Computer Science and Engineering

https://gz.ust.hk/academics/four-hubs/information-hub

The Artificial Intelligence Thrust Area under the Information Hub at HKUST(GZ) is established to project and grow HKUST’s strength in AI and contribute to the nation’s drive to become a world leader in AI. The Master of Philosophy (MPhil) and Doctor of Philosophy (PhD) Programs in Artificial Intelligence are offered as an integral part of the endeavor to establish a world-renowned AI research center with the mission to advance applied research in AI as well as fundamental research relevant to the application areas. The MPhil Program aims to train students in independent and interdisciplinary research in AI. An MPhil graduate is expected to demonstrate sound knowledge in the discipline and to be able to synthesize and create new knowledge, making a contribution to the field.

The PhD Program aims to train students in original research in AI, and to cultivate the independent, interdisciplinary and innovative thinking that is essential for a successful career in AI-related industry or academia. A PhD graduate is expected to demonstrate mastery of knowledge in the chosen discipline and to be able to synthesize and create new knowledge, making original and substantial scientific contributions to the discipline.

On successful completion of the MPhil program, graduates will be able to:

- Demonstrate thorough knowledge of the literature and a comprehensive understanding of scientific methods and techniques relevant to AI;

- Demonstrate practical skills in building AI systems;

- Independently pursue research or innovation of significance in AI applications; and

- Demonstrate skills in oral and written communication sufficient for a professional career.

On successful completion of the PhD program, graduates will be able to:

- Critically apply theories, methodologies, and knowledge to address fundamental questions in AI;

Minimum Credit Requirement

MPhil: 15 credits; PhD: 21 credits

Credit Transfer

Students who have taken equivalent courses at HKUST or other recognized universities may be granted credit transfer on a case-by-case basis, up to a maximum of 3 credits for MPhil students, and 6 credits for PhD students.

Cross-disciplinary Core Courses

2 credits

All students are required to complete either IIMP 6010 or IIMP 6030. Students may complete the remaining courses as part of the credit requirements, as requested by the Program Planning cum Thesis Supervision Committee.

- Hub Core Courses

Students are required to complete at least one Hub core course (2 credits) from the Information Hub and at least one core course (2 credits) from other Hubs.

Information Hub Core Course

Other Hub Core Courses

Courses on Domain Knowledge

MPhil: minimum 9 credits of coursework; PhD: minimum 15 credits of coursework

Under this requirement, each student is required to take one of the required courses, and other electives to form an individualized curriculum relevant to the cross-disciplinary thesis research.

For PhD, students must complete the AI required course in the first year of their study and obtain a B+ or above. Students who cannot meet the B+ requirement have to retake the course or take another AI required course in the second year to make it up.

Only one Independent Study course may be used to satisfy the course requirements.

To ensure that students take appropriate courses to equip them with the needed domain knowledge, each student has a Program Planning cum Thesis Supervision Committee, which approves the courses to be taken as soon as possible after program commencement and no later than the end of the first year. Depending on the approved curriculum, individual students may be required to complete additional credits beyond the minimum credit requirements.

Required Course List

Students are required to take one of the required courses listed below:

Sample Elective Course List

To meet individual needs, students will take courses in different areas, which may include, but are not limited to, the courses and areas listed below.

#: Full-time PhD students are encouraged to complete at least 6 months of AI-related research internship.

- Additional Foundation Courses

Individual students may be required to take foundation courses to strengthen their academic background and research capacity in related areas, which will be specified by the Program Planning cum Thesis Supervision Committee. The credits earned cannot be counted toward the credit requirements.

- Graduate Teaching Assistant Training

All full-time RPg students are required to complete PDEV 6800. The course is composed of a 10-hour training offered by the Center for Education Innovation (CEI), and session(s) of instructional delivery to be assigned by the respective departments. Upon satisfactory completion of the training conducted by CEI, MPhil students are required to give at least one 30-minute session of instructional delivery in front of a group of students for one term. PhD students are required to give at least one such session each in two different terms. The instructional delivery will be formally assessed.

- Professional Development Course Requirement

Students are required to complete PDEV 6770. The 1 credit earned from PDEV 6770 cannot be counted toward the credit requirements.

PhD students who are HKUST MPhil graduates and have completed PDEV 6770 or other professional development courses offered by the University before may be exempted from taking PDEV 6770, subject to prior approval of the Program Planning cum Thesis Supervision Committee.

Students are required to complete INFH 6780. The 1 credit earned from INFH 6780 cannot be counted toward the credit requirements.

PhD students who are HKUST MPhil graduates and have completed INFH 6780 or other professional development courses offered by the University before may be exempted from taking INFH 6780, subject to prior approval of the Program Planning cum Thesis Supervision Committee.

- English Language Requirement

Full-time RPg students are required to take an English Language Proficiency Assessment (ELPA) Speaking Test administered by the Center for Language Education before the start of their first term of study. Students whose ELPA Speaking Test score is below Level 4, or who failed to take the test in their first term of study, are required to take LANG 5000 until they pass the course by attaining at least Level 4 in the ELPA Speaking Test before graduation. The 1 credit earned from LANG 5000 cannot be counted toward the credit requirements.

Students are required to take one of the above three courses. The credit earned cannot be counted toward the credit requirements. Students can be exempted from taking this course with the approval of the Program Planning cum Thesis Supervision Committee.

- Postgraduate Seminar

Students are required to complete AIAA 6101 and AIAA 6102 in two terms. The credit earned cannot be counted toward the credit requirements.

- PhD Qualifying Examination

PhD students are required to pass a qualifying examination to obtain PhD candidacy following established policy.

- Thesis Research

MPhil:

- Registration in AIAA 6990; and

- Presentation and oral defense of the MPhil thesis.

PhD:

- Registration in AIAA 7990; and

- Presentation and oral defense of the PhD thesis.

Last Update: 24 July 2020

To qualify for admission, applicants must meet all of the following requirements. Admission is selective and meeting these minimum requirements does not guarantee admission.

Applicants seeking admission to a master's degree program should have obtained a bachelor’s degree from a recognized institution, or an approved equivalent qualification;

Applicants seeking admission to a doctoral degree program should have obtained a bachelor’s degree with a proven record of outstanding performance from a recognized institution; or presented evidence of satisfactory work at the postgraduate level on a full-time basis for at least one year, or on a part-time basis for at least two years.

Applicants have to fulfill English Language requirements with one of the following proficiency attainments:

TOEFL-iBT: 80*

TOEFL-pBT: 550

TOEFL-Revised paper-delivered test: 60 (total scores for Reading, Listening and Writing sections)

IELTS (Academic Module): overall score: 6.5 and all sub-scores: 5.5

* refers to the total score in one single attempt

Applicants are not required to present a TOEFL or IELTS score if:

- their first language is English, or

- they obtained the bachelor's degree (or equivalent) from an institution where the medium of instruction was English.


Department of Linguistics and Philosophy

Ethics & AI @ MIT

Computing and AI: Humanistic Perspectives from MIT

The advent of artificial intelligence presents our species with an historic opportunity — disguised as an existential challenge: Can we stay human in the age of AI?

The Tools of Moral Philosophy

"Framing a discussion of the risks of advanced technology entirely in terms of ethics suggests that the problems raised are ones that can and should be solved by individual action."

Computing and AI Series: Humanistic Perspective from Philosophy at MIT

“The MIT Schwarzman College of Computing presents an opportunity for MIT to be an intellectual leader in the ethics of technology. The Ethics Lab we propose could turn this opportunity into reality.”

Ethical AI by Design | Q&A with Abby Everett Jaques PhD ’18

MIT postdoctoral associate is bringing philosophical tools to ethical questions related to information technologies.

Computing and AI Series: Humanistic Perspective from Women’s and Gender Studies at MIT

"The Schwarzman College of Computing presents MIT with a unique opportunity to take a leadership role in addressing some of most pressing challenges that have emerged from the ubiquitous role computing technologies now play in our society — including [...]

SHASS Computing and Society Concentration

The Computing and Society Concentration introduces students to critical thinking about the social and ethical dimensions of computing.

Drawing on selected classes from nine MIT-SHASS units, this concentration helps students understand that computing is a social practice with profound human implications, and that the humanities and social sciences offer insights to improve the cultural, ethical and political impact of contemporary and future technology.

Concentration requirement: four subjects taken from nine MIT-SHASS units:

The Schwarzman College of Computing

A new era of computing and AI education, research, and innovation.

Ethics & Computing Lecture Series

A lecture series focused on the interface of ethics, politics, and computing.

Philosophy & Computing Email List

Subscribe for anything to do with philosophy and computing

Department of Philosophy

Dietrich College of Humanities and Social Sciences

Ethics & Artificial Intelligence

Recent advances in computer science and robotics are rapidly producing computational systems with the ability to perform tasks that were once reserved solely for humans. In a variety of sectors of life, from driverless cars and automated factories, to medical diagnostics and personal care robots, to military drones and cyber defense systems, the deployment of computational decision makers raises a complex array of ethical issues.

Work on these issues in the Philosophy Department is distinctive for its interdisciplinary character, its deep connections with the technical disciplines driving these advances, and its focus on concrete ethical problems that are pressing issues now, or (likely) in the next five to ten years. It is also distinctive for its focus on the likely broader social impacts of the integration of artificial decision makers into various social systems, including the assessment of ways that human social relations are likely to change in light of the new forms of interaction that such systems are likely to enable. Moreover, we work to proactively shape the development of these technologies towards more ethical processes, products, and outcomes.

Danks has explored the importance of trust in systems with autonomous capabilities, including the ways this important ethical value can impact human understanding of those systems, and our incorporation of them into functional and effective teams. He has examined these issues in a range of domains, including security & defense, cyber-systems, healthcare, and autonomous vehicles. This work raises and addresses basic questions about responsibility, liability, and the permissible division of labor between human and computational systems.

London and Danks have explored challenges in the development and regulation of autonomous systems, and the respects in which oversight mechanisms from other areas might usefully be emulated in this context. Their work draws heavily on a model of technological development articulated by London and colleagues in the context of drug development. They have also explored issues of bias in algorithms and the way that normative standards for ethically appropriate processes and outcomes are essential to evaluating the potential for different kinds of bias in algorithms.

As ambitions increase for developing computational systems that are capable of making a wider range of ethical decisions it becomes increasingly important to understand the structure of ethical decision making and the prospects for implementing such structures in various kinds of formal systems. Here, foundational issues about methodology in theoretical and applied ethics intersect with formal methods from game and decision theory, causal modeling, and machine learning.

Finally, the work of several faculty, such as Wenner and London, is relevant to understanding the conditions under which these new technologies might be used in ways that enhance human capabilities or exacerbate domination or exploitation.

Doctor of Philosophy with a major in Machine Learning

The Doctor of Philosophy with a major in Machine Learning program has the following principal objectives, each of which supports an aspect of the Institute’s mission:

- Create students who are able to advance the state of knowledge and practice in machine learning through innovative research contributions.

- Create students who are able to integrate and apply principles from computing, statistics, optimization, engineering, mathematics, and science to innovate, create machine learning models, and apply them to solve important real-world, data-intensive problems.

- Create students who are able to participate in multidisciplinary teams that include individuals whose primary background is in statistics, optimization, engineering, mathematics and science.

- Provide a high-quality education that prepares individuals for careers in industry, government (e.g., national laboratories), and academia in terms of knowledge, computational (e.g., software development) skills, and mathematical modeling skills.

- Foster multidisciplinary collaboration among researchers and educators in areas such as computer science, statistics, optimization, engineering, social science, and computational biology.

- Foster economic development in the state of Georgia.

- Advance Georgia Tech’s position of academic leadership by attracting high quality students who would not otherwise apply to Tech for graduate study.

All PhD programs must incorporate a standard set of Requirements for the Doctoral Degree.

The central goal of the PhD program is to train students to perform original, independent research. The most important part of the curriculum is the successful defense of a PhD Dissertation, which demonstrates this research ability. The academic requirements are designed in service of this goal.

The curriculum for the PhD in Machine Learning is truly multidisciplinary, containing courses taught in nine schools across three colleges at Georgia Tech: the Schools of Computational Science and Engineering, Computer Science, and Interactive Computing in the College of Computing; the Schools of Aerospace Engineering, Chemical and Biomolecular Engineering, Industrial and Systems Engineering, Electrical and Computer Engineering, and Biomedical Engineering in the College of Engineering; and the School of Mathematics in the College of Science.

Summary of General Requirements for a PhD in Machine Learning

- Core curriculum (4 courses, 12 hours). Machine Learning PhD students will be required to complete courses in four different areas: Mathematical Foundations, Probabilistic and Statistical Methods in Machine Learning, ML Theory and Methods, and Optimization.

- Area electives (5 courses, 15 hours).

- Responsible Conduct of Research (RCR) (1 course, 1 hour, pass/fail). Georgia Tech requires that all PhD students complete an RCR requirement that consists of an online component and in-person training. The online component is completed during the student’s first semester enrolled at Georgia Tech. The in-person training is satisfied by taking PHIL 6000 or their associated academic program’s in-house RCR course.

- Qualifying examination (1 course, 3 hours). This consists of a one-semester independent literature review followed by an oral examination.

- Doctoral minor (2 courses, 6 hours).

- Research Proposal. The purpose of the proposal is to give the faculty an opportunity to give feedback on the student’s research direction, and to make sure the student is developing into an able communicator.

- PhD Dissertation.
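As a quick check on the summary above, the credit hours that are stated explicitly can be tallied. The sketch below is illustrative only: the dictionary and function names are hypothetical, the figures are copied from the list above, and requirements with no stated hours (the research proposal and the dissertation) are left out, so the result should not be read as an official program total.

```python
# Credit hours per requirement, copied from the summary list above.
# Requirements with no hours stated (research proposal, dissertation)
# are intentionally omitted. Names here are illustrative, not official.
LISTED_HOURS = {
    "Core curriculum": 12,
    "Area electives": 15,
    "Responsible Conduct of Research": 1,
    "Qualifying examination": 3,
    "Doctoral minor": 6,
}


def total_listed_hours(hours=LISTED_HOURS):
    """Sum the credit hours that the summary states explicitly."""
    return sum(hours.values())


if __name__ == "__main__":
    for name, h in LISTED_HOURS.items():
        print(f"{name}: {h} hours")
    print("Total of listed hours:", total_listed_hours())
```

Under these assumptions the listed items add up to 12 + 15 + 1 + 3 + 6 = 37 hours.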

Almost all of the courses in both the core and elective categories are already taught regularly at Georgia Tech. However, two core courses (designated in the next section) are being developed specifically for this program. The proposed outlines for these courses can be found in the Appendix. Students who complete these required courses as part of a master’s program will not need to repeat the courses if they are admitted to the ML PhD program.

Core Courses

Machine Learning PhD students will be required to complete courses in four different areas. With the exception of the Foundations course, each of these area requirements can be satisfied using existing courses from the College of Computing or Schools of ECE, ISyE, and Mathematics.

Machine Learning core:

Mathematical Foundations of Machine Learning. This required course is the gateway into the program, and covers the key subjects from applied mathematics needed for a rigorous graduate program in ML. Particular emphasis will be put on advanced concepts in linear algebra and probabilistic modeling. This course is cross-listed between CS, CSE, ECE, and ISyE.

ECE 7750 / ISYE 7750 / CS 7750 / CSE 7750 Mathematical Foundations of Machine Learning

Probabilistic and Statistical Methods in Machine Learning

- ISYE 6412, Theoretical Statistics

- ECE 7751 / ISYE 7751 / CS 7751 / CSE 7751, Probabilistic Graphical Models

- MATH 7251, High Dimension Probability

- MATH 7252, High Dimension Statistics

Machine Learning: Theory and Methods. This course serves as an introduction to the foundational problems, algorithms, and modeling techniques in machine learning. Each of the courses listed below treats roughly the same material using a mix of applied mathematics and computer science, and each has a different balance between the two.

- CS 7545, Machine Learning Theory and Methods

- CS 7616, Pattern Recognition

- CSE 6740 / ISYE 6740, Computational Data Analysis

- ECE 6254, Statistical Machine Learning

- ECE 6273, Methods of Pattern Recognition with Applications to Voice

Optimization. Optimization plays a crucial role in both developing new machine learning algorithms and analyzing their performance. The courses below all provide a rigorous introduction to this topic; each emphasizes different material and provides a unique balance of mathematics and algorithms.

- ECE 8823, Convex Optimization: Theory, Algorithms, and Applications

- ISYE 6661, Linear Optimization

- ISYE 6663, Nonlinear Optimization

- ISYE 7683, Advanced Nonlinear Programming

After core requirements are satisfied, all courses listed in the core not already taken can be used as (appropriately classified) electives.

In addition to meeting the core area requirements, each student is required to complete five elective courses, which provide breadth in ML. These courses must be chosen from at least two of the five subject areas listed below. In addition, students can use up to six special problems research hours to satisfy this requirement.

i. Statistics and Applied Probability: To build breadth and depth in the areas of statistics and probability as applied to ML.

- AE 6505, Kalman Filtering

- AE 8803, Gaussian Processes

- BMED 6700, Biostatistics

- ECE 6558, Stochastic Systems

- ECE 6601, Random Processes

- ECE 6605, Information Theory

- ISYE 6402, Time Series Analysis

- ISYE 6404, Nonparametric Data Analysis

- ISYE 6413, Design and Analysis of Experiments

- ISYE 6414, Regression Analysis

- ISYE 6416, Computational Statistics

- ISYE 6420, Bayesian Statistics

- ISYE 6761, Stochastic Processes I

- ISYE 6762, Stochastic Processes II

- ISYE 7400, Adv Design-Experiments

- ISYE 7401, Adv Statistical Modeling

- ISYE 7405, Multivariate Data Analysis

- ISYE 8803, Statistical and Probabilistic Methods for Data Science

- ISYE 8813, Special Topics in Data Science

- MATH 6221, Probability Theory for Scientists and Engineers

- MATH 6266, Statistical Linear Modeling

- MATH 6267, Multivariate Statistical Analysis

- MATH 7244, Stochastic Processes and Stochastic Calculus I

- MATH 7245, Stochastic Processes and Stochastic Calculus II

ii. Advanced Theory: To build a deeper understanding of foundations of ML.

- AE 8803, Optimal Transport Theory and Applications

- CS 7280, Network Science

- CS 7510, Graph Algorithms

- CS 7520, Approximation Algorithms

- CS 7530, Randomized Algorithms

- CS 7535, Markov Chain Monte Carlo Algorithms

- CS 7540, Spectral Algorithms

- CS 8803, Continuous Algorithms

- ECE 6283, Harmonic Analysis and Signal Processing

- ECE 6555, Linear Estimation

- ISYE 7682, Convexity

- MATH 6112, Advanced Linear Algebra

- MATH 6241, Probability I

- MATH 6262, Advanced Statistical Inference

- MATH 6263, Testing Statistical Hypotheses

- MATH 6580, Introduction to Hilbert Space

- MATH 7338, Functional Analysis

- MATH 7586, Tensor Analysis

- MATH 88XX, Special Topics: High Dimensional Probability and Statistics

iii. Applications: To develop breadth and depth in a variety of application domains impacted by ML.

- AE 6373, Advanced Design Methods

- AE 8803, Machine Learning for Control Systems

- AE 8803, Nonlinear Stochastic Optimal Control

- BMED 6780, Medical Image Processing

- BMED 6790 / ECE 6790, Information Processing Models in Neural Systems

- BMED 7610, Quantitative Neuroscience

- BMED 8813 BHI, Biomedical and Health Informatics

- BMED 8813 MHI, mHealth Informatics

- BMED 8813 MLB, Machine Learning in Biomedicine

- BMED 8823 ALG, OMICS Data and Bioinformatics Algorithms

- CHBE 6745, Data Analytics for Chemical Engineers

- CHBE 6746, Data-Driven Process Engineering

- CS 6440, Introduction to Health Informatics

- CS 6465, Computational Journalism

- CS 6471, Computational Social Science

- CS 6474, Social Computing

- CS 6475, Computational Photography

- CS 6476, Computer Vision

- CS 6601, Artificial Intelligence

- CS 7450, Information Visualization

- CS 7476, Advanced Computer Vision

- CS 7630, Autonomous Robots

- CS 7632, Game AI

- CS 7636, Computational Perception

- CS 7643, Deep Learning

- CS 7646, Machine Learning for Trading

- CS 7647, Machine Learning with Limited Supervision

- CS 7650, Natural Language Processing

- CSE 6141, Massive Graph Analysis

- CSE 6240, Web Search and Text Mining

- CSE 6242, Data and Visual Analytics

- CSE 6301, Algorithms in Bioinformatics and Computational Biology

- ECE 4580, Computational Computer Vision

- ECE 6255, Digital Processing of Speech Signals

- ECE 6258, Digital Image Processing

- ECE 6260, Data Compression and Modeling

- ECE 6273, Methods of Pattern Recognition with Application to Voice

- ECE 6550, Linear Systems and Controls

- ECE 8813, Network Security

- ISYE 6421, Biostatistics

- ISYE 6810, Systems Monitoring and Prognosis

- ISYE 7201, Production Systems

- ISYE 7204, Info Prod & Ser Sys

- ISYE 7203, Logistics Systems

- ISYE 8813, Supply Chain Inventory Theory

- HS 6000, Healthcare Delivery

- MATH 6759, Stochastic Processes in Finance

- MATH 6783, Financial Data Analysis

iv. Computing and Optimization: To provide more breadth and foundation in areas of math, optimization and computation for ML.

- AE 6513, Mathematical Planning and Decision-Making for Autonomy

- AE 8803, Optimization-Based Learning Control and Games

- CS 6515, Introduction to Graduate Algorithms

- CS 6550, Design and Analysis of Algorithms

- CSE 6140, Computational Science and Engineering Algorithms

- CSE 6643, Numerical Linear Algebra

- CSE 6644, Iterative Methods for Systems of Equations

- CSE 6710, Numerical Methods I

- CSE 6711, Numerical Methods II

- ECE 6553, Optimal Control and Optimization

- ISYE 6644, Simulation

- ISYE 6645, Monte Carlo Methods

- ISYE 6662, Discrete Optimization

- ISYE 6664, Stochastic Optimization

- ISYE 6679, Computational Methods for Optimization

- ISYE 7686, Advanced Combinatorial Optimization

- ISYE 7687, Advanced Integer Programming