What Is Data Cleaning and Why Does It Matter?

Effective data cleaning is a vital part of the data analytics process. But what is it, why is it important, and how do you do it?

Good data hygiene is so important for business. For starters, it’s good practice to keep on top of your data, ensuring that it’s accurate and up-to-date. However, data cleaning is also a vital part of the data analytics process. If your data has inconsistencies or errors, you can bet that your results will be flawed, too. And when you’re making business decisions based on those insights, it doesn’t take a genius to figure out what might go wrong!

In a field like marketing, bad insights can mean wasting money on poorly targeted campaigns. In a field like healthcare or the sciences, it can quite literally mean the difference between life and death. In this article, we’ll explore exactly what data cleaning is and why it’s so vital to get it right. We’ll also provide an overview of the key steps you should take when cleaning your data.

Why not get familiar with data cleaning and the rest of the data analytics process in our free 5-day data short course?

We’ll answer the following questions:

- What is data cleaning?

- Why is data cleaning important?

- How do you clean data?

- What are some of the most useful data cleaning tools?

1. What is data cleaning?

Data cleaning (sometimes also known as data cleansing or data wrangling) is an important early step in the data analytics process.

This crucial exercise, which involves preparing and validating data, usually takes place before your core analysis. Data cleaning is not just a case of removing erroneous data, although that’s often part of it. The majority of work goes into detecting rogue data and (wherever possible) correcting it.

What is rogue data?

‘Rogue data’ includes things like incomplete, inaccurate, irrelevant, corrupt or incorrectly formatted data. The process also involves deduplicating, or ‘deduping’. This effectively means merging or removing identical data points.

Why is it important to correct rogue data?

The answer is straightforward enough: if you don’t, they’ll impact the results of your analysis.

Since data analysis is commonly used to inform business decisions, results need to be accurate. In this case, it might seem safer simply to remove rogue or incomplete data. But this poses problems, too: an incomplete dataset will also impact the results of your analysis. That’s why one of the main aims of data cleaning is to keep as much of a dataset intact as possible. This helps improve the reliability of your insights.

Data cleaning is not only important for data analysis. It’s also important for general business housekeeping (or ‘data governance’). The sources of big data are dynamic and constantly changing. Regularly maintaining databases, therefore, helps you keep on top of things. This has several additional benefits, which we’ll cover in the next section.

Want to try your hand at cleaning a dataset? You may be interested in this introductory tutorial to data cleaning, hosted by Dr. Humera Noor Minhas.

2. Why is data cleaning important?

A common refrain you’ll hear in the world of data analytics is: ‘garbage in, garbage out’. This maxim, so often used by data analysts, even has its own acronym… GIGO. But what does it mean?

Essentially, GIGO means that if the quality of your data is sub-par, then the results of any analysis using those data will also be flawed. Even if you follow every other step of the data analytics process to the letter, if your data is a mess, it won’t make a difference.

For this reason, the importance of properly cleaning data can’t be overstated. It’s like creating a foundation for a building: do it right and you can build something strong and long-lasting. Do it wrong, and your building will soon collapse. This mindset is why data analysts are often estimated to spend anywhere from 60-80% of their time carrying out data cleaning activities. Beyond data analytics, good data hygiene has several other benefits. Let’s look at those now.

Key benefits of data cleaning

As we’ve covered, data analysis requires effectively cleaned data to produce accurate and trustworthy insights. But clean data has a range of other benefits, too:

- Staying organized: Today’s businesses collect lots of information from clients, customers, product users, and so on. These details include everything from addresses and phone numbers to bank details and more. Cleaning this data regularly means keeping it tidy. It can then be stored more effectively and securely.

- Avoiding mistakes: Dirty data doesn’t just cause problems for data analytics. It also affects daily operations. For instance, marketing teams usually have a customer database. If that database is in good order, they’ll have access to helpful, accurate information. If it’s a mess, mistakes are bound to happen, such as using the wrong name in personalized mailouts.

- Improving productivity: Regularly cleaning and updating data means rogue information is quickly purged. This saves teams from having to wade through old databases or documents to find what they’re looking for.

- Avoiding unnecessary costs: Making business decisions with bad data can lead to expensive mistakes. But bad data can incur costs in other ways too. Simple things, like processing errors, can quickly snowball into bigger problems. Regularly checking data allows you to detect blips sooner. This gives you a chance to correct them before they require a more time-consuming (and costly) fix.

- Improving data mapping: Increasingly, organizations are looking to improve their internal data infrastructures. For this, they often hire data analysts to carry out data modeling and to build new applications. Having clean data from the start makes it far easier to collate and map, meaning that a solid data hygiene plan is a sensible measure.

Data quality

Key to data cleaning is the concept of data quality. Data quality measures the objective and subjective suitability of any dataset for its intended purpose.

There are a number of characteristics that affect the quality of data including accuracy, completeness, consistency, timeliness, validity, and uniqueness. You can learn more about data quality in this full article.

3. How to clean your data (step-by-step)

So far, we’ve covered what data cleaning is and why it’s important. In this section, we’ll explore the practical aspects of effective data cleaning. Since there are multiple approaches you can take for completing each of these tasks, we’ll focus instead on the high-level activities.

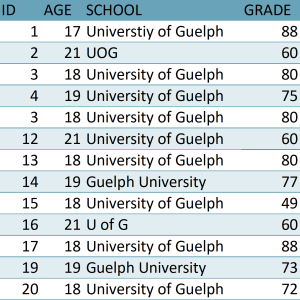

Step 1: Get rid of unwanted observations

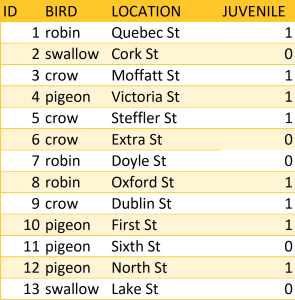

The first stage in any data cleaning process is to remove the observations (or data points) you don’t want. This includes irrelevant observations, i.e. those that don’t fit the problem you’re looking to solve.

For instance, if we were running an analysis on vegetarian eating habits, we could remove any meat-related observations from our dataset. This step of the process also involves removing duplicate data. Duplicate data commonly occurs when you combine multiple datasets, scrape data online, or receive it from third-party sources.
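To make Step 1 concrete, here’s a minimal sketch in Python, one of the languages mentioned later in this article. The `FoodLog` record, its fields, and the sample rows are hypothetical; the function simply drops meat-related observations and exact duplicates, keeping the first copy of each record:

```python
from dataclasses import dataclass

@dataclass(frozen=True)  # frozen makes records hashable, so they can go in a set
class FoodLog:
    # Hypothetical record layout for a food-diary dataset
    respondent_id: int
    food: str
    contains_meat: bool

def drop_unwanted(records):
    """Remove irrelevant (meat-related) observations and exact duplicates,
    keeping the first occurrence of each record in its original order."""
    seen = set()
    kept = []
    for record in records:
        if record.contains_meat:
            continue  # irrelevant to a study of vegetarian eating habits
        if record in seen:
            continue  # exact duplicate, e.g. from merging two sources
        seen.add(record)
        kept.append(record)
    return kept

raw = [
    FoodLog(1, "lentil soup", False),
    FoodLog(2, "beef burger", True),
    FoodLog(1, "lentil soup", False),  # duplicate row
    FoodLog(3, "tofu stir-fry", False),
]
clean = drop_unwanted(raw)
print(clean)
```

Note that this only catches exact duplicates. Rows such as ‘Lentil soup’ and ‘lentil soup’ won’t match until structural errors are fixed (Step 2).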

Step 2: Fix structural errors

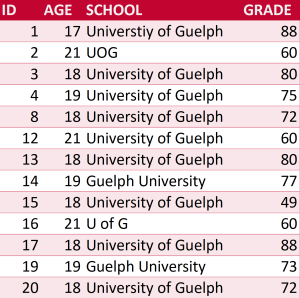

Structural errors usually emerge as a result of poor data housekeeping. They include things like typos and inconsistent capitalization, which often occur during manual data entry.

Let’s say you have a dataset covering the properties of different metals. ‘Iron’ (capitalized) and ‘iron’ (lowercase) may appear as separate classes (or categories). Ensuring that capitalization is consistent makes that data much cleaner and easier to use. You should also check for mislabeled categories.

For instance, ‘Iron’ and ‘Fe’ (iron’s chemical symbol) might be labeled as separate classes, even though they’re the same. Other things to look out for are the use of underscores, dashes, and other rogue punctuation!
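A minimal Python sketch of these fixes might look like the following. The synonym table and the choice of lowercase as the canonical form are assumptions for illustration; in practice you’d build the mapping from the categories you actually find in your data:

```python
import re

# Hypothetical synonym table: maps alternative labels to one canonical class
SYNONYMS = {"fe": "iron", "cu": "copper"}

def clean_label(label: str) -> str:
    """Normalize a category label: trim, lowercase, turn underscores/dashes
    into spaces, collapse repeated spaces, then map known synonyms."""
    text = label.strip().lower()
    text = re.sub(r"[_\-]+", " ", text)  # underscores and dashes -> space
    text = re.sub(r"\s+", " ", text)     # collapse runs of whitespace
    return SYNONYMS.get(text, text)

labels = ["Iron", "iron", "Fe", " IRON ", "stainless_steel", "Stainless-Steel"]
print(sorted({clean_label(x) for x in labels}))  # ['iron', 'stainless steel']
```

Six raw labels collapse into two genuine categories, which is exactly what you want before counting or grouping.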

Step 3: Standardize your data

Standardizing your data is closely related to fixing structural errors, but it takes it a step further. Correcting typos is important, but you also need to ensure that every cell type follows the same rules.

For instance, you should decide whether values should be all lowercase or all uppercase, and keep this consistent throughout your dataset. Standardizing also means ensuring that things like numerical data use the same unit of measurement.

As an example, combining miles and kilometers in the same dataset will cause problems. Even dates have different conventions, with the US putting the month before the day, and Europe putting the day before the month. Keep your eyes peeled; you’ll be surprised what slips through.
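Here’s one way to sketch unit and date standardization in Python. The function names are hypothetical, and the code assumes you know which date convention each source uses, because a string like ‘03/04/2024’ can’t be disambiguated on its own:

```python
from datetime import datetime

KM_PER_MILE = 1.609344  # exact, by definition of the international mile

def to_km(value: float, unit: str) -> float:
    """Convert a distance to kilometers; raise on units we don't recognize."""
    unit = unit.strip().lower()
    if unit in ("km", "kilometre", "kilometer", "kilometres", "kilometers"):
        return value
    if unit in ("mi", "mile", "miles"):
        return value * KM_PER_MILE
    raise ValueError(f"unknown unit: {unit!r}")

def to_iso_date(text: str, source_convention: str) -> str:
    """Parse a date in US (month first) or European (day first) order and
    return it in unambiguous ISO 8601 form (YYYY-MM-DD)."""
    formats = {"US": "%m/%d/%Y", "EU": "%d/%m/%Y"}
    return datetime.strptime(text, formats[source_convention]).date().isoformat()

print(round(to_km(10, "miles"), 3))     # 16.093
print(to_iso_date("03/04/2024", "US"))  # 2024-03-04
print(to_iso_date("03/04/2024", "EU"))  # 2024-04-03
```

Converting everything to ISO 8601 at the cleaning stage means later steps never have to guess the day and month order again.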

Step 4: Remove unwanted outliers

Outliers are data points that dramatically differ from others in the set. They can cause problems with certain types of data models and analysis.

For instance, while decision tree algorithms are generally accepted to be quite robust to outliers, outliers can easily skew a linear regression model. While outliers can affect the results of an analysis, you should always approach removing them with caution.

Only remove an outlier if you can prove that it is erroneous, e.g. if it is obviously due to incorrect data entry, or if it doesn’t match a comparison ‘gold standard’ dataset.
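One common way to flag (rather than automatically delete) candidate outliers is the interquartile range (IQR) rule, sometimes called Tukey’s fences. This is our choice of technique, not one prescribed above, and the 1.5 multiplier is a widely used convention rather than a fixed rule. The height data is hypothetical:

```python
import statistics

def flag_outliers_iqr(values, k=1.5):
    """Return indices of values outside [Q1 - k*IQR, Q3 + k*IQR].

    Values are only flagged, not deleted, so each one can be checked
    against the source before deciding whether it is an error."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]

heights_cm = [162, 170, 168, 175, 159, 181, 1720, 166]
print(flag_outliers_iqr(heights_cm))  # [6]
```

The function returns positions only, so you can inspect each flagged value (here, 1720 looks like a mistyped 172) before deciding whether to correct or remove it.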

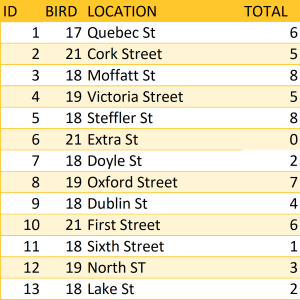

Step 5: Fix contradictory data errors

Contradictory (or cross-set) data errors are another common problem to look out for. A contradictory error occurs when a single, complete record contains values that are inconsistent or incompatible with one another.

An example could be a log of athlete racing times. If the column showing the total time spent running isn’t equal to the sum of the individual race times, you’ve got a cross-set error.

Another example might be a pupil’s grade score being associated with a field that only allows options for ‘pass’ and ‘fail’, or an employee’s taxes being greater than their total salary.
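Checks like these are easy to script. The sketch below uses hypothetical `AthleteLog` and `Payroll` record layouts and returns a description of each contradiction it finds, rather than silently fixing it:

```python
from dataclasses import dataclass
from typing import List

@dataclass
class AthleteLog:
    # Hypothetical layout: individual race times and a stored total, in seconds
    name: str
    race_times_s: List[float]
    total_time_s: float

@dataclass
class Payroll:
    # Hypothetical payroll record
    employee: str
    salary: float
    taxes: float

def athlete_errors(log: AthleteLog, tolerance: float = 1e-6) -> List[str]:
    """Flag a cross-set error when the stored total differs from the sum of races."""
    total = sum(log.race_times_s)
    # A small tolerance avoids false alarms from floating-point rounding
    if abs(total - log.total_time_s) > tolerance:
        return [f"{log.name}: stored total {log.total_time_s} != sum {total}"]
    return []

def payroll_errors(row: Payroll) -> List[str]:
    """Flag a record whose taxes are greater than the total salary."""
    if row.taxes > row.salary:
        return [f"{row.employee}: taxes {row.taxes} exceed salary {row.salary}"]
    return []

print(athlete_errors(AthleteLog("Ana", [610.0, 598.5, 605.2], 1813.7)))  # []
print(athlete_errors(AthleteLog("Ben", [640.0, 655.0], 1200.0)))
print(payroll_errors(Payroll("C. Diaz", 42000, 51000)))
```

Which value is wrong (the total or one of the parts) usually can’t be decided by code alone, so reporting the conflict is a sensible default.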

Step 6: Type conversion and syntax errors

Once you’ve tackled other inconsistencies, the content of your spreadsheet or dataset might look good to go.

However, you need to check that everything is in order behind the scenes, too. Type conversion means making sure each value is stored as the right data type. For example, numbers should be stored as numerical data rather than text, currency values in a currency (or decimal) format, text as text, dates as date objects, and so on.

In case you missed any part of Step 2, you should also remove syntax errors and stray white space (erroneous gaps before, in the middle of, or between words).
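A minimal sketch of type conversion and white-space cleanup in Python follows. The helper names are hypothetical, and `to_number` assumes commas are thousands separators (as in ‘1,250.50’), which won’t hold for data that uses a comma as the decimal mark:

```python
from datetime import date
from decimal import Decimal, InvalidOperation

def clean_text(value: str) -> str:
    """Strip leading/trailing white space and collapse internal runs of spaces."""
    return " ".join(value.split())

def to_number(value: str):
    """Convert text such as ' 1,250.50 ' to a Decimal; return None if it isn't a number."""
    try:
        return Decimal(clean_text(value).replace(",", ""))
    except InvalidOperation:
        return None

def to_date(value: str):
    """Convert an ISO-formatted string (YYYY-MM-DD) to a date object, or None."""
    try:
        return date.fromisoformat(clean_text(value))
    except ValueError:
        return None

row = {"customer": "  Jane   Smith ", "spend": " 1,250.50 ", "joined": "2023-07-14"}
typed = {
    "customer": clean_text(row["customer"]),
    "spend": to_number(row["spend"]),
    "joined": to_date(row["joined"]),
}
print(typed)
```

Using `Decimal` rather than `float` for currency avoids binary rounding surprises, such as 0.1 + 0.2 not equalling 0.3 exactly. Returning `None` for unparseable values hands them on to the missing-data step instead of crashing.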

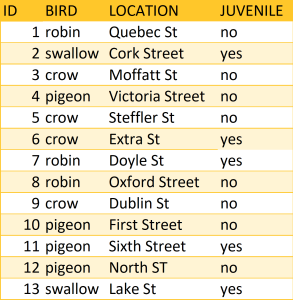

Step 7: Deal with missing data

When data is missing, what do you do? There are three common approaches to this problem.

The first is to remove the entries associated with the missing data. The second is to impute (or guess) the missing data, based on other, similar data. In most cases, however, both of these options negatively impact your dataset in other ways. Removing data often means losing other important information. Guessing data might reinforce existing patterns, which could be wrong.

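The third option (and often the best one) is to flag the data as missing. To do this, ensure that empty fields have the same value, e.g. ‘missing’ for text fields. For numerical fields, be careful: a placeholder such as ‘0’ can be confused with a genuine zero, so it’s safer to leave the value empty and record the gap in a separate indicator column. Then, when you carry out your analysis, you’ll at least be taking into account that data is missing, which in itself can be informative.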
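Here’s a minimal sketch of flagging in Python, assuming records are stored as dictionaries. The set of placeholder strings treated as ‘missing’ is an assumption you’d adapt to your own data; the key idea is the extra indicator column:

```python
# Assumption: these placeholders all mean "no value" in this particular dataset
MISSING_TOKENS = {"", "na", "n/a", "null", "none", "-"}

def flag_missing(rows, field):
    """Replace blank/placeholder values in `field` with None and add a
    boolean `<field>_missing` column so the gap stays visible in analysis."""
    flagged = []
    for row in rows:
        raw = row.get(field)
        is_missing = raw is None or str(raw).strip().lower() in MISSING_TOKENS
        new_row = dict(row)  # copy, so the raw data is left untouched
        new_row[field] = None if is_missing else raw
        new_row[f"{field}_missing"] = is_missing
        flagged.append(new_row)
    return flagged

survey = [
    {"id": 1, "income": "52000"},
    {"id": 2, "income": ""},
    {"id": 3, "income": "N/A"},
    {"id": 4, "income": "0"},  # a genuine zero stays distinct from "missing"
]
result = flag_missing(survey, "income")
print([r["income_missing"] for r in result])  # [False, True, True, False]
```

Because the indicator is a normal column, you can later check whether missingness is associated with other variables, which is often informative in its own right.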

Step 8: Validate your dataset

Once you’ve cleaned your dataset, the final step is to validate it. Validating data means checking that the process of making corrections, deduping, standardizing (and so on) is complete.

This often involves using scripts that check whether or not the dataset agrees with validation rules (or ‘check routines’) that you have predefined. You can also carry out validation against existing, ‘gold standard’ datasets.
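As a rough illustration, a validation script can express each rule as a named check and report every failure. The rules and field names below are hypothetical examples, not a standard set:

```python
from typing import Callable, Dict, List

# Hypothetical validation rules ("check routines"): each maps a name to a
# predicate that must be True for every record.
Rule = Callable[[dict], bool]
RULES: Dict[str, Rule] = {
    "id present": lambda r: r.get("id") is not None,
    "age in 0-120": lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120,
    "country code is 2 letters": lambda r: (
        isinstance(r.get("country"), str)
        and len(r["country"]) == 2
        and r["country"].isalpha()
        and r["country"].isupper()
    ),
}

def validate(records: List[dict], rules: Dict[str, Rule] = RULES) -> List[str]:
    """Return a human-readable list of rule violations (empty means valid)."""
    problems = []
    for index, record in enumerate(records):
        for name, check in rules.items():
            if not check(record):
                problems.append(f"row {index}: failed '{name}'")
    return problems

data = [
    {"id": 1, "age": 34, "country": "NZ"},
    {"id": 2, "age": 340, "country": "uk"},
]
for problem in validate(data):
    print(problem)
```

An empty result is your signal that the dataset passes every predefined check; anything else tells you which rows to revisit.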

This all sounds a bit technical, but all you really need to know at this stage is that validation means checking the data is ready for analysis. If there are still errors (which there usually will be), you’ll need to go back and fix them. There’s a reason why data analysts spend so much of their time cleaning data!

4. Data cleaning tools

Now we’ve covered the steps of the data cleaning process, it’s clear that this is not something you’ll want to do entirely by hand. So, what tools might help? The answer depends on factors like the data you’re working with and the systems you’re using. But here are some baseline tools to get to grips with.

Microsoft Excel

MS Excel has been a staple of computing since its launch in 1985. Love it or loathe it, it remains a popular data-cleaning tool to this day. Excel comes with many inbuilt functions to automate the data cleaning process, from deduping to replacing numbers and text, shaping columns and rows, or combining data from multiple cells. It’s also relatively easy to learn, making it the first port of call for most new data analysts.

Programming languages

Often, data cleaning is carried out using scripts that automate the process. This is essentially what Excel can do, using pre-existing functions. However, carrying out specific batch processing (running tasks without end-user interaction) on large, complex datasets often means writing scripts yourself.

This is usually done with languages like Python, Ruby, SQL, or, if you’re a real coding whizz, R (which is more complex, but also more versatile). While more experienced data analysts may code these scripts from scratch, many ready-made libraries exist. Python, in particular, has a tonne of libraries that can speed up data cleaning for you, such as pandas and NumPy.

Visualizations

Using data visualizations can be a great way of spotting errors in your dataset. For instance, a bar plot is excellent for visualizing unique values and might help you spot a category that has been labeled in multiple different ways (like our earlier example of ‘Iron’ and ‘Fe’). Likewise, scatter graphs can help spot outliers so that you can investigate them more closely (and remove them if needed).
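If you don’t have a plotting library to hand, a quick frequency count gives you much the same signal as a bar plot of unique values. This sketch prints a simple text bar chart using only Python’s standard library; the metal labels are made-up sample data:

```python
from collections import Counter

def text_bar_chart(values, width=30):
    """Print each distinct value with its count and a proportional bar, so
    variant spellings of the same category stand out."""
    counts = Counter(values)
    top = max(counts.values())
    for label, count in counts.most_common():
        bar = "#" * max(1, round(width * count / top))
        print(f"{label!r:>10} {count:>4} {bar}")
    return counts

metals = ["Iron"] * 12 + ["iron"] * 3 + ["Fe"] * 2 + ["Copper"] * 8
counts = text_bar_chart(metals)
```

Seeing ‘Iron’, ‘iron’, and ‘Fe’ as three separate bars is a cue to go back and fix those structural errors.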

Proprietary software

Many companies are cashing in on the data analytics boom with proprietary software. Much of this software is aimed at making data cleaning more straightforward for non-data-savvy users. Since there are tonnes of applications out there (many of which are tailored to different industries and tasks), we won’t list them here. But we encourage you to go and see what’s available. To get you started, play around with a free, open-source tool such as OpenRefine. Trifacta is another popular option, although it is commercial software.

You’ll find a more thorough comparison of some of the best data cleaning tools in this guide.

Final thoughts

Data cleaning is probably the most important part of the data analytics process. Good data hygiene isn’t just about data analytics, though; it’s good practice to maintain and regularly update your data anyway. Clean data is a core tenet of data analytics and the field of data science more generally.

In this post, we’ve learned that:

- Clean data is hugely important for data analytics: Using dirty data will lead to flawed insights. As the saying goes: ‘Garbage in, garbage out.’

- Data cleaning is time-consuming: With great importance comes great time investment. Data analysts are often estimated to spend anywhere from 60-80% of their time cleaning data.

- Data cleaning is a complex process: Data cleaning means removing unwanted observations and outliers, fixing structural errors, standardizing, dealing with missing information, and validating your results. This is not a quick task, and it’s rarely one you can do entirely by hand!

- There are tools out there to help you: Fear not, tools like MS Excel and programming languages like Python are there to help you clean your data. There are also many proprietary software tools available.

Why not try your hand at data analytics with our free, five-day data analytics short course? Alternatively, read the following to find out more:

- What are the different types of data analysis?

- Quantitative vs. qualitative data: What’s the difference?

- The 7 most useful data analytics methods and techniques


What Is Data Cleaning in the Context of Data Science?


Data cleaning, also known as data cleansing or scrubbing, is a crucial process in data science. It involves identifying and correcting errors, inconsistencies, and inaccuracies in datasets.

It aims to improve data quality, ensuring it is accurate, reliable, and suitable for analysis.

Understanding the concept of data cleaning

Data cleaning is an integral part of the data science workflow.

At its core, it helps to ensure that the data used for analysis is reliable and appropriate for the intended purpose.

The role of data cleaning in data science

Data cleaning plays a pivotal role in data science as it directly impacts the accuracy of the analysis and the insights derived from it.

By eliminating errors and inconsistencies, it helps to ensure that the conclusions drawn from the data are valid and reliable.

Key terms and definitions in data cleaning

- Data error: any mistake, inaccuracy, or inconsistency in the dataset.

- Data inconsistency: a conflict between different parts of the dataset.

- Data anomaly: an observation that significantly deviates from the expected behavior of the dataset.

By being aware of the types of errors and inconsistencies that can occur, data scientists can develop strategies to detect and rectify them, ensuring the reliability and integrity of the data.

The importance of data cleaning in data science

Data cleaning involves removing duplicate entries, handling missing values, standardizing formats, and resolving discrepancies.

This meticulous process is crucial for maintaining data integrity and ensuring reliable results.

Ensuring accuracy in data analysis

Data analysis is heavily reliant on the quality of the data being used.

If errors or inconsistencies are present in the dataset, it can lead to incorrect conclusions and misleading insights.

By detecting and addressing outliers, data cleaning ensures that the analysis is not skewed by these unusual data points, resulting in more accurate and meaningful insights.

Enhancing the quality of data

High-quality data is crucial for any data-driven project.

Eliminating errors and inconsistencies helps enhance the dataset’s quality, making it more suitable for analysis.

Clean data can lead to more accurate models, improved decision-making, and better overall outcomes.

Data cleaning also involves transforming all dates into a consistent format, which ensures uniformity and eases analysis, and handling the missing values that are common in real-world datasets.

The process

Identifying and removing errors

The first step is to identify and remove errors from the dataset.

Dealing with missing or incomplete data

Accurate data analysis depends on having complete data.

Data cleaning involves strategies to handle this issue, such as imputation techniques or excluding incomplete records.
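As one simple example of an imputation technique, the sketch below fills gaps with the median of the observed values and returns a flag for each imputed entry. Median imputation is our illustrative choice, not a recommendation from the text: it gives every missing entry the same value, which can understate variability and introduce exactly the kind of bias this section warns about:

```python
import statistics

def impute_median(values):
    """Fill None entries with the median of the observed values and return
    (filled_values, imputed_flags) so imputed entries remain traceable."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute: no observed values")
    fill = statistics.median(observed)
    filled = [fill if v is None else v for v in values]
    flags = [v is None for v in values]
    return filled, flags

ages = [34, None, 29, 41, None, 38]
filled, flags = impute_median(ages)
print(filled)  # [34, 36.0, 29, 41, 36.0, 38]
```

Keeping the flags alongside the filled values lets you rerun an analysis with and without the imputed records to see how much they matter.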

The goal is to ensure the dataset is as complete as possible without introducing bias or inaccuracies.

Tools and techniques for data cleaning

Popular software

Several software options can assist with data cleaning, including open-source solutions like OpenRefine and commercial software such as Trifacta or SAS Data Integration Studio.

These tools provide features like data profiling, string manipulation, and error detection, simplifying the process.

Manual vs automated data cleaning

The process of cleaning data can be performed manually or through automated processes.

Manual cleaning involves human intervention to identify and correct errors, while automated cleaning utilizes algorithms and scripts to automate the process.

The choice between manual and automated data cleaning depends on factors such as the complexity of the data and the available resources.

Challenges and solutions in cleaning data

Common obstacles in the process

One of the main challenges in cleaning data is dealing with large and complex datasets.

The sheer volume of data can make it difficult to identify errors or inconsistencies.

Additionally, data from multiple sources may have different formats or structures, requiring careful integration and transformation.

Best practices for effective data cleaning

To overcome these challenges, data scientists should follow best practices when cleaning data.

Some tips include:

- Document the process to keep track of changes made to the dataset.

- Perform exploratory data analysis to gain insights into the dataset before cleaning it.

- Use validation techniques to ensure data accuracy after the cleaning process.

- Regularly review and update data cleaning procedures as new issues arise.

Cleaning data is an essential step in the data science workflow that should not be overlooked.

By carefully cleaning and preparing the data, data scientists can ensure the accuracy and reliability of their analyses, leading to better insights and decisions.

Embracing data cleaning as an integral part of the data science process enables organizations to unlock the full potential of their data and derive meaningful and actionable information.

Considering embarking on a data science journey?

By choosing the Institute of Data as your learning partner, you’ll be equipped with the skills needed in this highly sought-after field of tech.

Want to learn more? Contact our local team for a free career consultation today.


© Institute of Data. All rights reserved.


Data cleaning

Data cleaning involves the detection and removal (or correction) of errors and inconsistencies in a data set or database due to data corruption or inaccurate entry.

Incomplete, inaccurate or irrelevant data is identified and then either replaced, modified or deleted.

Incorrect or inconsistent data can create a number of problems that lead to false conclusions. Therefore, data cleaning can be an important element in some data analysis situations. However, data cleaning is not without risks and problems, including the loss of important information or valid data.

There is a large variety of tools available to support data cleaning. Additionally, many statistical programs have data validation built in, which can pick up some errors automatically, for example, invalid variable codes.

Advice for using this method

- Back up your data before starting your data cleaning process.

- Create a list of all variables, variable labels and variable codes.

- Decide which variables are crucial to the analysis and must have values for the responses to be complete. Often, survey responses will come back with missing data for certain questions and variables. If this appears on a crucial variable, the data from that survey will not be useful.

- A variable such as gender will, in most cases, have a fixed set of possible codes (for example, 1 = male, 2 = female, 0 = missing), so in this case a code of 12 would be an error

- Other errors might include missing data values

- A frequency test can help to identify errors

- Outliers can hide or create statistical significance and are important to identify

- Creating a bar graph or similar is one way to quickly identify outliers

- Cross-tabulating pairs of variables is one way of rooting out inconsistencies

- Removing responses with missing or incorrect values

- Correcting missing or incorrect data if the correct value is known

- Going back to the data source and filling in the missing data variables

- Setting values to an average or other statistical value
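Two of the checks above, the frequency test and cross-tabulation, can be sketched in a few lines of Python. The coding scheme follows the gender example above; the response records and the ‘pregnant’ variable are hypothetical, chosen because a male respondent recorded as pregnant is a classic inconsistency:

```python
from collections import Counter

VALID_GENDER_CODES = {0, 1, 2}  # 0 = missing, 1 = male, 2 = female (example coding)

responses = [
    {"id": 101, "gender": 1, "pregnant": 0},
    {"id": 102, "gender": 2, "pregnant": 1},
    {"id": 103, "gender": 12, "pregnant": 0},  # likely a keying error
    {"id": 104, "gender": 1, "pregnant": 1},   # inconsistent combination
]

# Frequency test: count every code that appears, then list the invalid ones
freq = Counter(r["gender"] for r in responses)
invalid = sorted(code for code in freq if code not in VALID_GENDER_CODES)
print("gender frequencies:", dict(sorted(freq.items())))
print("invalid codes:", invalid)  # invalid codes: [12]

# Cross-tabulation: counts for each (gender, pregnant) pair
crosstab = Counter((r["gender"], r["pregnant"]) for r in responses)
suspicious = [r["id"] for r in responses if r["gender"] == 1 and r["pregnant"] == 1]
print("cross-tab:", dict(crosstab))
print("inconsistent rows:", suspicious)  # inconsistent rows: [104]
```

Once flagged, each record can be handled with one of the options listed above, such as going back to the source or correcting it when the true value is known.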

This blog post from the American Evaluation Association provides an overview of Google Refine (since renamed OpenRefine), a downloadable desktop application that can be used to calculate frequencies, multi-tabulate data from large datasets, and clean up your data.

This paper from the University of Leipzig sets out to explain the main problems that data cleaning is able to correct and then provides an overview of the solutions that are available to implement the cleansing of data.

This paper from the Robert Wood Johnson Medical School outlines a step-by-step process for verifying that data values are correct or, at the very least, conform to a set of rules through the use of a data cleaning process.

Written by Associate Professor of Evaluation, Statistics, and Measurement at the University of Tennessee, Jenifer Morrow, the Brief Introduction to the 12 steps to data cleaning is a slide presentation that provides a concise overview to the importance

Rahm, E., & Hai Do, H. (n.d.). Data cleaning: Problems and current approaches. University of Leipzig, Germany. Retrieved from http://wwwiti.cs.uni-magdeburg.de/iti_db/lehre/dw/paper/data_cleaning.pdf

Wikipedia. (2012). Data cleansing. Retrieved from http://en.wikipedia.org/wiki/Data_cleansing


© 2022 BetterEvaluation. All rights reserved.


Cleaning Data: The Basics

What Is Data Cleaning?

At its most basic level, data cleaning is the process of fixing or removing data that’s inaccurate, duplicated, or outside the scope of your research question.

Some errors might be hard to avoid. You may have made a mistake during data entry, or you might have a corrupt file. You may find the format is wrong for combining multiple data sets or different sources. Or you may have metadata that’s mislabeled.

Before beginning to clean your data, it’s a good idea to keep a copy of the raw data set. If you make an error during the cleaning stage, you can always go back to the original, and you won’t lose important information.

In working with data, remember the three “C”s:

- Complete: Avoid missing data. You can use default records as stand-ins for incomplete data sets. Or you can recode data using a different format or fill in missing values using a statistical tool. Be sure to use metadata that’s appropriate for the data type and topic.

- Consistent: Ensure that the data collected at the beginning of the study matches data from the end of the study (in both semantics and scope).

- Correct: Look for outliers and duplicates. Duplicate records can lead to incorrect calculations and impact statistical results, so be sure to delete them. You can identify outliers using statistics (e.g., z-scores or box plots). Before removing any outliers, consider the significance of these data and how removal could impact downstream analytics. Sometimes outliers are deceptive, but sometimes they offer insightful information.

Following these three Cs will help you when it comes time to aggregate data and will make filtering, selecting, and calculating more efficient.
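For the z-score approach mentioned above, a minimal sketch might look like this. The threshold of 3 is a common convention rather than a rule, and the readings are hypothetical:

```python
import statistics

def zscore_outliers(values, threshold=3.0):
    """Return (index, value, z) for values whose |z-score| exceeds threshold."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation
    if sd == 0:
        return []  # all values identical: nothing can be an outlier
    flagged = []
    for i, v in enumerate(values):
        z = (v - mean) / sd
        if abs(z) > threshold:
            flagged.append((i, v, round(z, 2)))
    return flagged

# Hypothetical lab readings: 16 plausible values and one suspicious entry
readings = [4.8, 5.1, 5.0, 4.9, 5.2, 5.0, 4.7, 5.3,
            5.1, 4.9, 5.0, 5.2, 4.8, 5.1, 4.9, 5.0, 50.0]
print(zscore_outliers(readings))
```

Two caveats: a single extreme value inflates the standard deviation and can partly mask itself, and with 10 or fewer data points no value can exceed a |z| of 3 at all (the maximum possible is (n - 1)/√n). Box plots, which rely on quartiles, are less sensitive to this.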

Why Do We Need Clean Data for Cancer Research?

Accurate data supports sound decision making, helping you address your research question and allowing you to avoid misleading findings and costly mistakes.

What Do I Need to Know?

Quality data takes effort. Below are some typical areas that can cause problems:

- Mismatched or incomplete metadata. One of the most common problems occurs when researchers assign the wrong code. You may also find that codes change over time with the release of new versions. NCI Thesaurus can help you assign the correct codes. For more on the importance of semantics in data science, see the blogs “Semantics Primer” and “Semantics Series: A Deep Dive Into Common Data Elements.”

- Inconsistent formatting. Review your formatting and carefully watch for data entry errors. Be sure that the entries exactly match your research, as many errors can occur during data entry. Check your columns to make certain you’ve used the same descriptors consistently. You can drop any columns that aren’t immediately relevant to your research question, and you can split columns as needed (depending on the software program that you’re using). Be sure to keep one entry per cell. You can flag any entries that need more attention (such as checking a patient’s medication history or confirming a date). You can always go back to those problem areas and resolve them when you have more information.

- Watch for bias. Data bias is another area that can result in misleading conclusions. Personal or societal biases can creep into research, even without your knowledge. It’s difficult to de-bias data during data cleaning. It’s better to think about the research questions you’ll ask and look for ways to offset bias before you collect the data. For example, you might want to recruit a range of study subjects by retooling your informed consent forms and broadening your outreach. You also might need to make adjustments to mitigate algorithm and data collection biases.

Repository Matters

You can maximize your data’s discoverability and re-use by uploading your files to a general or specialty data repository. Repositories serve as archives for data. They may have different data requirements. Some generalist collections allow you to upload a variety of formats and data types whereas specialty collections have very specific guidelines.

After you submit your data to a registry, the repository staff will do the following:

- Check your data for errors, inconsistencies, or missing information. Quality control includes regular checks for data completeness, accuracy, and adherence to coding standards.

- Validate your data. This may include registrars cross-checking data with multiple sources and/or verifying specific details with healthcare providers.

- Ensure your data are correctly linked. Data may be linked with other databases, such as vital records, to gather additional information and ensure comprehensive data capture for each case.

- Remove certain patient information. Personal identifiers, which link data to a specific person, are typically removed from the data to protect patient privacy. This is done before it is sent to a repository for broader distribution.

- Check that your data fits the repository’s system. Registries follow standardized coding systems and reporting guidelines to ensure consistency across different regions and over time, allowing for meaningful comparisons and analysis.
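Repository staff run their own quality control, but you can run similar checks before you submit. The sketch below assumes records are dictionaries. The required fields and the small set of allowed site codes are hypothetical placeholders for whatever coding standard your target repository uses. It reports three kinds of problem: missing required values, codes outside the allowed set, and duplicated case IDs.

```python
from collections import Counter

# Hypothetical required fields and an illustrative set of allowed site codes.
REQUIRED_FIELDS = ("case_id", "diagnosis_date", "site_code")
ALLOWED_SITE_CODES = {"C34", "C50", "C61"}


def quality_report(records):
    """Return (row index, problem) pairs for missing, invalid, or duplicated data."""
    id_counts = Counter(r.get("case_id") for r in records)
    issues = []
    for i, record in enumerate(records):
        # Completeness: every required field must hold a non-empty value.
        missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
        if missing:
            issues.append((i, "missing: " + ", ".join(missing)))
        # Adherence to coding standards: codes must come from the allowed set.
        code = record.get("site_code")
        if code not in (None, "") and code not in ALLOWED_SITE_CODES:
            issues.append((i, f"unknown site_code {code!r}"))
        # Duplicates: the same case ID should not appear twice.
        case_id = record.get("case_id")
        if case_id and id_counts[case_id] > 1:
            issues.append((i, f"duplicate case_id {case_id!r}"))
    return issues


records = [
    {"case_id": "A1", "diagnosis_date": "2021-03-02", "site_code": "C50"},
    {"case_id": "A2", "diagnosis_date": "", "site_code": "X99"},
    {"case_id": "A1", "diagnosis_date": "2021-03-02", "site_code": "C50"},
]
for row, problem in quality_report(records):
    print(row, problem)
```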

Setting up your data correctly from the start can help you avoid delays in formatting when it comes time to deposit your data, especially if your research is NIH-funded. NIH’s Data Management and Sharing Policy requires making effective data management and sharing practices a routine part of scientific discovery.

Privacy is Vital

If you’re working with genetic data, imaging data, or other data that includes personal information, you must take steps to ensure patient privacy. The Health Insurance Portability and Accountability Act (HIPAA) generally requires removing patients’ personal identifiers before the data are shared.
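A minimal de-identification sketch, assuming records are dictionaries. The identifier field names (including `mrn` for a medical record number) are hypothetical. They are not a complete list of the identifiers HIPAA covers, so check the rules that apply to your data. The sketch drops direct identifiers and gives each record a new study ID. It returns the study-ID-to-MRN link separately, so that link can be stored privately instead of being shared with the data.

```python
import itertools

# Hypothetical direct-identifier fields; NOT a complete list of what HIPAA covers.
IDENTIFIER_FIELDS = {"name", "address", "phone", "email", "mrn", "date_of_birth"}


def deidentify(records):
    """Return (shareable records, private linkage key from study ID to MRN)."""
    numbers = itertools.count(1)
    shareable, linkage = [], {}
    for record in records:
        study_id = f"S{next(numbers):04d}"
        # Keep the link back to the patient in a separate, private mapping.
        linkage[study_id] = record.get("mrn")
        # Copy every field except the direct identifiers.
        clean = {k: v for k, v in record.items() if k not in IDENTIFIER_FIELDS}
        clean["study_id"] = study_id
        shareable.append(clean)
    return shareable, linkage


shared, key = deidentify([{"name": "Ann Lee", "mrn": "123", "tumor_size_mm": 14}])
print(shared)
```

Dropping named columns is only a first step. Dates, rare diagnoses, or free-text notes can still identify a person and need separate review.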

The Informatics Technology for Cancer Research (ITCR) Program has a course, “Ethical Data Handling for Cancer Research,” that you can take to better understand important ethical principles of data management from a privacy, security, usability, and discoverability perspective.

Documentation is Key

Tracking how you cleaned your data can help save time in the future, reminding you of the types of errors you encountered and the approaches you used to fix those errors. It’s also good to document how you managed outliers.
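One lightweight way to track cleaning steps is to append a structured entry to a log every time you change the data. In this sketch, the function name, log fields, and weight limits are hypothetical. Removing out-of-range values stands in for whichever step you actually take, and whether a value should be removed at all remains a judgment call. The point here is that the rule and its effect are written down.

```python
import json
from datetime import datetime, timezone


def remove_out_of_range(values, low, high, column, log):
    """Drop values outside [low, high] and record what was done and why."""
    kept = [v for v in values if low <= v <= high]
    log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "column": column,
        "action": "removed out-of-range values",
        "rule": f"{low} <= value <= {high}",
        "n_removed": len(values) - len(kept),
    })
    return kept


cleaning_log = []
weights = remove_out_of_range([70.2, 68.5, 702.0, 81.0], 20, 300, "weight_kg", cleaning_log)
# Save the log alongside the cleaned data so the steps can be reviewed and repeated.
print(json.dumps(cleaning_log, indent=2))
```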

If you use informatics tools in your research but have not had training in reproducibility tools and methods, take ITCR’s “Intro to Reproducibility in Cancer Informatics” course. You’ll gain skills in writing durable code, making a project open source with GitHub, analyzing documents, and more.

After you’ve completed the introductory course, take “Advanced Reproducibility in Cancer Informatics,” which will teach you more complex GitHub functions, how to engage in code review, how to modify a Docker image, and more.

Reminders to Keep in Mind

- Plan your data collection efforts well in advance of starting your study, and be sure to keep careful documentation. Doing this will minimize the time-consuming and tedious task of cleaning data.

- See the article “Generating and Collecting Data: The Basics” for more tips.

- Technology also may be able to help lighten your data-cleaning workload. Traditionally, data cleaning has been an arduous task that relied heavily on human decisions. This may be changing, however, as technology helps make some of these decisions. For example, tools, both commercial and open source, are now available that can remove unnecessary columns, filter results, and validate data sets.

NCI Data Cleaning Resources and Initiatives

Now that you have a sense of the basics, use the following resources to discover more about the topic and understand NCI’s investment in this stage of the data science lifecycle.

- Semantics Series: A Deep Dive Into Common Data Elements: Learn how using proper descriptors can help you prepare your data for analysis.

- NCI’s Surveillance, Epidemiology, and End Results (SEER) Program has a training site with modules to help with collecting and recording cancer data. SEER also offers resources with links to reference materials and organizations that can help with coding and registering cancer cases.

- The NCI Cancer Research Data Commons offers a wide range of support to researchers, including tutorials, user guides, and office hours, to help them use this cloud-based collection of data sets. The data sets are accessible through its data commons and cloud resources, which also make thousands of analytical tools available.

Publications

- Interoperable Slide Microscopy Viewer and Annotation Tool for Imaging Data Science and Computational Pathology. Nature Communications, 2023. | Learn about Slim, an open-source, web-based slide microscopy viewer that helps facilitate interoperability with a range of existing medical imaging systems.

- Effects of Slide Storage on Detection of Molecular Markers by IHC and FISH in Endometrial Cancer Tissues From a Clinical Trial: An NRG Oncology/GOG Pilot Study. Applied Immunohistochemistry & Molecular Morphology, 2022. | See a study showing that, although it’s feasible to use aged, stored slides to identify cancer biomarkers, the results may modestly underestimate the true values in endometrial cancer.

- Uniform Genomic Data Analysis in the NCI Genomic Data Commons. Nature Communications, 2021. | Learn about the pipelines and workflows used to process and harmonize data in NCI’s Genomic Data Commons.

- Robustness Study of Noisy Annotation in Deep Learning Based Medical Image Segmentation. Physics in Medicine and Biology, 2020. | See a study showing that a deep network trained with noisy labels performs worse than one trained with reference annotations.

- Screen Technical Noise in Single Cell RNA Sequencing Data. Genomics, 2020. | Learn about a new data cleaning pipeline for single-cell RNA-seq data.

- Building Portable and Reproducible Cancer Informatics Workflows: An RNA Sequencing Case Study. Methods in Molecular Biology, 2019. | See a case study using different tools in NCI’s Cancer Genomics Cloud to analyze RNA sequencing data.

- QuagmiR: A Cloud-based Application for isomiR Big Data Analytics. Bioinformatics, 2019. | Learn about QuagmiR, a cloud-based tool for analyzing microRNA isoforms from next-generation sequencing data.

- RNA-seq from Archival FFPE Breast Cancer Samples: Molecular Pathway Fidelity and Novel Discovery. BMC Medical Genomics, 2019. | See information on a formalin-fixed, paraffin-embedded (FFPE) RNA sequencing pipeline for breast cancer research.

- Scalable Open Science Approach for Mutation Calling of Tumor Exomes Using Multiple Genomic Pipelines. Cell Systems, 2018. | Learn about the “Multi-Center Mutation Calling in Multiple Cancers” project and how this comprehensive encyclopedia of somatic mutations enables cross-tumor-type analyses using The Cancer Genome Atlas data sets.

Survey data cleaning: how to get the most accurate survey data.

Before you analyse your survey results, data cleaning is a must-do. It helps you get the best quality data possible, so you can make more accurate decisions. Here’s how to get your data sparkling clean.

First things first – What is data cleaning?

Cleaning data means getting rid of any anomalous, incorrectly filled or otherwise “odd” results that could skew your analysis.

Some examples include:

- Straight-lining, where the respondent has selected the same response (often the first option) for every question, regardless of what is being asked.

- Christmas-trees, where answers have been selected to create a visual pattern or picture, resembling a Christmas tree or some other deliberate design, rather than in response to the survey questions.

Not all problematic results are deliberate – you may also find duplicate responses caused by people accidentally filling in a survey twice or failing to realise that their submission had completed.

When you clean your survey data, you’re eliminating these ‘noisy’ responses that don’t add value and can confuse your end results. Think of it like weeding your garden to give your best plants more room to grow.
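Straight-lining and duplicate submissions can both be flagged with simple rules. This sketch makes three assumptions:

- Grid answers are a list of numeric ratings.
- The minimum grid length of five items is arbitrary.
- Duplicates are matched on a hypothetical `email` field, and responses without one are skipped.

Flagged responses are candidates for review rather than automatic deletions, since a respondent with strong views can legitimately give the same answer throughout. Patterned answers such as Christmas trees are harder to capture with a single rule and are not covered here.

```python
def is_straight_liner(answers, min_items=5):
    """True if a respondent gave the identical answer to every item in a rating grid."""
    return len(answers) >= min_items and len(set(answers)) == 1


def find_duplicates(responses, key_fields=("email",)):
    """Return IDs of responses whose key fields repeat an earlier response."""
    seen, duplicates = set(), []
    for response in responses:
        key = tuple(response.get(field) for field in key_fields)
        if None in key:
            continue  # cannot match a response that lacks the key fields
        if key in seen:
            duplicates.append(response["response_id"])
        else:
            seen.add(key)
    return duplicates


print(is_straight_liner([4, 4, 4, 4, 4]))
print(find_duplicates([
    {"response_id": 1, "email": "a@example.org"},
    {"response_id": 2, "email": "b@example.org"},
    {"response_id": 3, "email": "a@example.org"},
]))
```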

How to find the ‘dirt’ when data cleaning

There are a few methods experienced survey designers use to spot the results that should be weeded out. These can involve looking at the survey’s metadata or visualising data to uncover patterns.

Find the fastest respondents

Time data can show where respondents have whizzed through a survey selecting answers without properly reading and considering the questions. Setting a ‘speed limit’ for your responses can help eliminate thoughtless or random answers.
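One way to set a speed limit is relative to the median completion time. The cut-off of half the median used below is an assumption for illustration, not a standard. Choose a limit that suits the length and difficulty of your survey. Completion times are assumed to be in seconds, keyed by a respondent ID.

```python
from statistics import median


def flag_speeders(durations_sec, fraction=0.5):
    """Return IDs of respondents who finished faster than fraction x the median time."""
    limit = fraction * median(durations_sec.values())
    return sorted(rid for rid, seconds in durations_sec.items() if seconds < limit)


times = {"r1": 600, "r2": 540, "r3": 90, "r4": 660, "r5": 620}
print(flag_speeders(times))
```

A relative limit adapts to each survey, whereas a fixed number of seconds would need to be re-tuned whenever questions are added or removed.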

Turn numeric data into graphics

For issues like Christmas tree or straight-lining respondents, it can be easier to spot problems if your data appears as a chart or graph rather than a table of numbers.

Review open-ended questions

Where your survey design requires participants to answer in their own words, you can spot problem data by noting where the open fields have been filled in with nonsense text. This could indicate that the survey was completed by a bot rather than a human, or that the respondent was not engaged with the questions.
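Nonsense text can be pre-screened with a few crude heuristics before a person reads the flagged answers. The rules below are assumptions chosen for illustration, not an established detector:

- Too few letters.
- No vowels.
- Long runs of one character.
- Common keyboard runs, from a hypothetical list.

They work for English only and will flag legitimate short answers such as "No", so treat the output as a review queue.

```python
import re

# Hypothetical list of keyboard runs that often appear in "mashed" answers.
KEYBOARD_RUNS = ("qwert", "asdf", "zxcv", "hjkl")


def looks_like_gibberish(text, min_letters=3):
    """Heuristic flag for open-ended answers that may be nonsense; review by hand."""
    letters = re.sub(r"[^a-z]", "", text.lower())
    if len(letters) < min_letters:
        return True  # empty or near-empty answer
    if not re.search(r"[aeiouy]", letters):
        return True  # no vowels at all, e.g. "sdfghjk"
    if re.search(r"(.)\1{4,}", letters):
        return True  # one character repeated five or more times, e.g. "aaaaaa"
    return any(run in letters for run in KEYBOARD_RUNS)


for answer in ["The checkout page was slow to load.", "sdfghjk", "asdfasdf"]:
    print(answer, looks_like_gibberish(answer))
```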

Edge cases to consider when cleaning data

Sometimes deciding whether to exclude certain survey responses from your final data set isn’t clear-cut. In these situations, you’ll need to make a choice depending on the volume of data you have and your overall goals for the survey.

Outliers

These are answers that are numerically miles away from the rest of your data, or that seem implausible from a common-sense point of view, such as selecting a number above 16 for “how many hours a day do you spend watching TV”. An outlier could be the result of a user error or a misunderstanding of the question. In some cases, it could also be an unusual but accurate reply.
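Outliers can be flagged with two complementary checks:

- A common-sense limit, taken from the TV example above.
- A statistical rule, using Tukey’s fences (values more than 1.5 × IQR beyond the quartiles).

Tukey’s fences are a widely used convention, not something this guide prescribes. Both thresholds are parameters you should set for your own questions. The function returns row indices for review, since an outlier may still be a genuine answer. It requires Python 3.8 or later for `statistics.quantiles`.

```python
from statistics import quantiles


def flag_outliers(values, plausible_max=16, k=1.5):
    """Return indices of values above a plausibility limit or outside Tukey's fences."""
    q1, _, q3 = quantiles(values, n=4)
    spread = k * (q3 - q1)
    low, high = q1 - spread, q3 + spread
    return [
        i for i, v in enumerate(values)
        if v > plausible_max or not (low <= v <= high)
    ]


tv_hours = [2, 3, 1, 4, 2, 3, 20, 2]
print(flag_outliers(tv_hours))
```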

Self-contradictory answers

If a respondent’s answers seem inconsistent or don’t add up to a coherent picture, it could mean they’ve answered without reading carefully. For example, in one question they might tell you they’re vegetarian and in another tick ‘bacon’ as a favorite food.
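Consistency rules like the vegetarian example can be written as small checks that run over every response. The field names (`diet`, `favorite_foods`) and the set of meat items are hypothetical. A real survey would need one rule per pair of related questions.

```python
# Hypothetical answer options that would contradict a vegetarian diet.
MEAT_ITEMS = {"bacon", "beef", "chicken", "ham"}


def contradicts_diet(response):
    """Flag a response that claims a vegetarian diet but picks a meat dish as a favorite."""
    favorites = {item.strip().lower() for item in response.get("favorite_foods", [])}
    return response.get("diet") == "vegetarian" and bool(favorites & MEAT_ITEMS)


print(contradicts_diet({"diet": "vegetarian", "favorite_foods": ["Pasta", "Bacon"]}))
```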

Incomplete surveys

Frequently asked questions

What is data cleaning?

Data cleaning involves spotting and resolving potential data inconsistencies or errors to improve your data quality. An error is any value (e.g., recorded weight) that doesn’t reflect the true value (e.g., actual weight) of something that’s being measured.

In this process, you review, analyze, detect, modify, or remove “dirty” data to make your dataset “clean.” Data cleaning is also called data cleansing or data scrubbing.

Frequently asked questions: Methodology

Attrition refers to participants leaving a study. It always happens to some extent—for example, in randomized controlled trials for medical research.

Differential attrition occurs when attrition or dropout rates differ systematically between the intervention and the control group. As a result, the characteristics of the participants who drop out differ from the characteristics of those who stay in the study. Because of this, study results may be biased.
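Comparing dropout rates per group is a simple first check for differential attrition. The enrollment and completion counts below are invented for illustration. A large gap between groups suggests the completers may no longer be comparable. Deciding whether a gap matters would need a formal test and a look at who dropped out, which this sketch does not do.

```python
def attrition_rates(enrolled, completed):
    """Share of participants in each group who dropped out before the end of the study."""
    return {group: 1 - completed[group] / enrolled[group] for group in enrolled}


rates = attrition_rates(
    enrolled={"intervention": 120, "control": 118},
    completed={"intervention": 84, "control": 106},
)
for group, rate in rates.items():
    print(f"{group}: {rate:.1%} dropped out")
```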

Action research is conducted in order to solve a particular issue immediately, while case studies are often conducted over a longer period of time and focus more on observing and analyzing a particular ongoing phenomenon.

Action research is focused on solving a problem or informing individual and community-based knowledge in a way that impacts teaching, learning, and other related processes. It is less focused on contributing theoretical input, instead producing actionable input.

Action research is particularly popular with educators as a form of systematic inquiry because it prioritizes reflection and bridges the gap between theory and practice. Educators are able to simultaneously investigate an issue as they solve it, and the method is very iterative and flexible.

A cycle of inquiry is another name for action research. It is usually visualized as a spiral following a series of steps, such as “planning → acting → observing → reflecting.”

To make quantitative observations , you need to use instruments that are capable of measuring the quantity you want to observe. For example, you might use a ruler to measure the length of an object or a thermometer to measure its temperature.

Criterion validity and construct validity are both types of measurement validity. In other words, they both show you how accurately a method measures something.

While construct validity is the degree to which a test or other measurement method measures what it claims to measure, criterion validity is the degree to which test results correspond to an established criterion. That criterion may be measured in the future (predictive validity) or at the same time (concurrent validity).

Construct validity is often considered the overarching type of measurement validity. You need to have face validity, content validity, and criterion validity in order to achieve construct validity.

Convergent validity and discriminant validity are both subtypes of construct validity. Together, they help you evaluate whether a test measures the concept it was designed to measure.

- Convergent validity indicates whether a test that is designed to measure a particular construct correlates with other tests that assess the same or similar construct.

- Discriminant validity indicates whether two tests that should not be highly related to each other are indeed not related. This type of validity is also called divergent validity.

You need to assess both in order to demonstrate construct validity. Neither one alone is sufficient for establishing construct validity.

Content validity shows you how accurately a test or other measurement method taps into the various aspects of the specific construct you are researching.

In other words, it helps you answer the question: “does the test measure all aspects of the construct I want to measure?” If it does, then the test has high content validity.

The higher the content validity, the more accurate the measurement of the construct.

If the test fails to include parts of the construct, or irrelevant parts are included, the validity of the instrument is threatened, which brings your results into question.

Face validity and content validity are similar in that they both evaluate how suitable the content of a test is. The difference is that face validity is subjective, and assesses content at surface level.

When a test has strong face validity, anyone would agree that the test’s questions appear to measure what they are intended to measure.

For example, looking at a 4th grade math test consisting of problems in which students have to add and multiply, most people would agree that it has strong face validity (i.e., it looks like a math test).

On the other hand, content validity evaluates how well a test represents all the aspects of a topic. Assessing content validity is more systematic and relies on expert evaluation of each question, analyzing whether each one covers the aspects that the test was designed to cover.

A 4th grade math test would have high content validity if it covered all the skills taught in that grade. Experts (in this case, math teachers) would have to evaluate the content validity by comparing the test to the learning objectives.

Snowball sampling is a non-probability sampling method. Unlike probability sampling (which involves some form of random selection), the initial individuals selected to be studied are the ones who recruit new participants.

Because not every member of the target population has an equal chance of being recruited into the sample, selection in snowball sampling is non-random.

Snowball sampling is a non-probability sampling method, where there is not an equal chance for every member of the population to be included in the sample.

This means that you cannot use inferential statistics to make generalizations, which are often the goal of quantitative research. As such, a snowball sample is not representative of the target population and is usually a better fit for qualitative research.

Snowball sampling relies on the use of referrals. Here, the researcher recruits one or more initial participants, who then recruit the next ones.

Participants share similar characteristics and/or know each other. Because of this, not every member of the population has an equal chance of being included in the sample, giving rise to sampling bias.

Snowball sampling is best used in the following cases:

- If there is no sampling frame available (e.g., people with a rare disease)

- If the population of interest is hard to access or locate (e.g., people experiencing homelessness)

- If the research focuses on a sensitive topic (e.g., extramarital affairs)

The reproducibility and replicability of a study can be ensured by writing a transparent, detailed method section and using clear, unambiguous language.

Reproducibility and replicability are related terms.

- Reproducing research entails reanalyzing the existing data in the same manner.

- Replicating (or repeating) the research entails reconducting the entire analysis, including the collection of new data.

- A successful reproduction shows that the data analyses were conducted in a fair and honest manner.

- A successful replication shows that the reliability of the results is high.

Stratified sampling and quota sampling both involve dividing the population into subgroups and selecting units from each subgroup. The purpose in both cases is to select a representative sample and/or to allow comparisons between subgroups.

The main difference is that in stratified sampling, you draw a random sample from each subgroup (probability sampling). In quota sampling, you select a predetermined number or proportion of units in a non-random manner (non-probability sampling).
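The contrast between the two methods can be shown side by side. In this sketch, units are arbitrary Python values and `stratum_of` is a function you supply that returns a unit’s subgroup. Both names are illustrative assumptions. The stratified version draws a random sample within each subgroup. The quota version simply accepts whoever arrives first until each subgroup’s quota is filled, which is what makes it non-random.

```python
import random
from collections import defaultdict


def stratified_sample(units, stratum_of, per_stratum, seed=None):
    """Probability sampling: a simple random sample drawn within every subgroup."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in units:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample


def quota_sample(arrivals, stratum_of, quota):
    """Non-probability sampling: accept whoever turns up until each quota is met."""
    counts = defaultdict(int)
    sample = []
    for unit in arrivals:  # order of arrival, not random selection, decides inclusion
        group = stratum_of(unit)
        if counts[group] < quota.get(group, 0):
            sample.append(unit)
            counts[group] += 1
    return sample


population = [("A", i) for i in range(10)] + [("B", i) for i in range(10)]
print(stratified_sample(population, lambda u: u[0], per_stratum=3, seed=1))
print(quota_sample(population, lambda u: u[0], {"A": 2, "B": 1}))
```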

Purposive and convenience sampling are both sampling methods that are typically used in qualitative data collection.

A convenience sample is drawn from a source that is conveniently accessible to the researcher. Convenience sampling does not distinguish characteristics among the participants. On the other hand, purposive sampling focuses on selecting participants possessing characteristics associated with the research study.

The findings of studies based on either convenience or purposive sampling can only be generalized to the (sub)population from which the sample is drawn, and not to the entire population.

Random sampling or probability sampling is based on random selection. This means that each unit has an equal chance (i.e., equal probability) of being included in the sample.

On the other hand, convenience sampling involves stopping people haphazardly rather than through true random selection. Because of this, not everyone has an equal chance of being selected, depending on the place, time, or day you are collecting your data.

Convenience sampling and quota sampling are both non-probability sampling methods. They both use non-random criteria like availability, geographical proximity, or expert knowledge to recruit study participants.

However, in convenience sampling, you continue to sample units or cases until you reach the required sample size.

In quota sampling, you first need to divide your population of interest into subgroups (strata) and estimate their proportions (quota) in the population. Then you can start your data collection, using convenience sampling to recruit participants, until the proportions in each subgroup coincide with the estimated proportions in the population.

A sampling frame is a list of every member in the entire population. It is important that the sampling frame is as complete as possible, so that your sample accurately reflects your population.

Stratified and cluster sampling may look similar, but bear in mind that groups created in cluster sampling are heterogeneous, so the individual characteristics in the cluster vary. In contrast, groups created in stratified sampling are homogeneous, as units share characteristics.

Relatedly, in cluster sampling you randomly select entire groups and include all units of each group in your sample. However, in stratified sampling, you select some units of all groups and include them in your sample. In this way, both methods can ensure that your sample is representative of the target population.
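Cluster sampling, as described above, randomly selects whole groups and keeps every unit in them. In this sketch, clusters are assumed to be a dictionary mapping a hypothetical cluster name (for example, a school) to its list of units. Compare this with stratified sampling, which would instead draw some units from every group.

```python
import random


def cluster_sample(clusters, n_clusters, seed=None):
    """Randomly pick whole clusters and include every unit in each chosen cluster."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [unit for name in chosen for unit in clusters[name]]


schools = {"school_a": [1, 2], "school_b": [3, 4, 5], "school_c": [6]}
print(cluster_sample(schools, n_clusters=2, seed=0))
```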

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.

The key difference between observational studies and experimental designs is that a well-done observational study does not influence the responses of participants, while experiments do have some sort of treatment condition applied to at least some participants by random assignment.

An observational study is a great choice for you if your research question is based purely on observations. If there are ethical, logistical, or practical concerns that prevent you from conducting a traditional experiment, an observational study may be a good choice. In an observational study, there is no interference or manipulation of the research subjects, and there are no control or treatment groups.

It’s often best to ask a variety of people to review your measurements. You can ask experts, such as other researchers, or laypeople, such as potential participants, to judge the face validity of tests.

While experts have a deep understanding of research methods, the people you’re studying can provide you with valuable insights you may have missed otherwise.

Face validity is important because it’s a simple first step to measuring the overall validity of a test or technique. It’s a relatively intuitive, quick, and easy way to start checking whether a new measure seems useful at first glance.

Good face validity means that anyone who reviews your measure says that it seems to be measuring what it’s supposed to. With poor face validity, someone reviewing your measure may be left confused about what you’re measuring and why you’re using this method.

Face validity is about whether a test appears to measure what it’s supposed to measure. This type of validity is concerned with whether a measure seems relevant and appropriate for what it’s assessing only on the surface.

Statistical analyses are often applied to test validity with data from your measures. You test convergent validity and discriminant validity with correlations to see if results from your test are positively or negatively related to those of other established tests.

You can also use regression analyses to assess whether your measure is actually predictive of outcomes that you expect it to predict theoretically. A regression analysis that supports your expectations strengthens your claim of construct validity.
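The correlation check for convergent and discriminant validity can be sketched with a hand-written Pearson correlation coefficient. The scores below are made up for illustration. A strong positive correlation with an established test of the same construct supports convergent validity. A weak correlation with a test of an unrelated construct supports discriminant validity. What counts as "strong" or "weak" is a judgment this sketch does not settle.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


new_scale = [10, 12, 15, 18, 20]        # your new measure
related_scale = [11, 13, 14, 19, 21]    # established test of the same construct
unrelated_scale = [5, 3, 6, 2, 4]       # test of a distinct construct
print(f"convergent r = {pearson_r(new_scale, related_scale):.2f}")
print(f"discriminant r = {pearson_r(new_scale, unrelated_scale):.2f}")
```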

When designing or evaluating a measure, construct validity helps you ensure you’re actually measuring the construct you’re interested in. If you don’t have construct validity, you may inadvertently measure unrelated or distinct constructs and lose precision in your research.

Construct validity is often considered the overarching type of measurement validity, because it covers all of the other types. You need to have face validity, content validity, and criterion validity to achieve construct validity.

Construct validity is about how well a test measures the concept it was designed to evaluate. It’s one of four types of measurement validity, which include construct validity, content validity, face validity, and criterion validity.

There are two subtypes of construct validity.

- Convergent validity: The extent to which your measure corresponds to measures of related constructs

- Discriminant validity: The extent to which your measure is unrelated or negatively related to measures of distinct constructs

Naturalistic observation is a valuable tool because of its flexibility, external validity, and suitability for topics that can’t be studied in a lab setting.

The downsides of naturalistic observation include its lack of scientific control, ethical considerations, and potential for bias from observers and subjects.

Naturalistic observation is a qualitative research method where you record the behaviors of your research subjects in real world settings. You avoid interfering or influencing anything in a naturalistic observation.

You can think of naturalistic observation as “people watching” with a purpose.

A dependent variable is what changes as a result of the independent variable manipulation in experiments. It’s what you’re interested in measuring, and it “depends” on your independent variable.

In statistics, dependent variables are also called:

- Response variables (they respond to a change in another variable)

- Outcome variables (they represent the outcome you want to measure)

- Left-hand-side variables (they appear on the left-hand side of a regression equation)

An independent variable is the variable you manipulate, control, or vary in an experimental study to explore its effects. It’s called “independent” because it’s not influenced by any other variables in the study.

Independent variables are also called:

- Explanatory variables (they explain an event or outcome)

- Predictor variables (they can be used to predict the value of a dependent variable)

- Right-hand-side variables (they appear on the right-hand side of a regression equation)

As a rule of thumb, questions related to thoughts, beliefs, and feelings work well in focus groups. Take your time formulating strong questions, paying special attention to phrasing. Be careful to avoid leading questions, which can bias your responses.

Overall, your focus group questions should be:

- Open-ended and flexible

- Impossible to answer with “yes” or “no” (questions that start with “why” or “how” are often best)

- Unambiguous, getting straight to the point while still stimulating discussion

- Unbiased and neutral

A structured interview is a data collection method that relies on asking questions in a set order to collect data on a topic. Structured interviews are often quantitative in nature and are best used when:

- You already have a very clear understanding of your topic. Perhaps significant research has already been conducted, or you have done some prior research yourself; either way, you already possess a baseline for designing strong structured questions.

- You are constrained in terms of time or resources and need to analyze your data quickly and efficiently.

- Your research question depends on strong parity between participants, with environmental conditions held constant.

More flexible interview options include semi-structured interviews, unstructured interviews, and focus groups.

Social desirability bias is the tendency for interview participants to give responses that will be viewed favorably by the interviewer or other participants. It occurs in all types of interviews and surveys, but is most common in semi-structured interviews, unstructured interviews, and focus groups.

Social desirability bias can be mitigated by ensuring participants feel at ease and comfortable sharing their views. Make sure to pay attention to your own body language and any physical or verbal cues, such as nodding or widening your eyes.

This type of bias can also occur in observations if the participants know they’re being observed. They might alter their behavior accordingly.

The interviewer effect is a type of bias that emerges when a characteristic of an interviewer (race, age, gender identity, etc.) influences the responses given by the interviewee.

There is a risk of an interviewer effect in all types of interviews, but it can be mitigated by writing high-quality interview questions.

A semi-structured interview is a blend of structured and unstructured types of interviews. Semi-structured interviews are best used when:

- You have prior interview experience. Spontaneous questions are deceptively challenging, and it’s easy to accidentally ask a leading question or make a participant uncomfortable.

- Your research question is exploratory in nature. Participant answers can guide future research questions and help you develop a more robust knowledge base for future research.

An unstructured interview is the most flexible type of interview, but it is not always the best fit for your research topic.

Unstructured interviews are best used when:

- You are an experienced interviewer and have a very strong background in your research topic, since it is challenging to ask spontaneous, colloquial questions.

- Your research question is exploratory in nature. While you may have developed hypotheses, you are open to discovering new or shifting viewpoints through the interview process.

- You are seeking descriptive data, and are ready to ask questions that will deepen and contextualize your initial thoughts and hypotheses.

- Your research depends on forming connections with your participants and making them feel comfortable revealing deeper emotions, lived experiences, or thoughts.

The four most common types of interviews are:

- Structured interviews: The questions are predetermined in both topic and order.

- Semi-structured interviews: A few questions are predetermined, but other questions aren’t planned.

- Unstructured interviews: None of the questions are predetermined.

- Focus group interviews: The questions are presented to a group instead of one individual.

Deductive reasoning is commonly used in scientific research, and it’s especially associated with quantitative research .

In research, you might have come across something called the hypothetico-deductive method . It’s the scientific method of testing hypotheses to check whether your predictions are substantiated by real-world data.

Deductive reasoning is a logical approach where you progress from general ideas to specific conclusions. It’s often contrasted with inductive reasoning, where you start with specific observations and form general conclusions.

Deductive reasoning is also called deductive logic.

There are many different types of inductive reasoning that people use formally or informally.

Here are a few common types:

- Inductive generalization: You use observations about a sample to come to a conclusion about the population it came from.

- Statistical generalization: You use specific numbers about samples to make statements about populations.

- Causal reasoning: You make cause-and-effect links between different things.

- Sign reasoning: You make a conclusion about a correlational relationship between different things.

- Analogical reasoning: You make a conclusion about something based on its similarities to something else.

Inductive reasoning is a bottom-up approach, while deductive reasoning is top-down.

Inductive reasoning takes you from the specific to the general, while in deductive reasoning, you make inferences by going from general premises to specific conclusions.

In inductive research, you start by making observations or gathering data. Then, you take a broad scan of your data and search for patterns. Finally, you make general conclusions that you might incorporate into theories.

Inductive reasoning is a method of drawing conclusions by going from the specific to the general. It’s usually contrasted with deductive reasoning, where you proceed from general information to specific conclusions.

Inductive reasoning is also called inductive logic or bottom-up reasoning.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess — it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Triangulation can help:

- Reduce research bias that comes from using a single method, theory, or investigator

- Enhance validity by approaching the same topic with different tools

- Establish credibility by giving you a more complete picture of the research problem

But triangulation can also pose problems:

- It’s time-consuming and labor-intensive, often involving an interdisciplinary team.

- Your results may be inconsistent or even contradictory.

There are four main types of triangulation:

- Data triangulation: Using data from different times, spaces, and people

- Investigator triangulation: Involving multiple researchers in collecting or analyzing data

- Theory triangulation: Using varying theoretical perspectives in your research

- Methodological triangulation: Using different methodologies to approach the same topic

Many academic fields use peer review, largely to determine whether a manuscript is suitable for publication. Peer review enhances the credibility of the published manuscript.

However, peer review is also common in non-academic settings. The United Nations, the European Union, and many individual nations use peer review to evaluate grant applications. It is also widely used in medical and health-related fields as a teaching or quality-of-care measure.

Peer assessment is often used in the classroom as a pedagogical tool. Both receiving feedback and providing it are thought to enhance the learning process, helping students think critically and collaboratively.

Peer review can stop obviously problematic, falsified, or otherwise untrustworthy research from being published. It also represents an excellent opportunity to get feedback from renowned experts in your field. It acts as a first line of defense, helping you ensure your argument is clear and that there are no gaps, vague terms, or unanswered questions for readers who weren’t involved in the research process.

Peer-reviewed articles are considered a highly credible source because of the stringent process they go through before publication.

In general, the peer review process involves the following steps:

- First, the author submits the manuscript to the editor.

- Next, the editor screens the manuscript and decides whether to:

  - Reject the manuscript and send it back to the author, or

  - Send it onward to the selected peer reviewer(s).

- Then, the peer review itself takes place. The reviewer provides feedback, addressing any major or minor issues with the manuscript, and gives their advice regarding what edits should be made.

- Lastly, the reviewed manuscript is sent back to the author, who makes the edits and resubmits it to the editor for publication.

Exploratory research is often used when the issue you’re studying is new or when the data collection process is challenging for some reason.

You can use exploratory research if you have a general idea or a specific question that you want to study but there is no preexisting knowledge or paradigm with which to study it.

Exploratory research is a methodological approach that explores research questions that have not previously been studied in depth. It is often used when the issue you’re studying is new, or the data collection process is challenging in some way.

Explanatory research is used to investigate how or why a phenomenon occurs. It is often one of the first stages in the research process, serving as a jumping-off point for future research.

Exploratory research aims to explore the main aspects of an under-researched problem, while explanatory research aims to explain the causes and consequences of a well-defined problem.

Explanatory research is a research method used to investigate how or why something occurs when only a small amount of information is available pertaining to that topic. It can help you increase your understanding of a given topic.

Clean data are valid, accurate, complete, consistent, unique, and uniform. Dirty data include inconsistencies and errors.

Dirty data can come from any part of the research process, including poor research design, inappropriate measurement materials, or flawed data entry.

Data cleaning takes place between data collection and data analysis. But you can use some methods even before collecting data.

For clean data, you should start by designing measures that collect valid data. Data validation at the time of data entry or collection helps you minimize the amount of data cleaning you’ll need to do.
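As a rough illustration of validation at the point of entry, the Python sketch below checks each survey record against simple rules before it is accepted. The `SurveyResponse` record, its fields, the allowed groups, and the age and rating ranges are all hypothetical assumptions chosen for the example; real rules would come from your own measurement design.

```python
from dataclasses import dataclass

# Hypothetical validation rules for a survey record; field names, groups,
# and ranges are illustrative assumptions, not taken from any real study.
VALID_GROUPS = {"control", "treatment"}

@dataclass
class SurveyResponse:
    participant_id: str
    age: int
    group: str
    satisfaction: int  # assumed to be a 1-5 rating

def validate(resp: SurveyResponse) -> list[str]:
    """Check a record at entry time; an empty list means it can be accepted."""
    errors = []
    if not resp.participant_id.strip():
        errors.append("participant_id is required")
    if not 18 <= resp.age <= 99:
        errors.append(f"age {resp.age} is outside 18-99")
    if resp.group.strip().lower() not in VALID_GROUPS:
        errors.append(f"unknown group {resp.group!r}")
    if resp.satisfaction not in range(1, 6):
        errors.append(f"satisfaction {resp.satisfaction} is not on the 1-5 scale")
    return errors

print(validate(SurveyResponse("P001", 34, "Treatment", 4)))  # []
print(validate(SurveyResponse("", 140, "placebo", 7)))       # four problems
```

Rejecting or flagging a record at this stage means the problem can be fixed while the participant or source is still at hand, rather than discovered during cleaning.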

After data collection, you can use data standardization and data transformation to clean your data. You’ll also deal with any missing values, outliers, and duplicate values.
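A minimal sketch of post-collection standardization and deduplication, assuming a small hypothetical dataset with `id`, `city`, and `height_cm` columns (names invented for illustration). It trims and title-cases text, converts heights to numbers, keeps blank heights as `None`, and drops repeated participant ids.

```python
# Hypothetical raw rows with inconsistent formatting, a missing value,
# and a duplicated participant; column names are illustrative only.
raw = [
    {"id": "P1", "city": " Boston ", "height_cm": "172"},
    {"id": "P2", "city": "boston", "height_cm": ""},
    {"id": "P3", "city": "NEW YORK", "height_cm": "165.5"},
    {"id": "P1", "city": "Boston", "height_cm": "172"},  # duplicate of P1
]

def standardize(row):
    """Give every field a uniform format and type; blank heights become None."""
    height = row["height_cm"].strip()
    return {
        "id": row["id"].strip(),
        "city": row["city"].strip().title(),
        "height_cm": float(height) if height else None,
    }

def clean(rows):
    """Standardize rows, then keep only the first record for each id."""
    seen, cleaned = set(), []
    for row in map(standardize, rows):
        if row["id"] in seen:
            continue  # duplicate participant: skip it
        seen.add(row["id"])
        cleaned.append(row)
    return cleaned

for row in clean(raw):
    print(row)
```

Keeping the first occurrence of a duplicated id is one possible policy, not the only one. Missing heights are left as `None` so the later analysis can decide whether to exclude or impute them.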

Every dataset requires different techniques to clean dirty data, but you need to address these issues in a systematic way. You focus on finding and resolving data points that don’t agree or fit with the rest of your dataset.

These might be missing values, outliers, duplicate values, or incorrectly formatted or irrelevant data. You’ll start with screening and diagnosing your data. Then, you’ll often standardize and accept or remove data to make your dataset consistent and valid.
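Screening and diagnosing can be done before changing anything. The sketch below, using hypothetical ids and ages, only reports problems: it counts missing values, lists repeated ids, and flags outliers. The outlier rule here is Tukey's fences (values more than 1.5 times the interquartile range beyond the quartiles), one common convention chosen as an assumption; other thresholds may suit your data better.

```python
import statistics
from collections import Counter

def screen(ids, values):
    """Diagnose without modifying data: missing count, duplicate ids, IQR outliers."""
    present = [v for v in values if v is not None]
    q1, _, q3 = statistics.quantiles(present, n=4)  # quartiles of non-missing values
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr       # Tukey's fences
    return {
        "missing": len(values) - len(present),
        "duplicate_ids": sorted(i for i, c in Counter(ids).items() if c > 1),
        "outliers": [v for v in present if v < low or v > high],
    }

# Hypothetical screening data: one missing age, a repeated id, one implausible age.
ids = ["P1", "P2", "P3", "P4", "P5", "P6", "P7", "P8", "P3"]
ages = [23, 25, 27, 29, None, 31, 33, 240, 35]

print(screen(ids, ages))
# {'missing': 1, 'duplicate_ids': ['P3'], 'outliers': [240]}
```

The report tells you where to look; whether the 240 is a typo to correct or a genuine value to keep is a judgment made in the diagnosis step.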

Data cleaning is necessary for valid and appropriate analyses. Dirty data contain inconsistencies or errors, but cleaning your data helps you minimize or resolve these.

Without data cleaning, you could end up with a Type I or Type II error in your conclusion. These erroneous conclusions can have important practical consequences, because they can lead to misplaced investments or missed opportunities.

Research misconduct means making up or falsifying data, manipulating data analyses, or misrepresenting results in research reports. It’s a form of academic fraud.

These actions are committed intentionally and can have serious consequences; research misconduct is not a simple mistake or a point of disagreement but a serious ethical failure.

Anonymity means you don’t know who the participants are, while confidentiality means you know who they are but remove identifying information from your research report. Both are important ethical considerations.

You can only guarantee anonymity by not collecting any personally identifying information—for example, names, phone numbers, email addresses, IP addresses, physical characteristics, photos, or videos.

You can keep data confidential by using aggregate information in your research report, so that you only refer to groups of participants rather than individuals.
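To illustrate reporting at the group level, this sketch summarizes hypothetical participant data by department so that the report contains only group sizes and mean scores, never names. The `department` and `stress` fields and the `aggregate` helper are invented for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical participant-level data; names are stored but never reported.
responses = [
    {"name": "A. Smith", "department": "Nursing", "stress": 7},
    {"name": "B. Jones", "department": "Nursing", "stress": 5},
    {"name": "C. Lee", "department": "Surgery", "stress": 8},
    {"name": "D. Kim", "department": "Surgery", "stress": 6},
]

def aggregate(rows, group_key, value_key):
    """Return only group-level counts and means, dropping individual records."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])
    return {g: {"n": len(v), "mean": mean(v)} for g, v in groups.items()}

print(aggregate(responses, "department", "stress"))
```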

Research ethics matter for scientific integrity, human rights and dignity, and collaboration between science and society. These principles make sure that participation in studies is voluntary, informed, and safe.

Ethical considerations in research are a set of principles that guide your research designs and practices. These principles include voluntary participation, informed consent, anonymity, confidentiality, potential for harm, and results communication.

Scientists and researchers must always adhere to a certain code of conduct when collecting data from others.

These considerations protect the rights of research participants, enhance research validity , and maintain scientific integrity.

In multistage sampling, you can use probability or non-probability sampling methods.

For a probability sample, you have to conduct probability sampling at every stage.

You can mix it up by using simple random sampling, systematic sampling, or stratified sampling to select units at different stages, depending on what is applicable and relevant to your study.

Multistage sampling can simplify data collection when you have large, geographically spread samples, and you can obtain a probability sample without a complete sampling frame.

But multistage sampling may not lead to a representative sample, and larger samples are needed for multistage samples to achieve the statistical properties of simple random samples.

These are four of the most common mixed methods designs:

- Convergent parallel: Quantitative and qualitative data are collected at the same time and analyzed separately. After both analyses are complete, compare your results to draw overall conclusions.

- Embedded: Quantitative and qualitative data are collected at the same time, but within a larger quantitative or qualitative design. One type of data is secondary to the other.

- Explanatory sequential: Quantitative data is collected and analyzed first, followed by qualitative data. You can use this design if you think your qualitative data will explain and contextualize your quantitative findings.

- Exploratory sequential: Qualitative data is collected and analyzed first, followed by quantitative data. You can use this design if you think the quantitative data will confirm or validate your qualitative findings.

Triangulation in research means using multiple datasets, methods, theories and/or investigators to address a research question. It’s a research strategy that can help you enhance the validity and credibility of your findings.

Triangulation is mainly used in qualitative research, but it’s also commonly applied in quantitative research. Mixed methods research always uses triangulation.

In multistage sampling, or multistage cluster sampling, you draw a sample from a population using smaller and smaller groups at each stage.

This method is often used to collect data from a large, geographically spread group of people in national surveys, for example. You take advantage of hierarchical groupings (e.g., from state to city to neighborhood) to create a sample that’s less expensive and time-consuming to collect data from.
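The stage-by-stage logic can be sketched in Python, assuming a small hypothetical frame of states, cities, and neighborhoods. Simple random sampling is used at each stage here; as noted above, systematic or stratified sampling could be swapped in at any stage. The `multistage_sample` function and the frame are illustrative only.

```python
import random

# Hypothetical hierarchical frame: state -> city -> neighborhoods.
frame = {
    "State A": {"City 1": ["N1", "N2", "N3"], "City 2": ["N4", "N5"], "City 3": ["N6", "N7"]},
    "State B": {"City 4": ["N8", "N9"], "City 5": ["N10", "N11", "N12"]},
    "State C": {"City 6": ["N13", "N14"], "City 7": ["N15"]},
}

def multistage_sample(frame, n_states, n_cities, n_neighborhoods, seed=None):
    """Draw a simple random sample at each stage, moving down the hierarchy."""
    rng = random.Random(seed)
    sample = []
    for state in rng.sample(sorted(frame), n_states):           # stage 1: states
        cities = frame[state]
        k_cities = min(n_cities, len(cities))
        for city in rng.sample(sorted(cities), k_cities):       # stage 2: cities
            hoods = cities[city]
            k_hoods = min(n_neighborhoods, len(hoods))
            for hood in rng.sample(hoods, k_hoods):              # stage 3: neighborhoods
                sample.append((state, city, hood))
    return sample

print(multistage_sample(frame, n_states=2, n_cities=1, n_neighborhoods=2, seed=42))
```

In practice, you would only need to list the neighborhoods inside the cities actually selected, rather than a complete frame of every unit in the population.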

No, the steepness or slope of the line isn’t related to the correlation coefficient value. The correlation coefficient only tells you how closely your data fit on a line, so two datasets with the same correlation coefficient can have very different slopes.

To find the slope of the line, you’ll need to perform a regression analysis.

Correlation coefficients always range between -1 and 1.

The sign of the coefficient tells you the direction of the relationship: a positive value means the variables change together in the same direction, while a negative value means they change together in opposite directions.

The absolute value of a number is equal to the number without its sign. The absolute value of a correlation coefficient tells you the magnitude of the correlation: the greater the absolute value, the stronger the correlation.

These are the assumptions your data must meet if you want to use Pearson’s r:

- Both variables are on an interval or ratio level of measurement

- Data from both variables follow normal distributions

- Your data have no outliers

- Your data are from a random or representative sample

- You expect a linear relationship between the two variables

Quantitative research designs can be divided into two main categories:

- Correlational and descriptive designs are used to investigate characteristics, averages, trends, and associations between variables.