- Int J Clin Pract

- v.2022; 2022

Semantic Web in Healthcare: A Systematic Literature Review of Application, Research Gap, and Future Research Avenues

A. K. M. Bahalul Haque

1 Electrical and Computer Engineering, North South University, Dhaka 1229, Bangladesh

B. M. Arifuzzaman

Sayed Abu Noman Siddik, Tabassum Sadia Shahjahan, T. S. Saleena

2 PG & Research Department of Computer Science, Sullamussalam Science College Areekode, Malappuram, Kerala 673639, India

Morshed Alam

3 Institute of Education and Research, Jagannath University, Dhaka 1100, Bangladesh

Md. Rabiul Islam

4 Department of Pharmacy, University of Asia Pacific, 74/A Green Road, Farmgate, Dhaka 1205, Bangladesh

Foyez Ahmmed

5 Department of Statistics, Comilla University, Kotbari, Cumilla, Bangladesh

Md. Jamal Hossain

6 Department of Pharmacy, State University of Bangladesh, 77 Satmasjid Road, Dhanmondi, Dhaka 1205, Bangladesh

Associated Data

The data used to support the findings of this study are included within the article.

Today, healthcare has become one of the largest and most fast-paced industries due to the rapid development of digital healthcare technologies. Enhancing healthcare services fundamentally depends on communicating and linking the massive volumes of available healthcare data. However, the key challenge in reaching this ambitious goal is enabling information exchange across heterogeneous sources and methods, as well as establishing efficient tools and techniques. Semantic Web (SW) technologies can help to tackle these problems. They can enhance knowledge exchange, information management, data interoperability, and decision support in healthcare systems. They can also be utilized to create various e-healthcare systems that aid medical practitioners in making decisions and provide patients with crucial medical information and automated hospital services. This systematic literature review (SLR) on SW in healthcare systems aims to assess and critique previous findings while adhering to appropriate research procedures. We reviewed 65 papers and derived five themes: e-service, disease, information management, frontier technology, and regulatory conditions. In each thematic research area, we presented the contributions of previous literature. We addressed the topic by responding to five specific research questions. We conclude the SLR by identifying research gaps and establishing future research goals that will help to minimize the difficulty of adopting SW in healthcare systems and provide new approaches for the progress of SW-based medical systems.

1. Introduction

Healthcare refers to the detection and treatment of illnesses by medical professionals. The healthcare system consists of medical practitioners, researchers, and technologists who work together to provide affordable and quality healthcare services. They generate considerable amounts of data from heterogeneous sources to enhance diagnostic accuracy, speed up treatment decisions, and pave the way for the effective distribution of information between medical practitioners and patients. However, these valuable data must be organized appropriately so that they can be retrieved when required.

One of the main challenges in utilizing medical healthcare data is extracting knowledge from heterogeneous data sources. The interoperability of well-being and clinical information poses tremendous obstacles due to data irregularity and inconsistency in structure and organization [ 1 , 2 ]. This is also because data are stored in various authoritative areas, making it challenging to retrieve knowledge and authorize a primary route along with information analysis. The information from a hospital can prove to be very useful in healthcare if these data are shared, analyzed, integrated, and managed regularly. Again, platforms that provide healthcare services also face dilemmas in automating time-efficient and low-cost web service arrangements [ 3 ]. This indicates that meaningful healthcare solutions must be proposed and implemented to provide extensive functionality based on electronic health record (EHR) workflows and data flow to enable scalable and interoperable systems [ 4 ], such as a blockchain-based smart e-health system that provides patients with an easy-to-access electronic health record system through a distributed ledger containing records of all occurrences [ 5 – 8 ]. A standard-based and scalable semantic interoperability framework is required to integrate patient care and clinical research domains [ 9 ]. The increasing number of knowledge grounds, heterogeneity of schema representation, and lack of conceptual description make the processing of these knowledge bases complicated. Non-experts find mixing knowledge with patient databases challenging to facilitate data sharing [ 10 ]. Similarly, ensuring the certainty of disease diagnosis also becomes a more significant challenge for health providers. Brashers et al. [ 11 ] in their work examined the significance of credible authority and the level of confidence HIV patients have in their medical professionals. 
Many participants agreed that doctors might not be fully informed of their ailment, but they emphasized the value of a strong patient-physician bond. With the help of big data management techniques, these challenges can be minimized. Likewise, Crowd HEALTH aims to establish a new paradigm of holistic health records (HHRs) that incorporate all factors defining health status by facilitating individual illness prevention and health promotion through the provision of collective knowledge and intelligence [ 11 – 13 ]. Another similar approach is adopted by the beHealthier platform which constructs health policies out of collective knowledge by using a newly proposed type of electronic health records (i.e., eXtended Health Records (XHRs)) and analysis of ingested healthcare data [ 14 ]. Making healthcare decisions during the diagnosis of a disease is a complex undertaking. Clinicians combine their subjectivity with experimental and research artifacts to make diagnostic decisions [ 9 ].

In recent years, Web 2.0 technologies have significantly changed the healthcare domain. However, given the growing demand to access data from anywhere, which is primarily driven by the widespread use of smartphones, computers, and cloud applications, Web 2.0 is no longer sufficient. To address such challenges, Semantic Web technologies have been adopted over time to facilitate efficient sharing of medical knowledge and establish a unified healthcare system. Tim Berners-Lee, also known as the father of the web, proposed the World Wide Web in 1989 and later introduced the Semantic Web (SW) [ 15 ]. The term “Semantic Web” refers to linked data formed by combining information with intelligent content. SW is an extension of the World Wide Web (WWW) and provides technologies for human agents and machines to understand web page contents, metadata, and other information objects. It also provides a framework for any kind of content, such as web pages, text documents, videos, speech files, and so on. The linked data stack comprises technologies such as Resource Description Framework (RDF), Web Ontology Language (OWL), SPARQL, and Simple Knowledge Organization System (SKOS). SW aims to create an intelligent, flexible, and personalized environment that influences various sectors and professions, including the healthcare system.
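To make the triple-based data model behind RDF and SPARQL more concrete, the following minimal Python sketch stores statements as subject-predicate-object tuples and answers a query by pattern matching. It is an illustration only: the `ex:` identifiers, the `TRIPLES` data, and the `match` helper are hypothetical and do not come from any of the reviewed systems, and a real deployment would use an RDF store and SPARQL rather than plain tuples.

```python
from typing import Iterable, Iterator, Optional, Tuple

Triple = Tuple[str, str, str]

# Hypothetical example data; the "ex:" names are illustrative only.
TRIPLES = [
    ("ex:patient1", "rdf:type", "ex:Patient"),
    ("ex:patient1", "ex:hasSymptom", "ex:Fever"),
    ("ex:patient1", "ex:hasSymptom", "ex:Cough"),
    ("ex:patient2", "rdf:type", "ex:Patient"),
    ("ex:patient2", "ex:hasSymptom", "ex:Fever"),
]


def match(
    triples: Iterable[Triple],
    s: Optional[str] = None,
    p: Optional[str] = None,
    o: Optional[str] = None,
) -> Iterator[Triple]:
    """Yield triples that fit the pattern; None acts as a wildcard,
    playing the role that a variable plays in a SPARQL triple pattern."""
    for triple in triples:
        if s is not None and triple[0] != s:
            continue
        if p is not None and triple[1] != p:
            continue
        if o is not None and triple[2] != o:
            continue
        yield triple


# Roughly: SELECT ?who WHERE { ?who ex:hasSymptom ex:Fever }
patients_with_fever = sorted(
    t[0] for t in match(TRIPLES, p="ex:hasSymptom", o="ex:Fever")
)
print(patients_with_fever)
```

Because every statement has the same three-part shape, data from different sources can be merged by simply combining their triple sets, which is the property that the interoperability work discussed in this review relies on.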

Data interoperability can only be improved when the semantics of the content are well defined across heterogeneous data sources. Ontology is one of the semantic tools, which is frequently used to support interoperability and communication between software, communities, and healthcare organizations [ 16 , 17 ]. It is also commonly used to personalize a patient's environment. Kumari et al. [ 18 ] and Haque et al. [ 19 ] proposed an Android-based personalized healthcare monitoring and appointment application that considers the health parameters such as body temperature, blood pressure, and so on to keep track of the patient's health and provide in-home medical services. Some existing ontologies of medicine are Gene, NCI, GALEN, LinkBase, and UMLS [ 20 ]. They have also been used in offering e-healthcare systems based on GPS tracking and user queries. Osama et al. proposed two ontologies for a medical differential diagnosis: disease symptom ontology (DSO) and patient ontology (PO) [ 21 ]. Sreekanth et al. used semantic interoperability to propose an application that brings together different actors in the health insurance sector [ 22 ]. Semantic Web not only enables information system interoperability but also addresses some of the most challenging issues with automated healthcare web service settings. SW combined with AI, IoT, and other technologies has produced a smart healthcare system that enables the standardization and depiction of medical data [ 1 , 23 , 24 ]. In terms of economic efficiency, the Semantic Web-Based Healthcare Framework (SWBHF) is said to benchmark the existing BioMedLib Search Engine [ 25 ]. SW also offered a new user-oriented dataset information resource (DIR) to boost dataset knowledge and health informatics [ 26 ]. This technology is also used in the rigorous registration process to discover, classify, and compose web services for the service owner [ 4 ].
To provide answers to medical questions, it has been integrated with NLP to create RDF datasets and attach them with source text [ 27 ]. Babylon Health, which enables doctors to prescribe medications to patients using mobile applications, has benefited from the spread of semantic technology. Archetypes, ontology, and datasets have been used in web-based methods for diagnosing colorectal cancer screening. Clinical information and knowledge about disease diagnosis are encoded for decision making with the use of ontological understanding and probabilistic reasoning. The integration of pharmaceutical and medical knowledge, as well as IoT-enabled smart cities, has made extensive use of SW technologies [ 8 ]. To put it briefly, this emerging technology has revolutionized the healthcare and medical system.

Despite its relevance, researchers who looked into the benefits of SW efforts showed substantial deficiencies in the wide range of semantic information in the medical and healthcare sectors. To the best of our knowledge, no previous systematic literature review (SLR) has been published on the Semantic Web and none of the research has previously classified the precise application area in which SW can be applied. Furthermore, there was an absence of research questions in the previous literature for analyzing and comparing similar works in order to understand their flaws, strengths, and problems.

In this study, we present a systematic review of the literature on Semantic Web in healthcare, with an emphasis on its application domain. It is absolutely essential to point the SW user community in the right direction for future research, to broaden knowledge on research topics, and to determine which domains of study are essential and must be performed. Thus, the current SLR can help researchers by addressing a number of factors that either limit or encourage medical and healthcare industries to employ Semantic Web technologies. Furthermore, the study also identifies various gaps in the existing literature and suggests future research directions to help resolve them. The research questions (RQs) that this systematic review will seek to answer are as follows. ( RQ1 ) What is the research profile of existing literature on the Semantic Web in the healthcare context? ( RQ2 ) What are the primary objectives of using the Semantic Web, and what are the major areas of medical and healthcare where Semantic Web technologies are adopted? ( RQ3 ) Which Semantic Web technologies are used in the literature, and what are the familiar technologies considered by each solution? ( RQ4 ) What are the evaluating procedures used to assess the efficiency of each solution? ( RQ5 ) What are the research gaps and limitations of the prior literature, and what future research avenues can be derived to advance Web 3.0 or Semantic Web technology in medical and healthcare?

This research contributes in a number of ways. This paper's main focus is the collection of statistical data and analysis results concerning the adoption of SW technologies in the medical and healthcare fields. First, we gathered data from four databases (Scopus, IEEE Xplore Digital Library, ACM Digital Library, and Semantic Scholar) to thoroughly review, analyze, and synthesize past research findings. Furthermore, the current study does not focus on a specific theme; rather, it offers a broad overview of all possible research themes related to the use of SW in healthcare. Finally, this SLR identifies gaps in the existing literature and suggests a future research agenda. The primary contributions of our study are listed as follows:

- To find out the up-to-date research progress of SW technology in medical and healthcare.

- To open up new technical fields in healthcare where SW technologies can be used.

- To identify all the constraints in the healthcare industry during the adoption of SW technologies.

- To identify key future trends for semantics in the healthcare sector.

- To analyze and investigate alternative strategies for ensuring semantic interoperability in the healthcare contexts.

This review paper is organized as follows. Section 1 introduces Semantic Web technologies in healthcare followed by Section 2 which describes the methodology followed, the inclusion/exclusion criteria, and the data extracted and analyzed in this literature review paper. Section 3 elaborately discusses different thematic areas, and Section 4 presents the research gaps to address future research agendas. Section 5 presents a detailed discussion of the specified RQs. Lastly, Section 6 consists of the conclusion for this SLR.

2. Methodology

A systematic review is a research study that examines many publications to answer a specific research question. This study follows such a review to identify, analyze, and interpret all accessible information relevant to recent progress in the literature on Web 3.0 or Semantic Web in medical and healthcare, our phenomenon of interest. In the advancement of medical and healthcare analysis, numerous SLRs have been undertaken with inductive methodologies to identify major themes where Semantic Web technologies are being adopted [ 28 , 29 ]. In our study, we adopted the procedures outlined by Keele with a few important distinctions to assure the study's transferability, dependability, and transparency, emphasizing and documenting the selection method [ 30 ]. The guidelines outlined in that paper were derived from three existing approaches used by medical researchers, two books written by social science researchers, and a discussion with other academics interested in evidence-based practice [ 8 , 31 – 40 ]. The guidelines have been modified to include medical policies in order to address the unique challenges of software engineering research.

Our study sequentially conducted an SLR to accomplish the precise objectives. At first, we planned the necessary approach to identify the problems. Next, we collected related study materials and retrieved data from them. Finally, we documented the findings and carried out the research in the following steps (see Figure 1 ) maintaining its replicability as well as precision.

- Step 1 . Plan the review by finding appropriate research measures to detect corresponding documents.

- Step 2 . Collect analyses by outlining the inclusion and exclusion criteria to assess their applicability.

- Step 3 . Extract relevant data using numerous screening approaches to use accordingly.

- Step 4 . Document the research findings.

Figure 1: SLR methodology and protocols.

2.1. Planning the Review

The very first stage in conducting SLR is to identify the needs for a specific systematic review, outline the research questions, design a procedural review, and offer a study framework to assist the investigation in subsequent phases to identify the systematic review's significant objectives. This phase begins with the identification of needs for the proposed systematic review. Section 1 of this paper went into detail about why a systematic review of Semantic Web technologies in healthcare was deemed necessary. Following that, the definition of research questions, the selection of a synthesis method, initial keywords, and databases are given. To begin, we devised the RQs for this SLR in order to gain a comprehensive understanding of the semantic-based solutions in the field of healthcare. Defining research questions is an important part of conducting a systematic review because they guide the overall review methodology. Based on the objectives, we conducted a pilot study of a systematic review of fifteen sample studies, resulting in the broad application of the Semantic Web to a specific niche, refinement of research questions, and redefinition of the review research protocol. To find relevant scientific contributions for our RQs, we used Scopus, IEEE Xplore Digital Library, ACM Digital Library, and Semantic Scholar. Furthermore, we utilized the primary term “Web 3.0 or Semantic Web” to search the databases and then identified and refined the comprehensive keywords that would be used as search strings. We did not limit our search to a single period; instead, we looked at all linked studies.

2.2. Collecting Analyses

A systematic review's unit of analysis is crucial since it broadens the scope of the overall approach. This study aims to better understand how Web 3.0 or Semantic Web technologies are employed in medical and healthcare settings, as well as to identify the extent to which they have been applied. We have selected academic research articles and journals as the unit of analysis for our SLR. We specified inclusion and exclusion criteria to narrow the investigation in the following study selection process, as shown in Table 1 . To gather our search phrases, we used a nine-step procedure as mentioned in [ 41 ]. The studies obtained from online repositories were compared with exclusion criteria to select peer-reviewed papers and eliminate any non-peer-reviewed studies. To perform this review, we employed decisive exclusion criteria to identify grey literature, which included white papers, theses, project reports, and working papers. To remove language barriers, we only selected papers written in English. We did not consider any review papers or project reports to maintain the quality. Older publications that have never been cited were excluded from the review to explore the potential value of Web 3.0 and SW technologies in medical and healthcare.

Table 1: Criteria for inclusion and exclusion.

| Inclusion criteria (IC) | Exclusion criteria (EC) |
|---|---|
| (IC1) Primary studies | (EC1) Studies not written in English |
| (IC2) Peer-reviewed publications | (EC2) White papers, working papers, positional papers, review papers, short papers (<4 pages), and project reports |
| (IC3) Studies written in the English language | (EC3) Theses, editorials, keynotes, forum conversations, posters, analyses, tutorial overviews, technological articles, and essays |
| (IC4) Research based on empirical evidence (qualitative and quantitative research) | (EC4) Grey literature, i.e., editorials, abstracts, keynotes, and studies without bibliographic information, e.g., publication date/type, volume, and issue number |
| (IC5) Journal articles published through January 22, 2022 | (EC5) Research that does not focus on the SW to support medical and healthcare |
| (IC6) Studies available in full text | |
| (IC7) Studies that focus on the Semantic Web to support medical and healthcare | |
| (IC8) Any published study that has the potential to address at least one research question | |
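The criteria in Table 1 can be read as a single yes/no filter applied to each candidate study. The sketch below expresses that reading in Python. It is not the authors' tooling: the `Study` record and all of its field names are hypothetical, and the only values taken from the text are the cutoff date (January 22, 2022), the English-language requirement, and the four-page minimum.

```python
from dataclasses import dataclass, replace
from datetime import date

# Cutoff date stated in the inclusion criteria.
CUTOFF = date(2022, 1, 22)


@dataclass(frozen=True)
class Study:
    # All field names are hypothetical; they mirror the rows of Table 1.
    primary_study: bool
    peer_reviewed: bool
    language: str
    empirical: bool
    published: date
    full_text_available: bool
    focuses_on_sw_in_healthcare: bool
    addresses_research_question: bool
    pages: int


def is_included(study: Study) -> bool:
    """Return True only if every inclusion criterion holds and the
    page-count exclusion criterion does not apply."""
    return all([
        study.primary_study,
        study.peer_reviewed,
        study.language == "English",
        study.empirical,
        study.published <= CUTOFF,
        study.full_text_available,
        study.focuses_on_sw_in_healthcare,
        study.addresses_research_question,
        study.pages >= 4,  # short papers (<4 pages) are excluded
    ])


candidate = Study(
    primary_study=True,
    peer_reviewed=True,
    language="English",
    empirical=True,
    published=date(2013, 6, 1),
    full_text_available=True,
    focuses_on_sw_in_healthcare=True,
    addresses_research_question=True,
    pages=10,
)
short_paper = replace(candidate, pages=3)

print(is_included(candidate), is_included(short_paper))
```

Encoding the criteria as one conjunction keeps the screening decision reproducible: any single failed criterion is enough to exclude a study.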

2.3. Extracting Relevant Data

Initially, we searched for papers in Google Scholar with the keywords “Web 3.0 in medical and healthcare”. Reviewing the titles and abstracts of the top 50 articles helped us refine these keywords into a more appropriate search string. The final search string (“Semantic Web” OR “Web 3.0”) AND (“Healthcare” OR “medical”) was used in Scopus, IEEE Xplore Digital Library, ACM Digital Library, and Semantic Scholar to find related papers for our SLR on 22 January 2022. We found a total of 4137 papers, including 2237 from IEEE Xplore Digital Library, 1761 from Scopus, 103 from Semantic Scholar, and 36 from ACM Digital Library. The earliest articles retrieved dated back to 2001, so all the identified publications were from 2001 to 2021. Four authors performed the screening in several stages. After each stage, a discussion session was held to finalize the step and move further.
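The Boolean structure of the search string and the reported hit counts can be checked with a few lines of Python. This is a simplified sketch under stated assumptions: `matches_search_string` is a hypothetical helper that does plain case-insensitive substring matching, whereas each database applies its own matching rules, so it will not reproduce the actual result sets. The per-source counts are the ones reported in the text.

```python
def matches_search_string(text: str) -> bool:
    """Evaluate ("Semantic Web" OR "Web 3.0") AND ("Healthcare" OR "medical")
    against a title or abstract using case-insensitive substring tests."""
    lowered = text.lower()
    topic = "semantic web" in lowered or "web 3.0" in lowered
    domain = "healthcare" in lowered or "medical" in lowered
    return topic and domain


# Hit counts per source as reported in the review.
hits_per_source = {
    "IEEE Xplore Digital Library": 2237,
    "Scopus": 1761,
    "Semantic Scholar": 103,
    "ACM Digital Library": 36,
}
total_hits = sum(hits_per_source.values())

print(matches_search_string("A Semantic Web framework for medical records"))
print(matches_search_string("Web 3.0 and e-commerce"))
print(total_hits)
```

The four per-source counts add up to the reported total of 4137 papers.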

At first, we checked for duplicate articles across the indexing databases. We eliminated duplicates by checking the Digital Object Identifier (DOI) and the title of each article. After removing the duplicate articles, we were left with 1923 articles. After that, titles, keywords, and abstracts were read as part of the preliminary screening process. During the screening procedure, articles were divided into three categories: retain, exclude, and suspect. After removing articles unrelated to Web 3.0 or Semantic Web in medical and healthcare, only 1741 articles were retained. Upon analyzing the contents of both suspect and retain studies using the inclusion and exclusion criteria listed in Table 1 , we were left with 343 publications. Following that, we read the full text of the articles that were picked, and we were left with 54 papers being considered for our conclusive stage. Finally, we applied the snowballing strategy, also known as the citation chaining technique [ 42 ]. This step resulted in the addition of another ten studies (seven from backward citation and three from forward citation). The final review pool thus comprised 65 papers being considered for our conclusive stage ( Figure 2 depicts the study selection process in detail).

Figure 2: Study selection process.
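The duplicate-removal step, which compares DOIs and titles, can be sketched as follows. This is an assumed implementation rather than the authors' procedure: the record layout (dictionaries with `doi` and `title` keys), the `normalize_title` rule, and the sample records are all hypothetical.

```python
import re
from typing import Dict, List


def normalize_title(title: str) -> str:
    """Lowercase the title and collapse punctuation and whitespace so that
    trivially different spellings of the same title compare equal."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep the first record for each DOI or normalized title."""
    seen = set()
    unique = []
    for record in records:
        doi = (record.get("doi") or "").strip().lower()
        title = normalize_title(record.get("title") or "")
        keys = set()
        if doi:
            keys.add(("doi", doi))
        if title:
            keys.add(("title", title))
        if keys & seen:
            continue  # already seen under the same DOI or title
        seen |= keys
        unique.append(record)
    return unique


# Hypothetical records exported from different databases.
records = [
    {"doi": "10.1000/abc", "title": "Ontology-Based Care"},
    {"doi": "10.1000/ABC", "title": "Ontology based care (extended)"},
    {"doi": "", "title": "Ontology-based  care"},
    {"doi": "10.1000/xyz", "title": "Linked Data for Hospitals"},
]
unique_records = deduplicate(records)
print(len(unique_records))
```

Matching on either key handles records that lack a DOI in one database but carry the same title in another. Exact matching on normalized titles is a design simplification; fuzzy matching would catch more near-duplicates at the cost of false positives.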

2.4. Document Research Findings

The shortlisted research papers were profiled using descriptive statistics, which include publication year, methodology, and publication sources [ 23 , 43 , 44 ]. In terms of publication chronology, the largest number of research articles was published in 2013. However, between 2018 and 2021, the number declined. Figure 3 depicts the yearly (between 2001 and 2022) distribution of published papers.

The majority of the studies presented a framework for developing a medical data information management system. Web 3.0 technologies appear to be in their early phases of adoption, with scholars only recently becoming interested in the topic. A few other papers discussed medical data interchange mechanisms, diseases, frontier technology such as AI and NLP, and regulatory conditions. More than half of the studies ( n = 39) were published between 2001 and 2012, with the remaining studies ( n = 26) published after that (see Figure 3 ). The Semantic Web theory gained widespread interest after the architect of the World Wide Web, Tim Berners-Lee, together with James Hendler and Ora Lassila, popularized it in a Scientific American article in May 2001 [ 15 ]. This trend also gained momentum in later years, with John Markoff coining the term Web 3.0 in 2006 and Gavin Wood, Ethereum's co-founder, reusing the term in 2014.

Figure 3: Number of articles published yearly.

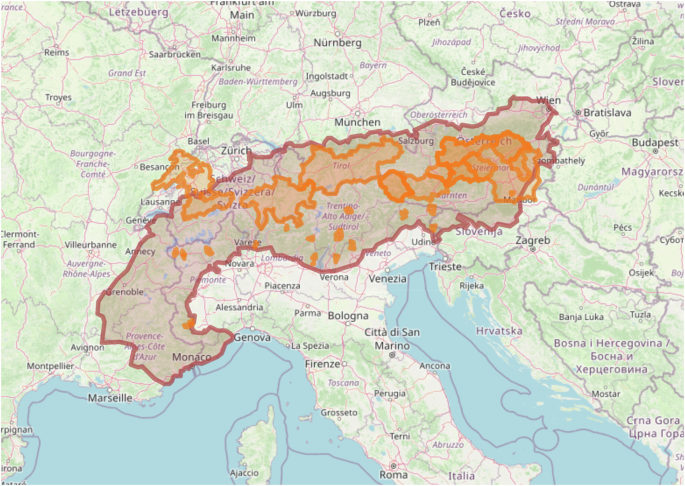

Medical and healthcare studies have been published in several renowned conferences, journals, book series, and events. The 65 shortlisted papers are distributed across 27 conference proceedings, 21 journals, and 17 book series. The descriptive analysis shows that the 65 shortlisted studies were published by 25 publishers, led by Springer ( n = 17), IEEE Xplore ( n = 15), IOS Press ( n = 6), ACM ( n = 5), and Elsevier ( n = 3). Only a few publishers published many studies. The rest included 15 publishers, each of whom published only one study. However, the majority of the papers were published in Lecture Notes in Computer Science (LNCS), CEUR Workshop Proceedings, and Studies in Health Technology and Informatics Series (see Figure 4 ). Furthermore, our SLR demonstrates the wide geographic span of existing research papers. The United States (11 articles), France (23 articles), India (9 articles), Canada (8 articles), Belgium (4 articles), and South Korea (4 articles) all had a significant number of studies. Figure 5 summarizes the past literature's geographical distribution.

Figure 4: Publication-source-wise distribution.

Figure 5: Country-wise article distribution.

According to the systematic literature review, the application of Semantic Web technologies in the field of healthcare is a prominent classical research theme, with many innovative and promising research topics. The number of Semantic Web publications and interest in healthcare has increased rapidly in recent years, and Semantic Web methods, tools, and languages are being used to solve the complex problems that today's healthcare industries face. Semantic Web technology allows comprehensive knowledge management and sharing, as well as semantic interoperability across application, enterprise, and community boundaries. This makes the Semantic Web a viable option for improving healthcare services by improving tasks such as standards and interoperable rich semantic metadata for complex systems, representing patient records, investigating the integration of Internet of Things and artificial computational methods in disease identification, and outlining SW-based security. While there are interesting possibilities for the application of Semantic Web technologies in the healthcare setting, some limitations may explain why those possibilities are less apparent. We believe one reason is a lack of support for developers and researchers. Semantic Web-based healthcare applications should be viewed as independent research prototypes that must be implemented in real-world scenarios rather than as a widget that is integrated with the Web 2.0-based solution. This study discusses the findings and future directions from two different perspectives. First, Section 3 considers the potential applications of Semantic Web technologies in different healthcare scenarios, along with the barriers to their practical application and how to overcome them. Second, Section 4 discusses the scope of research in Semantic Web-enabled healthcare.

3. Analysis of the Selected Articles: Thematic Areas

This section focuses on three key steps: summarizing, comparing, and discussing the shortlisted papers to describe and categorize them into common themes. To systematically analyze all 65 studies, we adopted the technique used in recently published SLRs [ 23 , 43 ]. After identifying and selecting relevant papers that could answer our research questions, we used the content analysis technique to classify, code, and synthesize the findings of those studies. We followed the three-step approach proposed by Erika Hayes et al. to interpret unambiguous and unbiased meaning from the content of text data [ 45 ]. The steps were as follows: (a) the authors assigned categories to each study and a coding scheme created directly and inductively from raw data using valid reasoning and interpretation; (b) the authors immersed themselves in the material and allowed themes to arise from the data to validate or extend categories and coding schemes using directed content analysis; (c) the authors used summative content analysis, which begins with manifest content and then expands to identify hidden meanings and themes in the research areas.

This thematic analysis answers the second research question (RQ2), “What are the primary objectives of using the Semantic Web, and what are the major areas of medical and healthcare where Semantic Web technologies are adopted?”, and this analysis architecture highlights five broad medical and healthcare-related research themes based on their primary contribution (see Table 2 ), notably e-healthcare service, diseases, information management, frontier technology, and regulatory conditions.

Table 2: Derived themes and their descriptions.

| Theme | Description |
|---|---|
| E-healthcare service | E-healthcare services are defined as healthcare services and resources that are improved or supplied over the Internet and other associated technologies to reduce the burden on the patients. |
| Diseases | Diseases include a wide range of illnesses, including dementia, diabetes, chronic disorders, cardiovascular disease, and critical limb ischemia. The objective is to use SWT to integrate medical information and data from various electronic health data sources for efficient diagnosis and clinical services. |
| Information management | Information management in healthcare is the process of gathering, evaluating, and preserving medical data required for providing high-quality healthcare management systems. In this thematic area, we discuss how SWT can be used to develop the management of massive healthcare data. |
| Frontier technology | In a broad sense, frontier technology in healthcare refers to technologies such as artificial intelligence, various spectrum of IoT, augmented reality, and genomics that are pushing the boundaries of technological capabilities and adoption. Only the works of scholars who collaborated with Semantic Web and frontier technologies to meet healthcare demand are included in this category. |
| Regulatory conditions | Regulatory conditions refer to the activities that aim to develop adequate underlying motives and beliefs, guidelines, and healthcare protocols across healthcare facilities and systems. This section's research focuses on the improvement of good practice and clinical norms using SWT for documenting the semantics of medical and healthcare data and resources. |

Two themes, namely, IoT and cloud computing, were nevertheless left out since they lack a wide description that would be useful in developing a meaningful theme. Some of the papers from which we defined these two thematic areas were included in the selected themes based on their similarity to the chosen thematic areas. Figure 6 illustrates this categorization, with different themes' description, which emerged from our review.

Figure 6: Thematic description of Semantic Web approaches in medical and healthcare.

3.1. E-Healthcare Service

The use of various technologies to provide healthcare support is known as e-service in healthcare or e-healthcare service. While staying at home, a person can obtain all the necessary medical information as well as a variety of healthcare services such as disease reasoning, medication, and recommendation through e-healthcare services. It is similar to a door-to-door service. The Semantic Web or Web 3.0 plays a critical role in this regard. The Semantic Web offers a variety of technologies, including semantic interoperability, semantic reasoning, and morphological variation that can be used to create a variety of frameworks that improve e-healthcare services.

SW makes sharing medical information among healthcare experts easier and more efficient [ 2 , 46 – 48 ]. A dataset information resource for medical knowledge makes this work easier and faster. A healthcare dataset information resource has been created along with a question-answering module related to health information [ 26 ]. Combining different databases can be more effective because it expands the range of available knowledge. In this respect, Barisevičius et al. [ 49 ] designed a medical linked data graph that combines different medical databases, and they also developed a chatbot using NLP-based knowledge extraction that provides healthcare services by supplying knowledge about various medical topics. Besides information sharing and database combining, Semantic Web-based frameworks can provide virtual medical and hospital-based services. A system has been created that provides medical health planning according to the patient's information [ 50 ]. In this context, a system that can match patient requirements with the available services could be very helpful. Such a matchmaking system has been developed to match web services with the patient's requirements for medical appointments [ 51 ]. To provide hospital-based services, a Semantic Web-based dynamic healthcare system was developed using ontologies [ 17 ]. Disease reasoning is a vital task for e-healthcare services. A number of frameworks were developed for reasoning about diseases [ 49 , 52 , 53 ]. In addition, some authors implemented systems that provide support for sequential decision making [ 54 – 57 ]. Moreover, Mohammed and Benlamri [ 21 ] designed a system that could help to prescribe differential diagnosis recommendations. Grouping patients with similar diagnoses can be useful to enhance the medication process. In this regard, Fernández-Breis et al. [ 58 ] created a framework to group the patients by identifying patient cohorts. Moreover, Kiourtis et al. [ 59 ] proposed a new device-to-device (D2D) protocol for short-distance health data exchange between a healthcare professional and a citizen utilizing a sequence of Bluetooth communications. Supplying medical information to people is one of the main tasks of e-healthcare services [ 58 ]. Before proceeding with a medical diagnosis, we need to be sure about the correctness of the procedure. Andreasik et al. [ 60 ] developed a Semantic Web-based framework to determine the correctness of medical procedures. Various systems for medical education were developed using Semantic Web technologies, such as a web service delivery system [ 4 ], a web service searching system [ 61 ], and an e-learning framework for patients to learn about different medical information [ 62 , 63 ]. Some articles discussed rule-based approaches for the advancement of medical applications [ 64 , 65 ]. Quality assurance of Semantic Web services is necessary, and so a framework was created using a Semantic Web-based replacement policy to assure the quality of a set of services and replace it with a newly defined subset of services when the existing one fails in execution [ 3 ]. A framework was designed for Semantic Web-based data representation [ 66 ]. Meilender et al. [ 67 ] described the migration of Web 2.0 data into Semantic Web data for the ease of further advancement in Web 3.0.

Researchers used different Semantic Web services to convert relational databases into Resource Description Framework (RDF) and Web Ontology Language (OWL)-based ontologies. This is done by extracting the instances from the relational databases and representing them as RDF datasets [ 21 , 55 , 57 , 62 ]. In the prior literature, many RDF datasets were created using Apache JENA 4.0 [ 4 ], different versions of Protégé were used to construct and represent various healthcare ontologies [ 2 , 17 ], the Apache Jena framework was used for OWL reasoning on the RDF datasets [ 50 , 53 ], and the EYE engine was used for reasoning [ 54 ]. Besides, Kiourtis et al. [ 68 ] developed a technique for converting healthcare data into its equivalent HL7 FHIR structure, which principally corresponds to the most used data structures for describing healthcare information. Furthermore, a sublanguage of F-logic named Frame Logic for Semantic Web Services (FLOG4SWS) and web services along with some features of Flora-2 were used to represent the ontology [ 51 ]. The authors of some papers used RDF and OWL for data representation of different ontologies [ 50 , 52 , 54 , 66 ]. Mohammed and Benlamri [ 21 ] offered a number of Semantic Web strategies for ontology alignment, such as ontology matching and ontology linking, and others used ontology mapping for the ontology alignment [ 58 , 66 ]. By combining RDF and semantic mapping features, Perumal et al. [ 69 ] provided a translation mechanism for healthcare event data along with Semantic Web Services and decision making. In addition, a linked data graph (LDG) was utilized to combine numerous publicly available medical data sources using RDF converters [ 49 ]. The works in [ 52 , 54 ] used Notation3 for data mapping. SPARQL was used as the query language for the database [ 2 , 17 , 50 , 52 , 57 ]. Besides, the Jena API was also used for querying [ 21 ]. The Semantic Web's rules and logic were expressed in terms of OWL concepts using the Semantic Web Rule Language (SWRL) [ 55 , 57 ]. TopBraid Composer was used as the Semantic Web modeling tool [ 60 ].
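To make the relational-to-RDF step more concrete, the following is a minimal sketch that uses only the Python standard library rather than the Jena or Protégé tooling used in the reviewed studies. The `patient` table, its columns, and the `http://example.org/` namespace are hypothetical and chosen purely for illustration. Each row is turned into subject-predicate-object triples, and a single triple pattern is then matched in the way a basic SPARQL graph pattern would be.

```python
import sqlite3

BASE = "http://example.org/"  # hypothetical namespace, not taken from the reviewed papers


def build_demo_db():
    """Create an in-memory relational table with two illustrative patient rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE patient (id INTEGER PRIMARY KEY, name TEXT, diagnosis TEXT)")
    conn.executemany(
        "INSERT INTO patient VALUES (?, ?, ?)",
        [(1, "Alice", "diabetes"), (2, "Bob", "dementia")],
    )
    return conn


def rows_to_triples(conn):
    """Represent each row of the hypothetical `patient` table as RDF-style triples."""
    triples = []
    query = "SELECT id, name, diagnosis FROM patient ORDER BY id"
    for pid, name, diagnosis in conn.execute(query):
        subject = f"{BASE}patient/{pid}"
        triples.append((subject, f"{BASE}name", name))
        triples.append((subject, f"{BASE}diagnosis", diagnosis))
    return triples


def match(triples, s=None, p=None, o=None):
    """Return triples matching one pattern; None plays the role of a SPARQL variable."""
    return [
        t for t in triples
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    ]


def to_ntriples(triples):
    """Serialize triples as N-Triples lines, treating every object as a plain literal."""
    lines = []
    for s, p, o in triples:
        escaped = o.replace("\\", "\\\\").replace('"', '\\"')
        lines.append(f'<{s}> <{p}> "{escaped}" .')
    return "\n".join(lines)


if __name__ == "__main__":
    demo_triples = rows_to_triples(build_demo_db())
    print(to_ntriples(demo_triples))
    print(match(demo_triples, p=f"{BASE}diagnosis", o="diabetes"))
```

A production system would normally rely on an RDF library and a SPARQL engine; the sketch only shows the shape of the data before and after conversion.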

There was no proof that the system created using semantic networks was able to share knowledge among healthcare services [ 2 , 46 , 48 ]. Researchers did not mention how a system can be integrated with different types of datasets in the world [ 2 , 47 ]. In their paper, Ramasamy et al. [ 3 ] did not mention whether the system could replace all types of services or not. Shi et al. [ 26 ] did not discuss the success rate of the datasets in their dataset information resource and the accuracies of different systems created with these datasets. No proper evaluation techniques have been given for linked data graph [ 49 ], Semantic Web service delivery systems [ 4 , 50 ], and Semantic Web reasoning system [ 52 , 53 ] in their studies. There is no discussion of the reliability and validity of numerous decision making and recommendation systems [ 21 , 54 , 70 ]. Podgorelec and Gradišnik [ 64 ] did not provide information about the betterment of the combined Semantic Web technologies and rule-based systems against other alternatives. Most of the articles discussed or offered various techniques to build different healthcare services, but there are only a few articles that implemented the proposed systems and tested them in a real-life context.

3.2. Diseases

This thematic area aims to specifically identify and discuss the contributions of Semantic Web technologies to reach interoperability of information in the healthcare sector and aid in the initial detection and nursing of diseases, such as diabetes, chronic conditions, cardiovascular disease, dementia, and so on. SW provides a framework to integrate medical knowledge and data for effective diagnosis and clinical service. They help to select patients, recognize drug effects, and analyze results by using electronic health data from numerous sources. The queryMed packages were proposed for pharmaco-epidemiologists that link medical and pharmacological knowledge with electronic health records [ 10 ]. This application searches for people with critical limb ischemia (CLI) with at least one medication or none at all and gives them healthcare recommendations. SW also emphasizes the study of phenotypes and their influence on personal genomics. The Mayo Clinic's project, Linked Clinical Data (LCD), facilitates the use of SW and makes it easier to extract and express phenotypes from electronic medical records [ 71 ]. It also emphasizes the use of semantic reasoning for the identification of cardiovascular diseases. Besides this, it aims to improve healthcare service quality for people suffering from chronic conditions. Proper planning and management are required for the better treatment and management of chronic diseases. Thus, the Chronic Care Model (CCM) provides knowledge-based acquisition to patients [ 72 ].

Ontology-based applications such as the Concept Unique Identifier (CUI) from Unified Medical Language System, Drug Indication Database (DID), and Drug Interaction Knowledge Base (DIKB) are widely used in the medical domain to establish mappings between medical terms [ 10 ]. In the context of ontology, the ECOIN framework uses a single ontology, multiple view approach that exploits modifiers and conversion functions for context mediation between different data sources [ 73 ]. To support clinical knowledge sharing through interaction models, the OpenKnowledge project has been initiated from different data sources [ 9 ], and K-MORPH architecture has been proposed for a unified prostate cancer clinical pathway.

Along with information sharing, medical data management is critical in the diagnosis of disorders like dementia. To establish a better diagnosis method for dementia, a medical information management system (MIMS) was designed using SW technologies through the extraction of metadata from medical databases [ 74 , 75 ]. In order to further eliminate the e-health information and knowledge sharing crisis, Bai and Zhang [ 76 ] suggested Integrated Mobile Information System (IMIS) for healthcare. It provides a platform to connect diabetic patients with care providers to receive proper treatment and diagnosis facilities at home. The Diabetes Healthcare Knowledge Management project also aims to ease decision support and clinical data management in diabetes healthcare processes [ 72 ].

To construct decision models for the Diabetes Healthcare Knowledge Management framework, tools such as Semantic Web Rule Language (SWRL), OWL, and RDF were used. This ontology-based knowledge framework provides ontologies, patient registries, and an evidence-based healthcare resource repository for chronic care services [ 72 ]. Web Ontology Language (OWL), Resource Description Framework (RDF), and SPARQL were also commonly used for the creation of metadata in dementia diagnosis [ 77 ]. On the other hand, the Semantic Web-based retrieval system for the pathology project, known as “A Semantic Web for Pathology,” involves building and managing an ontology for lungs. The ontology was built with the common semantic tools RDF and OWL, which were used along with the RDQL query language [ 20 ].
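As an illustration of how a SWRL-style rule can drive a decision model, the sketch below implements naive forward chaining over triples in plain Python. It is not the rule base of any reviewed framework: the facts, the predicate names (`hasDiagnosis`, `hasFinding`, `flaggedFor`), and the rule itself are hypothetical and carry no clinical authority. Terms beginning with `?` are variables, as in SWRL.

```python
def unify(pattern, fact, bindings):
    """Extend `bindings` so that `pattern` equals `fact`; return None on mismatch."""
    new = dict(bindings)
    for term, value in zip(pattern, fact):
        if term.startswith("?"):
            if new.get(term, value) != value:
                return None  # variable already bound to a different value
            new[term] = value
        elif term != value:
            return None  # constant does not match
    return new


def match_body(body, facts, bindings=None):
    """Yield every variable binding that satisfies all patterns in the rule body."""
    bindings = bindings or {}
    if not body:
        yield bindings
        return
    for fact in facts:
        extended = unify(body[0], fact, bindings)
        if extended is not None:
            yield from match_body(body[1:], facts, extended)


def forward_chain(facts, rules):
    """Apply the rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            # Materialize the matches first so `known` is not modified while iterated.
            for binding in list(match_body(body, known)):
                derived = tuple(binding.get(term, term) for term in head)
                if derived not in known:
                    known.add(derived)
                    changed = True
    return known


# Hypothetical facts and rule, for illustration only.
FACTS = [
    ("p1", "hasDiagnosis", "diabetes"),
    ("p1", "hasFinding", "hypertension"),
    ("p2", "hasDiagnosis", "diabetes"),
]
RULES = [
    (
        [("?p", "hasDiagnosis", "diabetes"), ("?p", "hasFinding", "hypertension")],
        ("?p", "flaggedFor", "care_review"),
    ),
]

if __name__ == "__main__":
    for triple in sorted(forward_chain(FACTS, RULES)):
        print(triple)
```

Real SWRL rules are evaluated by an OWL reasoner against an ontology; the sketch only conveys the idea that a rule body is matched against known facts and the rule head is added as a new fact.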

Even though effective frameworks were proposed to diagnose certain diseases, research gaps still exist that affect medical data management. For instance, the fuzzy technique-based service-oriented architecture has proved to be beneficial in terms of adjustability and reliability. But still, in the context of domain-specific ontologies, the applicability of this architecture is yet to be validated [ 78 ]. Effective distribution of knowledge into the existing healthcare system is a huge challenge in augmenting decision making and improving the care service quality. Therefore, future works are intended to focus on embedding knowledge and conducting user evaluations for better disease management.

3.3. Information Management

Managing patients' information and storing test results are significant tasks in the medical and healthcare industries. The application of the SW-based approach in this area can make an influential impact on this data organization. Such an approach to gather valuable and new medical information was primarily made by creating a network of computers [ 79 ]. Domain ontology was created according to the user's choice, suggesting medical terminologies to retrieve customized medical information [ 80 ]. RDF datasets can be used to find the trustworthiness of intensive care unit (ICU) medical data [ 70 ]. The SW has also been used to document healthcare video contents [ 81 ] and radiological images to provide appropriate information about those records [ 82 ].

There were several reasons for moving from conventional web-based information management to the Semantic Web. As medical knowledge needs to be verified and shared across hospitals and medical centers, introducing the Semantic Web approach helped to achieve a proper mapping system [ 83 ]. A medical discussion forum based on the SW helped healthcare practitioners to exchange valuable data and to map related information in the dataset [ 84 ]. The use of the fuzzy cognitive system in the SW also helped to share and reuse knowledge from databases and simplify maintenance [ 85 ]. This methodology also helped to improve data integration, analysis, and sharing between clinical and information systems and researchers [ 86 ]. Moving towards this approach also aided the researchers in connecting different data storage domains and creating effective mapping graphs [ 87 ].

Though the approach of SW in healthcare has a broad area, most applications are pretty similar. The framework mainly proposed the use of RDF, SPARQL, and OWL [ 4 , 76 ]. Link relevance methods were used to produce semantically relevant results to extract pertinent information from domain knowledge [ 49 ]. Ontology-based logical framework procedures and SMS architecture helped to organize the heterogeneous domain network [ 88 , 89 ].

Evaluating the system's performance is necessary to get the actual results. A Health Level 7 (HL7) messaging mechanism has been developed for mapping the generated Web Service Modeling Ontology [ 90 ]. However, there were some issues regarding the heterogeneity problem. JavaSIG API was used to generate the HL7 message to resolve these issues [ 91 ]. Some of the evaluation tools are not advanced enough to handle vast amounts of data. PMCEPT physics algorithms were used to verify the algorithm [ 92 ]. Abidi and Hussain [ 9 ] created two levels to characterize different ontological models to establish morphing. BioMedLib Search Engine creation for economic efficiency helped to develop a Semantic Web framework for rural people [ 25 ]. The Metamorphosis installation wizard converted the text format UMLS into a MySQL database UMLS in order to access a SPARQL endpoint [ 93 ].

However, the frameworks proposed in different studies were not fully implemented, which leaves a gap in each framework. Some frameworks are proposed to integrate with the blockchain for additional security and privacy [ 23 , 94 – 96 ]. AI and IoT integration can also enhance system maintenance [ 1 ]. Hussain et al. [ 97 ] suggested a framework named Electronic Health Record for Clinical Research (EHR4CR), but they did not report any actual results from this framework in the real world. Implementation results for the proposed frameworks would support further development in this area.

3.4. Frontier Technology

In this segment, we critically analyze works that are primarily keen on how cutting-edge technologies like AI and computer vision can be applied to the medical field with the continuous advancement of science and technology. Semantic Web-enabled intelligent systems leverage a knowledge base and a reasoning engine to solve problems, and they can help healthcare professionals with diagnosis and therapy. They can assist with medical training in a resource-constrained environment. To illustrate, Haque et al. [ 8 ], Chondrogiannis et al. [ 98 ], Haque and Bhushan [ 99 ], and Haque et al. [ 24 ] created a secure, fast, and decentralized application that uses blockchain technologies to allow users and health insurance organizations to reach an agreement during the implementation of the healthcare insurance policies in each contract. To preserve the formal expression of both insured users' data and contract terms, health standards and Semantic Web technologies were used. Accordingly, significant work has been proposed by Tamilarasi and Shanmugam [ 100 ] which explores the relationship between the Semantic Web, machine learning, deep learning, and computer vision in the context of medical informatics and introduces a few areas of applications of machine learning and deep learning algorithms. This study also presents a hypothesis on how image as ontology can be used in medical informatics and how ontology-based deep learning models can help in the advancement of computer vision.

The real-world healthcare datasets are prone to missing, inconsistent, and noisy data due to their heterogeneous nature. Machine learning and data mining algorithms would fail to identify patterns effectively in this noisy data, resulting in low accuracy. To get these high-quality data, data preprocessing is essential. Besides, RDF datasets representing healthcare knowledge graphs are very important in data mining and integrating IoT data with machine learning applications [ 8 , 101 ]. RDF datasets are made up of a distinguishable RDF graph and zero or more named graphs, which are pairings of an IRI or blank node with an RDF graph. While RDF graphs have formal model-theoretic semantics that indicate which world configurations make an RDF graph true, there are no formal semantics for RDF datasets. Unlike traditional tabular format datasets, RDF datasets require a declarative SPARQL query language to match graph patterns to RDF triples, which makes data preprocessing more crucial. In the context of data preprocessing, Monika and Raju [ 101 ] proposed a cluster-based missing value imputation (CMVI) preprocessing strategy for preparing raw data to enhance the imputed data quality of a diabetes ontology graph. The data quality evaluation metrics R2, D2, and root mean square error (RMSE) were used to assess simulated missing values.
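The evaluation idea described above, hiding known values, imputing them, and scoring the imputed values against the hidden ground truth, can be sketched as follows. This is not the CMVI algorithm of Monika and Raju: cluster labels are assumed to be given, missing values are simply replaced by the cluster mean, and the field names and glucose readings are hypothetical. Only RMSE and the coefficient of determination (R2) are computed; D2 is omitted.

```python
import math
from collections import defaultdict


def cluster_mean_impute(records, cluster_key, value_key):
    """Replace missing (None) values with the mean of observed values in the same cluster."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for rec in records:
        if rec[value_key] is not None:
            sums[rec[cluster_key]] += rec[value_key]
            counts[rec[cluster_key]] += 1
    total = sum(sums.values())
    n_observed = sum(counts.values())
    imputed = []
    for rec in records:
        filled = dict(rec)
        if filled[value_key] is None:
            cluster = rec[cluster_key]
            if counts[cluster] > 0:
                filled[value_key] = sums[cluster] / counts[cluster]
            else:
                # Fall back to the overall mean when a cluster has no observed value.
                filled[value_key] = total / n_observed
        imputed.append(filled)
    return imputed


def rmse(actual, predicted):
    """Root mean square error between true and imputed values."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(actual))


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical readings; two values are hidden to simulate missing data.
RECORDS = [
    {"cluster": "A", "glucose": 100.0},
    {"cluster": "A", "glucose": 110.0},
    {"cluster": "A", "glucose": None},   # true value 105.0
    {"cluster": "B", "glucose": 150.0},
    {"cluster": "B", "glucose": 170.0},
    {"cluster": "B", "glucose": None},   # true value 155.0
]
TRUE_VALUES = [105.0, 155.0]

if __name__ == "__main__":
    filled = cluster_mean_impute(RECORDS, "cluster", "glucose")
    estimates = [filled[2]["glucose"], filled[5]["glucose"]]
    print(estimates, rmse(TRUE_VALUES, estimates), r_squared(TRUE_VALUES, estimates))
```

In a knowledge-graph setting the records would first be retrieved from the RDF data, for example with a SPARQL query, before any such preprocessing is applied.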

Nowadays, question-answering (QA) systems (e.g., chatbots and forums) are becoming increasingly popular in providing digital healthcare. In order to retrieve the required information, such systems require in-depth analysis of both user queries and records. NLP is an underlying technology, which converts unstructured text into standardized data to increase the accuracy and reliability of electronic health records. A Semantic Web application has been deployed for question-answering using NLP where users can ask questions about health-related information [ 27 ]. In addition, this study introduces a novel query simplification methodology for question-answering systems, which overcomes issues or limitations in existing NLP methodologies (e.g., implicit information and need for reasoning).

The majority of contributions to this category have organized their work using semantic languages on a smaller scale. Besides, it is noteworthy that hardly any of the approaches, except [ 27 , 101 ], adopted a framework for developing their models. Asma Ben et al. used a benchmark (corpus for evidence-based medicine summarization) to evaluate the question-answering (QA) system and analyzed the obtained outcomes [ 27 ]. Some studies have not included a prior literature review for the discovery of available frontier services [ 100 ]. In addition, the study shows that with the soaring demand for better, speedier, more accurate, and personalized patient treatment, deep learning powered models in production are becoming increasingly prevalent. Often these models are not easily explainable and are prone to biases. Explainable AI (XAI) has grown in popularity in healthcare due to its success in explaining the decision-making criteria of systems, reducing unintended outcomes and bias, and assisting in gaining patients' trust, even when making life-or-death decisions [ 102 ]. To the best of our knowledge, XAI has attracted attention in ontology-based data management but has received relatively little attention in combination with Semantic Web technologies across healthcare, biomedical, clinical research, and genomic medicine. Similarly, within the IoT system spectrum, relatively little attention has been paid to invoking semantic knowledge and logic across Medical Internet of Things (MIoT) applications that gather vast amounts of data, monitor vital body parameters, and collect detailed information from sensors and other connected devices while maintaining safety, data confidentiality, and service availability.

3.5. Regulatory Conditions

This segment concentrates on Semantic Web-based tools, technologies, and terminologies for documenting the semantics of medical and healthcare data and resources. As the healthcare industries generate a massive amount of heterogeneous data on a global scale, the use of a knowledge-based ontology on such data can reduce mortality rate and healthcare costs and also facilitate early detection of contagious diseases. Besides, the SW provides a single platform for sharing and reusing data across apps, companies, and communities. The biomedical community has specific requirements for the Semantic Web of the future. There are a variety of languages that can be used to formalize ontologies for medical healthcare, each with its expressiveness. A collaborative effort led by W3C, involving many research and industrial partners, set the requirements of medical ontologies. A real ontology of brain cortex anatomy has been used to assess the requirements stated by W3C in two available languages at that time, Protégé and DAML + OIL [ 103 ]. The development and comparative analysis contexts of brain cortex anatomy ontologies are partially addressed in this. In 2019, a survey-based study was conducted to determine faculty and researcher usage, impact, and satisfaction with Web 3.0 networking sites on medical academic performance [ 104 ]. This study explores the awareness and willingness to implement Web 3.0 technologies within healthcare at Rajiv Gandhi University of Health Sciences. The results of this study imply that Web 3.0 technologies have an impact on professor and researcher academic performance, with those who are tech-savvy being disproportionately found in high-income groups [ 104 ].

Documentation of semantic tools and data is required to resolve healthcare reimbursement challenges. Besides, regulations are also necessary to standardize semantic tools while ensuring that healthcare communities and systems adhere to general health policies. Unfortunately, we found only a few works focusing on this challenge based on SWT. Only the study conducted by Sugihartati [ 104 ] adopted a proper survey methodology. Therefore, future efforts should focus on regulating, documenting, and standardizing semantic tools, technologies, and health resources, as well as conducting user evaluations to understand and optimize functional efficiency and accelerate market access for medicines for general health.

Tables 3–5 provide a detailed analysis of the studied works for the derived five categories.

Summarization of the research contribution of the selected articles.

| Themes | Contributions |
|---|---|
| E-service | (i) An ontology-based semantic server for healthcare organizations to exchange information among them [ ]. (ii) Discussed healthcare data interoperability and integration plan of the solution [ ]. (iii) Used Semantic Web terms (SWT) to provide oral medicine knowledge and information [ ] and to build a decision support system [ ]. (iv) Developed a prototype that generates the desired reports using a high degree of data integration and discussed a production rule-based approach to establish a link between prevalent diseases and the range of the diseases in a particular gene [ ]. (v) Represented global ontology via bridge methods to avoid conflicts among different local ontologies [ ]. (vi) Implemented a WSMO (Web Service Modeling Ontology) automated service delivery system [ ]. (vii) Designed a system for automatic alignment of user-defined EHR (electronic health record) workflows [ ]. (viii) Proposed an upper-level-ontological service providing a mechanism to provide integrity constraints of data and to improve the usability of the medical linked data graph (LDG) services [ ]. (ix) Developed a chatbot and a triaging system that provides information about diseases, screens users' problems, and sorts patients into groups based on the user's needs [ ]. (x) Developed a healthcare dataset information resource (DIR) to hold dataset information and respond to parameterized questions [ ]. (xi) A healthcare service framework that coordinates web services to locate the closest hospital, ambulance service, pharmacy, and laboratory during an emergency [ ]. (xii) Used web service replacement policy to build a Semantic Web service composition model which replaces a set of services with a generated service subset when the previous set of services fails in execution [ ]. (xiii) Proposed ontology-based data linking to understand and extract medical information more precisely [ ]. (xiv) Integrated knowledge with clinical practice to provide guidelines in medicine [ ]. (xv) An abstraction method that converts XML-type medical information to RDF and OWL to create electronic health record (EHR) architecture for the identification of patient cohorts [ ]. (xvi) Designed a platform for solving complex medical tasks by interpreting algorithms and meta-components [ ]. (xvii) Provided a strategy for suggestions in view of clients' likeness figuring and exhibited the adequacy of the model suggested through configuration, execution, and examination in social learning environments [ ]. (xviii) Constructed semantic relationships of input and output medical-related parameters to resolve conflicts and algorithms that remove the redundancy of web service paths [ ]. (xix) Used a management time and run time subsystem to discover the potential web services [ ]. (xx) Integrated weak inferring with a single and explanation-based generalization to leverage the complementary strengths [ ]. |
| Diseases | (i) Created an ontology to build and manage information about a particular disease [ ]. (ii) Developed a web-based prototype of Integrated Mobile Information System for healthcare of diabetic patients [ ]. (iii) Implemented embedded feedback between users and designers and communication mechanisms between patients and care providers [ ]. (iv) QueryMed package made the integration of clinical and pharmacological information that is used to distinguish all the medications endorsed for critical limb ischemia (CLI) and to recognize one contraindicated solution for one patient [ ]. (v) A semantics-driven system based on EMRs that can break down multifactorial phenotypes, like peripheral arterial disease and coronary heart disease [ ]. (vi) Discussed a way to deal with a unified prostate cancer clinical pathway by incorporating three different clinical pathways: Halifax pathway, Calgary pathway, and Winnipeg pathway [ ]. (vii) Demonstrated the achievability and tolerability of a distributed web-oriented environment as an effective study and approval technique for characterizing a real-life setting [ ]. |
| Information management | (i) Proposed a brief process of integration for interoperability and scalability to create an ontology of inflammation [ ]. (ii) Discussed an indexing mechanism to extract attributes from an audio-visual web system [ ]. (iii) Developed ontology-enabled security enforcement for hospital data security [ ]. (iv) Semantic Web mining-based ontologies allow medical practitioners to have better access to the databases of the latest diseases and other information [ ]. (v) Proposed a medical knowledge morphing system to focus on ontology-based knowledge articulation and morphing of diverse information through logic-based reasoning with ontology mediation [ ]. (vi) The annotation image (AIM) ontology was created to give essential semantic information within photos, allowing radiological images to be mined for image patterns that predict the biological properties of the structures they include [ ]. (vii) Described a semantic data architecture where an accumulative approach was used to append data sources [ ]. (viii) Implemented a functional web-based remote MC system and PMCEPT code system, as well as a description of how to use a beam phase space dataset for dosimetric and radiation therapy planning [ ]. (ix) Discussed an approach using Notation3 (N3) over RDF to present a generic approach to formalizing medical knowledge [ ]. (x) It was demonstrated that in the healthcare domain, knowledge management approaches and the synergy of social media networks may be used as a foundation for the creation of information system (IS). This helps to optimize data flow in healthcare processes and provides synchronized knowledge for better healthcare decision making (cardiac diseases) [ ]. (xi) Using semantic mining principles, the authors described a technique for minimizing information asymmetry in the healthcare insurance sector to assist clients in understanding healthcare insurance plans and terms and conditions [ ]. (xii) Discussed a mapping-based approach to generate Web Service Modeling Ontology (WSMO) description from HL7 (Health Level 7) V3 specification where Messaging Modeling Ontology (MMO) is mapped with WSMO [ ]. (xiii) Designed a web crawler-based search engine to gather medical information as per patients' needs [ ]. (xiv) A framework where patients can get relevant medical information from a personalized database, where the patient's medical history and current health condition are captured and then analyzed to search for particular information regarding the patient's needs [ ]. (xv) Demonstrated an Electronic Health Record for Clinical Research (EHR4CR) semantic interoperability approach for bridging the clinical care and clinical research domains [ ]. (xvi) SNOMED-CT ontologies were used to map big laboratory datasets with metadata in the form of clinical concepts [ ]. (xvii) An online medical discussion forum where practitioners can start a topic-specific discussion and then the platform analyzes centrality measurements and semantic similarity metrics to find the most prominent practitioners in a discussion forum [ ]. (xviii) Developed a UMLS-OWL conversion system to translate UMLS content into an OWL 2 ontology that can be queried and inferred via a SPARQL endpoint [ ]. (xix) Researchers used SPA to detect illness and connect to the most excellent specialist. Besides, they recounted a schema representing a database query enabling doctors to pick and determine the most suitable EHR and patient data in healthcare scenarios [ ]. (xx) The Semantic Web, blockchain, and Graph DB were combined to provide a patient-centric perspective on healthcare data in a cooperative healthcare system [ ]. |
| Frontier technology | (i) A cluster-based missing value imputation (CMVI) preprocessing strategy for preparing raw data is designed to enhance the imputed data quality of a diabetes ontology graph [ ]. (ii) Presented hypotheses on how image as ontology can be used in medical informatics and how ontology-based deep learning models can help computer vision [ ]. (iii) Discussed a deep learning technique called the ontology-based restricted Boltzmann machine (ORBM) that can be used to gain an understanding of electronic health records (EHRs) [ ]. (iv) Developed a Semantic Web app for question-answering using NLP where users can question about health-related information [ ]. |
| Regulatory conditions | (i) One needs DAML + OIL to express sophisticated taxonomic knowledge, and rules should aid in the definition of dependencies between relations and use predicates of arbitrary, while metaclasses may be useful in taking advantage of current medical standards [ ]. (ii) Described using Web 3.0-based social application for medical knowledge and communication with others and with faculty members [ ]. (iii) The impact of Web 3.0 awareness on the academic performance of Rajiv Gandhi University of Health Sciences faculty and researchers was investigated [ ]. (iv) Users can insert structured clinical information in the domains using SNOMED-CT terms [ ]. (v) Demonstrated the congruence between health informatics and Semantic Web standards, obstacles in representing Semantic Web data, and barriers in using Semantic Web technology for web service [ ]. (vi) The significant qualities of a Semantic Web language for medical ontologies were discussed [ ]. |

Summarization of the research gaps and future research avenues.

| Themes | Research gaps |
|---|---|
| E-service | (i) No interoperable healthcare system has yet been deployed [ ]. (ii) Researchers have not yet looked into the policy limits of video as ontologies at an organizational level [ ]. (iii) Prior research has focused solely on the limitations and policies of expanding an existing healthcare delivery system to directly recommend medications to users without the assistance of medical professionals [ ]. (iv) Scholars are yet to investigate how Web 3.0 can be used to promote education through resource sharing [ ]. (v) There is a dearth of studies on the role of existing healthcare applications in detecting patient severity levels based on the health data collected from patients [ ]. (vi) No prior studies took into account a system that can automatically determine, choose, and compose web services [ ]. (vii) The challenges in big data connectivity into RDF, as well as privacy and security concerns, were not addressed [ ]. (viii) The extant literature includes only a few examples where researchers have developed a systematic clinical validation system based on the study [ ]. (ix) The prior literature has yet to identify ways to improve the similarity score between service parameters using statistics-based strategies and natural language processing techniques [ ]. (x) No previous studies on the Internet of Things domain considered the semantic interoperability assessment between healthcare data, services, and applications [ ]. |
| Diseases | (i) No previous work had proposed an ontology for a healthcare system to efficiently store ontological data with proper evaluation criteria that meet W3C standards [ ]. (ii) Researchers are yet to put into practice a full-featured Integrated Mobile Information System for diabetic healthcare [ ]. (iii) No prior work has been done on expanding the set of prebuilt queries of a particular disease to handle a wide range of use cases through possible linked data evolutions [ ]. (iv) Earlier studies did not consider mapping the triplets of one disease RDF to other existing medical services, applications, and administrations in order to conduct client assessments [ ]. (v) There is a deficit of research on the development of intelligent user interfaces that understand the semantics of clinical data [ ]. |
| Information management | (i) Knowledge gap in the current research in indexing higher-quality videos for better attribute extraction [ ]. (ii) Indexing strategy for retrieving attributes from an audio-visual web system is yet to be addressed [ ]. (iii) Need for a greater understanding of Semantic Web applications related to web mining to build ontologies for healthcare websites [ ]. (iv) Prior literature addressed only modeling and annotation for a specific disease such as urinary tract infection diseases. The literature is yet to identify methods for generalizing clinical application models [ ]. (v) No studies on the asymmetry minimization system take into account both the insurer's and the existing patient's perspectives [ ]. (vi) The literature is yet to find ways to complete the WSMO generator from HL7 with a user interface [ ]. |
| Frontier technology | (i) The literature is yet to find ways so that web applications can combine natural language processing (NLP) and domain knowledge induction in decision making and automate medical healthcare services [ ]. (ii) The literature is yet to discover a technique to combine cloud computing, AI, and quantum physics with a platform to anticipate the chemical and pharmacological properties of small-molecule compounds for medication research and design [ ]. |
| Regulatory conditions | (i) Lack of information on the Semantic Web tools before the authors moved onto the architecture of the system [ ]. (ii) There was no emphasis on the semantic quality of available languages in any of the literature evaluation steps [ ]. |

Table 5: Future research avenues in the form of research questions.

| Themes | Future research avenues |
|---|---|
| E-service | (i) What features should an interoperability framework contain in order to be considered complete [ ]? (ii) What technologies are required to generate video file ontologies, and what are the drawbacks of doing so [ ]? (iii) What approaches may healthcare organizations use to provide medical recommendations without consulting the medical practitioners directly [ ]? (iv) How can the healthcare industry use Web 3.0 to boost medical education [ ]? (v) What strategies can be applied to assess a patient's severity level based on the patient's collected health data [ ]? (vi) What technologies can be utilized to create web services, and how can a system automatically determine and choose the optimal web services for it [ ]? (vii) What kinds of security precautions should be considered while sharing information over the web [ ]? (viii) When it comes to adopting a Web 3.0-based clinical validation system, what technological skills and facility-related challenges do researchers face? What steps should be taken to ensure that clinical processes are validated [ ]? (ix) What strategies and techniques can healthcare organizations use to increase similarity scores between service parameters [ ]? (x) How can semantic interoperability between healthcare data, services, and applications be assessed in the context of the Internet of Things [ ]? |
| Disease | (i) Which policies and regulations may ontological systems use to comply with W3C standards [ ]? (ii) How can scholars expand a disease's set of queries to cover a wider range of use cases [ ]? (iii) What will be the most effective user interface designs for massive data networks that can interpret the semantics of clinical data [ ]? |
| Information management | (i) What are the recommendations for indexing high-quality videos in Graph DB to increase attribute extraction [ ]? (ii) What procedures must be followed in order to extract attributes from the data that are gathered from different audio-visual web systems [ ]? (iii) Is it possible to improve the performance of the Web Service Modeling Ontology generator with a modified user interface [ ]? (iv) Will the RDF ontology be able to replace web crawlers in terms of retrieving required data from the web [ ]? |
| Frontier technology | (i) How can web applications automate medical healthcare services by combining natural language processing (NLP) with domain knowledge induction in decision making [ ]? (ii) How could the Semantic Web platform anticipate the chemical and pharmacological properties of small-molecule compounds using cloud computing, quantum physics, and artificial intelligence [ ]? (iii) What are the procedures to implement ontology-based restricted Boltzmann machine (ORBM) in electronic healthcare record (EHR) [ ]? |
| Regulatory conditions | (i) Which techniques can be used to optimize NLP for transforming pathology report segments into XML [ ]? (ii) What strategies and activities may developers employ to address semantic quality issues in existing languages [ ]? |

4. Research Gaps

This systematic literature review presents extensive knowledge about the use of Web 3.0, or Semantic Web, technology in different areas of the medical and healthcare sector. By analyzing the selected literature, we identified research gaps and the future research avenues that follow from them, which will enable scholars from different fields to examine the area and discover new developments. Table 4 summarizes the overall research gaps, and Table 5 summarizes the future research avenues we encountered during the literature review.

4.1. Scope of E-Healthcare Service Research

Even though studies in the domain of e-healthcare services have proposed and created numerous frameworks to provide vital support to users, research gaps remain among the methods. Several frameworks were proposed to facilitate data interoperability. However, to the best of our knowledge, none of the proposed frameworks has been implemented in the real world. Furthermore, there is no evidence of knowledge sharing among organizations using semantic network-based systems. Only a handful of the research papers included assessment methodologies and a discussion of the findings. In addition, the frameworks that provide medical services such as disease reasoning, decision making, and drug recommendations lack reliability and validity. Most of the research articles suggested architectures but did not implement them, and their intended prototypes were never built.

4.2. Scope of Disease Research

Semantic Web technologies are being used in the healthcare sector to improve information interoperability and aid in identifying and treating diseases. Only a few of the 65 papers examined frameworks for developing a fully functional system, whether for diabetic healthcare or for expanding a disease's set of prebuilt queries. Earlier research also did not map the triples of one disease's RDF to other existing medical services, applications, and administrations. Intelligent user interfaces that grasp the semantics of clinical data have likewise not been developed. This review shows that more study is required to use ontologies efficiently in the healthcare sector and to store data with proper evaluation criteria.
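To make the triple-mapping gap more concrete, the following is a minimal sketch of what mapping one disease's RDF triples onto a shared service vocabulary could look like. It is not taken from any of the reviewed studies: the prefixes (`ex:`, `dia:`, `svc:`), the predicate names, and the mapping table are all hypothetical, and plain Python tuples stand in for a real RDF store.

```python
from typing import Dict, List, Tuple

# A triple is (subject, predicate, object); plain strings stand in for URIs.
Triple = Tuple[str, str, str]

# Hypothetical diabetes-specific triples (illustrative data only).
DIABETES_TRIPLES: List[Triple] = [
    ("ex:patient42", "dia:hasDiagnosis", "dia:Type2Diabetes"),
    ("ex:patient42", "dia:hasHbA1c", "7.9"),
    ("ex:patient42", "dia:prescribed", "dia:Metformin"),
]

# Hypothetical mapping from disease-specific predicates to a shared vocabulary.
PREDICATE_MAP: Dict[str, str] = {
    "dia:hasDiagnosis": "svc:condition",
    "dia:prescribed": "svc:medication",
}


def map_triples(
    triples: List[Triple], predicate_map: Dict[str, str]
) -> Tuple[List[Triple], List[Triple]]:
    """Rewrite predicates that have a mapping; collect the rest separately."""
    mapped: List[Triple] = []
    unmapped: List[Triple] = []
    for subject, predicate, obj in triples:
        if predicate in predicate_map:
            mapped.append((subject, predicate_map[predicate], obj))
        else:
            unmapped.append((subject, predicate, obj))
    return mapped, unmapped


mapped, unmapped = map_triples(DIABETES_TRIPLES, PREDICATE_MAP)
```

Returning the unmapped triples separately is a deliberate choice in this sketch: it makes visible which parts of a disease model have no counterpart in the target vocabulary, which is the kind of coverage a client assessment would need to report.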

4.3. Scope of Information Management Research

Medical data are considered valuable information that helps patients receive better care. Implementing Semantic Web technologies to store and search these data is challenging. Various studies have attempted to adopt specific methods that may aid in the proper management of medical information; however, some gaps remain. No attempt has been made to index high-quality videos and collect attributes for categorizing them. A validation gap exists due to the lack of suitable evaluation techniques. In most studies, RDF ontologies are used to collect information from websites and represent those data. However, no information is provided about how effective those models are in real-world applications.

4.4. Scope of Frontier Technology Research

Even though cutting-edge technologies such as AI, ML, robotics, and the IoT have revolutionized the healthcare industry and helped improve everything from routine tasks to data management and pharmaceutical development, the industry is still evolving and looking for ways to improve. From a research perspective, neither the Semantic Web nor frontier technology is new, yet the Semantic Web still has limitations. Since the web began as a web of documents, converting each document into data is incredibly challenging. Various tools and approaches, such as natural language processing (NLP), may be used for this task, but doing so would take a long time, and only limited attempts have been made to integrate NLP with domain knowledge induction. Ontology and logic have always been and will continue to be essential elements of AI development, yet connecting ontology to AI is frequently a problem in and of itself. Furthermore, because ontology trees often have a large number of nodes, real-time execution is problematic, and earlier studies have not solved this problem. There have been significant attempts to incorporate the various aspects of IoT resources into ontology creation, such as connectivity, virtualization, mobility, energy, or life cycle [ 108 , 109 ]. The authors of these studies attempted to enhance the computerization of the health and medical industry by utilizing the Internet of Things (IoT) and Semantic Web technologies (SWTs), two key emerging technologies that play a significant role in overcoming the challenges of handling and presenting data searches in hospitals, clinics, and other medical establishments on a regular basis. Despite these significant efforts to combine different parts of the IoT spectrum with Semantic Web technologies, research gaps in medical data management persist.
For instance, since its introduction, the Medical Internet of Things (MIoT) has taken an active role in improving the health, safety, and care of billions of people. Rather than going to the hospital for help and support, patients can now have their health-related parameters monitored remotely, continuously, and in real time, and then processed and transferred to medical data centers via cloud storage. Because cloud platforms carry security risks, choosing one is a major technological challenge for the healthcare industry. Some of these cloud-based storage systems cannot adequately preserve patients' data and the associated semantic information [ 6 , 8 ]. However, none of the research articles suggested an architecture or built a prototype to address these cloud security issues of MIoT in general.

4.5. Scope of Regulatory Condition Research

Regulations are paramount for the healthcare and medical industries to function properly. They support the global healthcare market, ensure the delivery of healthcare services, and safeguard the rights and safety of patients, doctors, developers, researchers, and healthcare agents. Like many other technologies, the Semantic Web has its detractors when it comes to legislation and regulation. Historically, scaling medical knowledge graphs has always been a challenge. Because of privacy concerns and a lack of legal clarity, healthcare companies are not sufficiently incentivized to share their data as linked data. Only a few academic papers and documents disclose how these companies automate the process. Furthermore, compared to other types of datasets, many linked datasets representing tools are of poor quality. As a result, applying them to real-world problems is highly challenging. Other alternatives, such as property graph databases like Neo4j and mixed models like OrientDB, have grown in popularity due to the RDF format's complexity. Healthcare application developers and designers prefer to use web APIs rather than SPARQL endpoints to send data in JSON format. This study illustrates that more research is needed to improve the semantic quality of available technologies (e.g., RDF, OWL, and SPARQL) so that they can be used effectively in the healthcare industry to ease healthcare development.
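The contrast between querying triples and exchanging JSON through a web API can be illustrated with a small sketch. It is only an illustration under stated assumptions: the data and `ex:` names are hypothetical, the `match` helper merely imitates a single triple pattern of the kind a SPARQL query is built from, and no real triple store, SPARQL engine, or web framework is involved.

```python
import json
from typing import List, Optional, Tuple

# A triple is (subject, predicate, object); plain strings stand in for URIs.
Triple = Tuple[str, str, str]

# Hypothetical patient data (illustrative only).
TRIPLES: List[Triple] = [
    ("ex:patient1", "ex:hasCondition", "ex:Hypertension"),
    ("ex:patient1", "ex:takesDrug", "ex:Lisinopril"),
    ("ex:patient2", "ex:hasCondition", "ex:Asthma"),
]


def match(
    triples: List[Triple],
    s: Optional[str] = None,
    p: Optional[str] = None,
    o: Optional[str] = None,
) -> List[Triple]:
    """Return triples matching one pattern; None acts as a wildcard."""
    return [
        t
        for t in triples
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    ]


def to_json_record(triples: List[Triple], subject: str) -> str:
    """Flatten every triple about one subject into a JSON document,
    the shape a typical web API response might take."""
    record = {"id": subject}
    for _, predicate, obj in match(triples, s=subject):
        record.setdefault(predicate, []).append(obj)
    return json.dumps(record, sort_keys=True)


conditions = match(TRIPLES, p="ex:hasCondition")
patient1_json = to_json_record(TRIPLES, "ex:patient1")
```

The pattern-based lookup can answer questions across all subjects at once, while the JSON record is simpler to consume but fixes the shape of the data in advance. That trade-off is one plausible reading of why developers favor web APIs despite the expressiveness of graph queries.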

5. Discussion

This section describes the findings from the selected studies by answering the research questions, so that readers can map each research question to the contribution of this systematic review.

5.1. (RQ1) What Is the Research Profile of Existing Literature on the Semantic Web in the Healthcare Context?