Organizing Your Social Sciences Research Assignments

Analyzing a Scholarly Journal Article

Definition and Introduction

Journal article analysis assignments require you to summarize and critically assess the quality of an empirical research study published in a scholarly [a.k.a., academic, peer-reviewed] journal. The article may be assigned by the professor, chosen from course readings listed in the syllabus, or located on your own, usually with the requirement that you search using a reputable library database, such as JSTOR or ProQuest. The article chosen is expected to relate to the overall discipline of the course, specific course content, or key concepts discussed in class. In some cases, the purpose of the assignment is to analyze an article that is part of the literature review for a future research project.

Analysis of an article can be assigned to students individually or as part of a small group project. The final product is usually in the form of a short paper [typically 1-6 double-spaced pages] that addresses key questions the professor uses to guide your analysis or that assesses specific parts of a scholarly research study [e.g., the research problem, methodology, discussion, conclusions or findings]. The analysis paper may be shared on a digital course management platform and/or presented to the class for the purpose of promoting a wider discussion about the topic of the study. Although assigned in any level of undergraduate and graduate coursework in the social and behavioral sciences, professors frequently include this assignment in upper division courses to help students learn how to effectively identify, read, and analyze empirical research within their major.

Franco, Josue. “Introducing the Analysis of Journal Articles.” Prepared for presentation at the American Political Science Association’s 2020 Teaching and Learning Conference, February 7-9, 2020, Albuquerque, New Mexico; Sego, Sandra A. and Anne E. Stuart. "Learning to Read Empirical Articles in General Psychology." Teaching of Psychology 43 (2016): 38-42; Kershaw, Trina C., Jordan P. Lippman, and Jennifer Fugate. "Practice Makes Proficient: Teaching Undergraduate Students to Understand Published Research." Instructional Science 46 (2018): 921-946; Woodward-Kron, Robyn. "Critical Analysis and the Journal Article Review Assignment." Prospect 18 (August 2003): 20-36; MacMillan, Margy and Allison MacKenzie. "Strategies for Integrating Information Literacy and Academic Literacy: Helping Undergraduate Students Make the Most of Scholarly Articles." Library Management 33 (2012): 525-535.

Benefits of Journal Article Analysis Assignments

Analyzing and synthesizing a scholarly journal article is intended to help students obtain the reading and critical thinking skills needed to develop and write their own research papers. This assignment also supports workplace skills, where you could be asked to summarize a report or other type of document and present it, for example, during a staff meeting or as part of a presentation.

There are two broadly defined ways that analyzing a scholarly journal article supports student learning:

Improve Reading Skills

Conducting research requires an ability to review, evaluate, and synthesize prior research studies. Reading prior research requires an understanding of the academic writing style, the type of epistemological beliefs or practices underpinning the research design, and the specific vocabulary and technical terminology [i.e., jargon] used within a discipline. Reading scholarly articles is important because academic writing is unfamiliar to most students; they have had limited exposure to peer-reviewed journal articles prior to entering college, or they have yet to gain exposure to the specific academic writing style of their disciplinary major. Learning how to read scholarly articles also requires careful and deliberate concentration on how authors use specific language and phrasing to convey their research, the problem it addresses, its relationship to prior research, its significance, its limitations, and how authors connect methods of data gathering to the results so as to develop recommended solutions derived from the overall research process.

Improve Comprehension Skills

In addition to knowing how to read scholarly journal articles, students must learn how to effectively interpret what the scholar(s) are trying to convey. Academic writing can be dense, multi-layered, and non-linear in how information is presented. In addition, scholarly articles contain footnotes or endnotes, references to sources, multiple appendices, and, in some cases, non-textual elements [e.g., graphs, charts] that can break up the reader’s experience with the narrative flow of the study. Analyzing articles helps students practice comprehending these elements of writing, critiquing the arguments being made, reflecting upon the significance of the research, and considering how it relates to building new knowledge and understanding or applying new approaches to practice. Comprehending scholarly writing also involves thinking critically about where you fit within the overall dialogue among scholars concerning the research problem, finding possible gaps in the research that require further analysis, or identifying where the author(s) has failed to examine fully any specific elements of the study.

In addition, journal article analysis assignments are used by professors to strengthen discipline-specific information literacy skills, either alone or in relation to other tasks, such as giving a class presentation or participating in a group project. These benefits can include the ability to:

- Effectively paraphrase text, which leads to a more thorough understanding of the overall study;

- Identify and describe strengths and weaknesses of the study and their implications;

- Relate the article to other course readings and in relation to particular research concepts or ideas discussed during class;

- Think critically about the research and summarize complex ideas contained within;

- Plan, organize, and write an effective inquiry-based paper that investigates a research study, evaluates evidence, expounds on the author’s main ideas, and presents an argument concerning the significance and impact of the research in a clear and concise manner;

- Model the type of source summary and critique you should do for any college-level research paper; and,

- Increase interest and engagement with the research problem of the study as well as with the discipline.

Kershaw, Trina C., Jennifer Fugate, and Aminda J. O'Hare. "Teaching Undergraduates to Understand Published Research through Structured Practice in Identifying Key Research Concepts." Scholarship of Teaching and Learning in Psychology. Advance online publication, 2020; Franco, Josue. “Introducing the Analysis of Journal Articles.” Prepared for presentation at the American Political Science Association’s 2020 Teaching and Learning Conference, February 7-9, 2020, Albuquerque, New Mexico; Sego, Sandra A. and Anne E. Stuart. "Learning to Read Empirical Articles in General Psychology." Teaching of Psychology 43 (2016): 38-42; Woodward-Kron, Robyn. "Critical Analysis and the Journal Article Review Assignment." Prospect 18 (August 2003): 20-36; MacMillan, Margy and Allison MacKenzie. "Strategies for Integrating Information Literacy and Academic Literacy: Helping Undergraduate Students Make the Most of Scholarly Articles." Library Management 33 (2012): 525-535; Kershaw, Trina C., Jordan P. Lippman, and Jennifer Fugate. "Practice Makes Proficient: Teaching Undergraduate Students to Understand Published Research." Instructional Science 46 (2018): 921-946.

Structure and Organization

A journal article analysis paper should be written in paragraph format and include an introduction to the study, your analysis of the research, and a conclusion that provides an overall assessment of the author's work, along with an explanation of what you believe is the study's overall impact and significance. Unless the purpose of the assignment is to examine foundational studies published many years ago, you should select articles that have been published relatively recently [e.g., within the past few years].

Since the research has been completed, reference to the study in your paper should be written in the past tense, with your analysis stated in the present tense [e.g., “The author portrayed access to health care services in rural areas as primarily a problem of having reliable transportation. However, I believe the author is overgeneralizing this issue because...”].

Introduction Section

The first section of a journal analysis paper should describe the topic of the article and highlight the author’s main points. This includes describing the research problem and theoretical framework, the rationale for the research, the methods of data gathering and analysis, the key findings, and the author’s final conclusions and recommendations. The narrative should focus on the act of describing rather than analyzing. Think of the introduction as a more comprehensive and detailed descriptive abstract of the study.

Possible questions to help guide your writing of the introduction section may include:

- Who are the authors and what credentials do they hold that contribute to the validity of the study?

- What was the research problem being investigated?

- What type of research design was used to investigate the research problem?

- What theoretical idea(s) and/or research questions were used to address the problem?

- What was the source of the data or information used as evidence for analysis?

- What methods were applied to investigate this evidence?

- What were the author's overall conclusions and key findings?

Critical Analysis Section

The second section of a journal analysis paper should describe the strengths and weaknesses of the study and analyze its significance and impact. This section is where you shift the narrative from describing to analyzing. Think critically about the research in relation to other course readings, what has been discussed in class, or based on your own life experiences. If you are struggling to identify any weaknesses, explain why you believe this to be true. However, no study is perfect, regardless of how laudable its design may be. Given this, think about the repercussions of the choices made by the author(s) and how you might have conducted the study differently. Examples can include contemplating the choice of what sources were included or excluded in support of examining the research problem, the choice of the method used to analyze the data, or the choice to highlight specific recommended courses of action and/or implications for practice over others. Another strategy is to place yourself within the research study itself by thinking reflectively about what may be missing if you had been a participant in the study or if the recommended courses of action specifically targeted you or your community.

Possible questions to help guide your writing of the analysis section may include:

Introduction

- Did the author clearly state the problem being investigated?

- What was your reaction to and perspective on the research problem?

- Was the study’s objective clearly stated? Did the author clearly explain why the study was necessary?

- How well did the introduction frame the scope of the study?

- Did the introduction conclude with a clear purpose statement?

Literature Review

- Did the literature review lay a foundation for understanding the significance of the research problem?

- Did the literature review provide enough background information to understand the problem in relation to relevant contexts [e.g., historical, economic, social, cultural]?

- Did the literature review effectively place the study within the domain of prior research? Is anything missing?

- Was the literature review organized by conceptual categories or did the author simply list and describe sources?

Methods

- Did the author accurately explain how the data or information were collected?

- Was the data used sufficient in supporting the study of the research problem?

- Was there another methodological approach that could have been more illuminating?

- Give your overall evaluation of the methods used in this article. How much trust would you place in their ability to generate relevant findings?

Results and Discussion

- Were the results clearly presented?

- Did you feel that the results support the theoretical and interpretive claims of the author? Why?

- What did the author(s) do especially well in describing or analyzing their results?

- Was the author's evaluation of the findings clearly stated?

- How well did the discussion of the results relate to what is already known about the research problem?

- Was the discussion of the results free of repetition and redundancies?

- What interpretations did the authors make that you think are incomplete, unwarranted, or overstated?

Conclusion

- Did the conclusion effectively capture the main points of the study?

- Did the conclusion address the research questions posed? Do they seem reasonable?

- Were the author’s conclusions consistent with the evidence and arguments presented?

- Has the author explained how the research added new knowledge or understanding?

Overall Writing Style

- If the article included tables, figures, or other non-textual elements, did they contribute to understanding the study?

- Were ideas developed and related in a logical sequence?

- Were transitions between sections of the article smooth and easy to follow?

Overall Evaluation Section

The final section of a journal analysis paper should bring your thoughts together into a coherent assessment of the value of the research study. This section is where the narrative flow transitions from analyzing specific elements of the article to critically evaluating the overall study. Explain what you view as the significance of the research in relation to the overall course content and any relevant discussions that occurred during class. Think about how the article contributes to understanding the overall research problem, how it fits within existing literature on the topic, how it relates to the course, and what it means to you as a student researcher. In some cases, your professor will also ask you to describe your experiences writing the journal article analysis paper as part of a reflective learning exercise.

Possible questions to help guide your writing of the conclusion and evaluation section may include:

- Was the structure of the article clear and well organized?

- Was the topic of current or enduring interest to you?

- What were the main weaknesses of the article? [this does not refer to limitations stated by the author, but what you believe are potential flaws]

- Was any of the information in the article unclear or ambiguous?

- What did you learn from the research? If nothing stood out to you, explain why.

- Assess the originality of the research. Did you believe it contributed new understanding of the research problem?

- Were you persuaded by the author’s arguments?

- If the author made any final recommendations, will they be impactful if applied to practice?

- In what ways could future research build off of this study?

- What implications does the study have for daily life?

- Was the use of non-textual elements, footnotes or endnotes, and/or appendices helpful in understanding the research?

- What lingering questions do you have after analyzing the article?

NOTE: Avoid using quotes. One of the main purposes of writing an article analysis paper is to learn how to effectively paraphrase and use your own words to summarize a scholarly research study and to explain what the research means to you. Using and citing a direct quote from the article should only be done to help emphasize a key point or to underscore an important concept or idea.

Business: The Article Analysis. Fred Meijer Center for Writing, Grand Valley State University; Bachiochi, Peter et al. "Using Empirical Article Analysis to Assess Research Methods Courses." Teaching of Psychology 38 (2011): 5-9; Brosowsky, Nicholaus P. et al. “Teaching Undergraduate Students to Read Empirical Articles: An Evaluation and Revision of the QALMRI Method.” PsyArXiv Preprints, 2020; Holster, Kristin. “Article Evaluation Assignment.” TRAILS: Teaching Resources and Innovations Library for Sociology. Washington DC: American Sociological Association, 2016; Kershaw, Trina C., Jennifer Fugate, and Aminda J. O'Hare. "Teaching Undergraduates to Understand Published Research through Structured Practice in Identifying Key Research Concepts." Scholarship of Teaching and Learning in Psychology. Advance online publication, 2020; Franco, Josue. “Introducing the Analysis of Journal Articles.” Prepared for presentation at the American Political Science Association’s 2020 Teaching and Learning Conference, February 7-9, 2020, Albuquerque, New Mexico; Reviewer's Guide. SAGE Reviewer Gateway, SAGE Journals; Sego, Sandra A. and Anne E. Stuart. "Learning to Read Empirical Articles in General Psychology." Teaching of Psychology 43 (2016): 38-42; Kershaw, Trina C., Jordan P. Lippman, and Jennifer Fugate. "Practice Makes Proficient: Teaching Undergraduate Students to Understand Published Research." Instructional Science 46 (2018): 921-946; Gyuris, Emma, and Laura Castell. "To Tell Them or Show Them? How to Improve Science Students’ Skills of Critical Reading." International Journal of Innovation in Science and Mathematics Education 21 (2013): 70-80; Woodward-Kron, Robyn. "Critical Analysis and the Journal Article Review Assignment." Prospect 18 (August 2003): 20-36; MacMillan, Margy and Allison MacKenzie. "Strategies for Integrating Information Literacy and Academic Literacy: Helping Undergraduate Students Make the Most of Scholarly Articles." Library Management 33 (2012): 525-535.

Writing Tip

Not All Scholarly Journal Articles Can Be Critically Analyzed

There are a variety of articles published in scholarly journals that do not fit within the guidelines of an article analysis assignment. This is because the work cannot be empirically examined or it does not generate new knowledge in a way which can be critically analyzed.

If you are required to locate a research study on your own, avoid selecting these types of journal articles:

- Theoretical essays which discuss concepts, assumptions, and propositions, but report no empirical research;

- Statistical or methodological papers that may analyze data, but the bulk of the work is devoted to refining a new measurement, statistical technique, or modeling procedure;

- Articles that review, analyze, critique, and synthesize prior research, but do not report any original research;

- Brief essays devoted to research methods and findings;

- Articles written by scholars in popular magazines or industry trade journals;

- Academic commentary that discusses research trends or emerging concepts and ideas, but does not contain citations to sources; and

- Pre-print articles that have been posted online, but may undergo further editing and revision by the journal's editorial staff before final publication. An indication that an article is a pre-print is that it has no volume, issue, or page numbers assigned to it.

Journal Analysis Assignment - Myers. Writing@CSU, Colorado State University; Franco, Josue. “Introducing the Analysis of Journal Articles.” Prepared for presentation at the American Political Science Association’s 2020 Teaching and Learning Conference, February 7-9, 2020, Albuquerque, New Mexico; Woodward-Kron, Robyn. "Critical Analysis and the Journal Article Review Assignment." Prospect 18 (August 2003): 20-36.

- Last Updated: Jun 3, 2024 9:44 AM

- URL: https://libguides.usc.edu/writingguide/assignments

How To Critically Analyse An Article – Become A Savvy Reader

By Laura Brown on 22nd September 2023

In today’s academic environment, knowing how to analyse an article critically is an essential skill. It’s about reading between the lines, questioning what you encounter, and forming informed opinions based on evidence and sound reasoning.

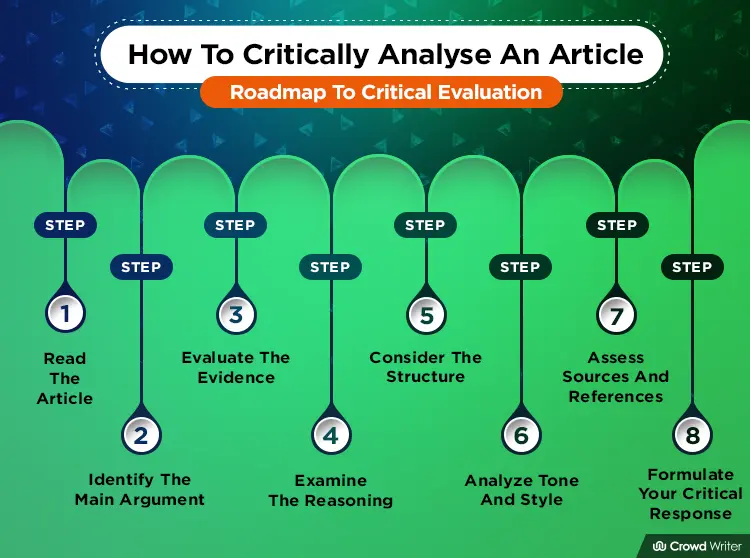

- To critically analyse an article, read it thoroughly to grasp the author’s main points.

- Evaluate the evidence and arguments presented, checking for credibility and logical consistency.

- Consider the article’s structure, tone, and style while also assessing its sources.

- Formulate your critical response by synthesising your analysis and constructing a well-supported argument.

Have you ever wondered how to tell if an article is good or not? It’s an important skill for your academic success. Critical analysis of an article is like being a detective. You check the article closely to see if it makes sense, if the facts are correct, and if the writer is trying to trick you.

But it’s not just something for school, college or university; it’s a superpower for everyday life. It helps you find the important stuff in an article, spot when someone is trying to persuade you and understand what the writer really thinks.

Think of it as a special skill that lets you dig deep into an article, like a treasure hunt. You uncover hidden biases, find the truth, and see how the writer tries to convince you. It’s a bit like being a detective and a wizard at the same time.

Get ready to become a smart reader. This guide will show you how to use this superpower to make sense of the information around us in just 8 simple steps.

Step 1: Read the Article

Before embarking on the journey to analyse an article critically, it is paramount to begin with the foundational step of reading the article itself. This step lays the groundwork for a comprehensive understanding of the material, enabling you to effectively evaluate its merits and demerits.

Reading an article critically starts with setting aside distractions and immersing yourself in the text. Instead of skimming through it hurriedly, take the time to read it meticulously.

To truly grasp the article’s essence, you must consider both its content and context. Content refers to the information and ideas presented within the article, while context encompasses the circumstances in which it was written.

- Why was this article written?

- Who is the intended audience?

- When was it published, and what was happening in the world at that time?

- What is the author’s background or expertise in the subject matter?

As you read, do not rely solely on your memory to retain key points and insights. Taking notes is an invaluable practice during this phase. Record significant ideas, quotes, and statistics that catch your attention.

Your initial impressions of the article can offer valuable insights into your subjective response. If a particular passage elicits a strong emotional reaction, make a note of it. Identifying your emotional responses can help you later in the analysis process when considering your own biases and reactions to the author’s arguments.

Step 2: Identify the Main Argument

When you critically analyse an article, pinpointing the central argument is akin to finding the North Star that guides you through the article’s content. Every well-crafted article should possess a clear and concise main argument or thesis, which serves as the nucleus of the author’s message. Typically situated in the article’s introduction or abstract, this argument not only encapsulates the author’s viewpoint but also functions as a roadmap for the reader, outlining what to expect in the subsequent sections.

Identifying the main argument necessitates a discerning eye. Delve into the introductory paragraphs, abstract, or the initial sections of the article to locate this pivotal statement. The argument may be explicit, stated directly by the author, or implicit, inferred through careful examination of the content. Once you’ve grasped the main argument, keep it at the forefront of your mind as you proceed with your analysis; it will serve as the cornerstone against which all other elements are evaluated.

Step 3: Evaluate the Evidence

An article’s persuasive power hinges on the quality of the evidence presented to substantiate its main argument, so in this critical step you must scrutinise that evidence with a discerning eye. Look beyond the surface to assess the data, statistics, examples, and citations provided by the author. These elements serve as the pillars upon which the argument stands or crumbles. You can also run the article through Turnitin for a plagiarism check.

Begin by evaluating the credibility and relevance of the sources used to support the argument. Are they authoritative and trustworthy? Are they current and pertinent to the subject matter? Assess the quality of evidence by considering the reliability of the data, the objectivity of the sources, and the breadth of examples. Moreover, consider the quantity of evidence; is there enough to convincingly underpin the thesis, or does it appear lacking or selective? A well-supported argument should be built upon a solid foundation of robust evidence.

Step 4: Examine the Reasoning

Critical analysis doesn’t stop at identifying the argument and assessing the evidence; it extends to examining the underlying reasoning that connects these elements. In this step, delve deeper into the author’s logic and the structure of the argument. The goal is to identify any logical fallacies or weak assumptions that might undermine the article’s credibility.

Scrutinise the coherence and consistency of the author’s reasoning. Are there any gaps in the argument, or does it flow logically from point to point? Identify any potential biases, emotional appeals, or rhetorical strategies employed by the author. Assess whether the argument is grounded in sound principles and reasoning.

Be on the lookout for flawed deductive or inductive reasoning, and question whether the evidence truly supports the conclusions drawn. Critical thinking is pivotal here, as it allows you to gauge the strength of the article’s argumentation and identify areas where it may be lacking or vulnerable to critique.

Step 5: Consider the Structure

The structure of an article is not merely a cosmetic feature but a fundamental aspect that can profoundly influence its overall effectiveness in conveying its message. A well-organised article possesses the power to captivate readers, enhance comprehension, and amplify its impact. To harness this power effectively, it’s crucial to pay close attention to various structural elements.

- Headings and Subheadings: Examine headings and subheadings to understand the article’s structure and main themes.

- Transitions Between Sections: Observe how transitions between sections maintain or disrupt the flow of ideas.

- Logical Progression: Assess if the article logically builds upon concepts or feels disjointed.

- Use of Visual Aids: Evaluate the integration and effectiveness of visual aids like graphs and charts.

- Paragraph Organisation: Analyse paragraph structure, including clear topic sentences.

- Conclusion and Summary: Review the conclusion for a strong reiteration of the main argument and key takeaways.

In essence, the structure of an article serves as the blueprint that shapes the reader’s journey. A thoughtfully organised article not only makes it easier for readers to navigate the content but also enhances their overall comprehension and retention. By paying attention to these structural elements, you can gain a deeper understanding of the author’s message and how it is effectively conveyed to the audience.

Step 6: Analyse Tone and Style

Exploring the tone and style of an article is like deciphering the author’s hidden intentions and underlying biases. It involves looking closely at how the author has crafted their words, examining their choice of language, tone, and use of rhetorical devices. Is the tone even-handed and impartial, or can you detect signs of favouritism or prejudice? Understanding the author’s perspective in this way allows you to place their argument within a broader context, helping you see beyond the surface of the text.

When you analyse tone, consider whether the author’s language carries any emotional weight. Are they using words that evoke strong feelings, or do they maintain an objective and rational tone throughout? Furthermore, observe how the author addresses counterarguments. Are they respectful and considerate, or do they employ ad hominem attacks? Evaluating tone and style can offer valuable insights into the author’s intentions and their ability to construct a persuasive argument.

Step 7: Assess Sources and References

A critical analysis wouldn’t be complete without examining the sources and references cited within the article. These citations form the foundation upon which the author’s arguments rest. To assess the credibility of the author’s research, it’s essential to scrutinise the origins of these sources. Are they drawn from reputable, well-established journals, books, or widely recognised and trusted websites? High-quality sources reflect positively on the author’s research and strengthen the overall validity of the argument.

As you critically analyse an article, be vigilant when it relies heavily on sources that might be considered unreliable or biased. Investigate whether the author has balanced their sources and considered diverse perspectives. A well-researched article should draw upon a variety of reputable sources to provide a well-rounded view of the topic. By assessing the sources and references, you can gauge the robustness of the author’s supporting evidence.

Step 8: Formulate Your Critical Response

Having navigated through the previous steps, it’s now your turn to construct a critical response to the article. This step involves summarising your analysis by identifying the strengths and weaknesses within the article. Do you find yourself in agreement with the main argument, or do you have reservations? Highlight the evidence that you found compelling and areas where you believe the article falls short. Your critical response serves as a valuable contribution to the ongoing discourse surrounding the topic, adding your unique perspective to the conversation. Remember that constructive criticism can lead to deeper understanding and improved future discourse.

Now, let’s look more closely at two of the most commonly analysed types of article: research articles and journal articles.

How To Critically Analyse A Research Article?

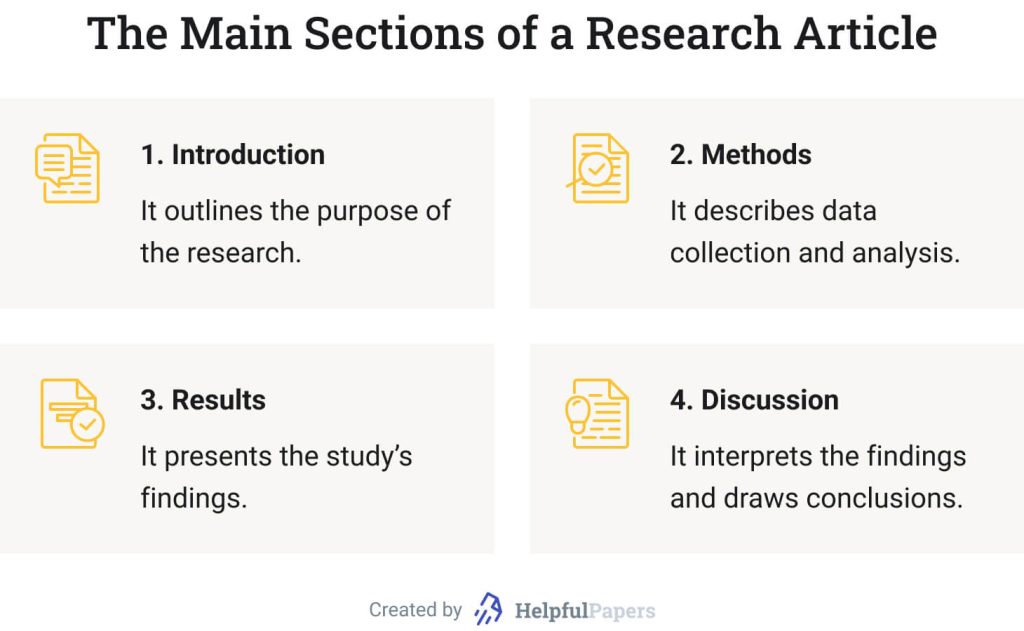

A research article is a scholarly document that presents the findings of original research conducted by the author(s) and is typically published in academic journals. It follows a structured format, including sections such as an abstract, introduction, methods, results, discussion, and references. To critically analyse a research article, you may go through the following six steps.

- Scrutinise the research question’s clarity and significance.

- Examine the appropriateness of research methods.

- Assess sample quality and data reliability.

- Evaluate the accuracy and significance of results.

- Review the discussion for supported conclusions.

- Check references for relevant and high-quality sources.


How To Critically Analyse A Journal Article?

A journal article is a scholarly publication that presents research findings, analyses, or discussions within a specific academic or scientific field. These articles typically follow a structured format and are subject to peer review before publication. In order to critically analyse a journal article, take the following steps.

- Evaluate the article’s clarity and relevance.

- Examine the research methods and their suitability.

- Assess the credibility of data and sources.

- Scrutinise the presentation of results.

- Analyse the conclusions drawn.

- Consider the quality of references and citations.


Conclusion: How To Analyse An Article Critically

Mastering the art of analysing an article critically is a valuable skill that empowers you to navigate the vast sea of information with confidence. By following these eight steps, you can dissect articles effectively, separating reliable information from biased or poorly supported claims. Remember, critical analysis is not about tearing an article apart but understanding it deeply and thoughtfully. With practice, you’ll become a more discerning and informed reader, researcher, or student.

Laura Brown, a senior content writer who writes actionable blogs at Crowd Writer.

Research Paper Analysis: How to Analyze a Research Article + Example

Why might you need to analyze research? First of all, when you analyze a research article, you begin to understand your assigned reading better. It is also the first step toward learning how to write your own research articles and literature reviews. However, if you have never written a research paper before, it may be difficult for you to analyze one. After all, you may not know what criteria to use to evaluate it. But don’t panic! We will help you figure it out!

In this article, our team has explained how to analyze research papers quickly and effectively. At the end, you will also find a research analysis paper example to see how everything works in practice.

- 🔤 Research Analysis Definition

- 📊 How to Analyze a Research Article

- ✍️ How to Write a Research Analysis

- 📝 Analysis Example

- 🔎 More Examples


🔤 Research Paper Analysis: What Is It?

A research paper analysis is an academic writing assignment in which you analyze a scholarly article’s methodology, data, and findings. In essence, “to analyze” means to break something down into components and assess each of them individually and in relation to each other. The goal of an analysis is to gain a deeper understanding of a subject. So, when you analyze a research article, you dissect it into elements like data sources, research methods, and results and evaluate how they contribute to the study’s strengths and weaknesses.

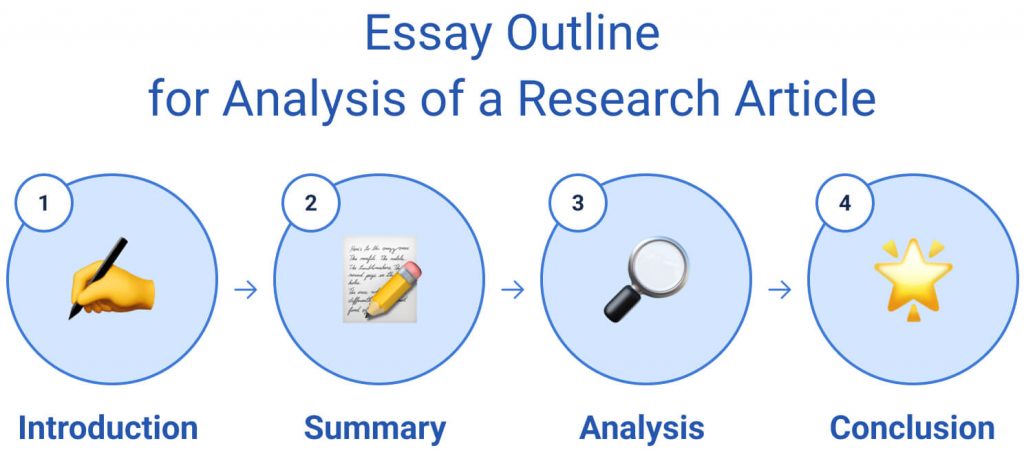

📋 Research Analysis Format

A research analysis paper has a pretty straightforward structure. Check it out below!

| Introduction | This section should state the analyzed article’s title and author and outline its main idea. The introduction should end with a strong thesis statement, presenting your conclusions about the article’s strengths, weaknesses, or scientific value. |

| Summary | Here, you need to summarize the major concepts presented in your research article. This section should be brief. |

| Analysis | The analysis should contain your evaluation of the paper. It should explain whether the research meets its intentions and purpose and whether it provides a clear and valid interpretation of results. |

| Conclusion | The closing paragraph should include a rephrased thesis, a summary of core ideas, and an explanation of the analyzed article’s relevance and importance. |

| References | At the end of your work, you should add a reference list. It should include the analyzed article’s citation in your required format (APA, MLA, etc.). If you’ve cited other sources in your paper, they must also be indicated in the list. |

Research articles usually include the following sections: introduction, methods, results, and discussion. In the following paragraphs, we will discuss how to analyze a scientific article with a focus on each of its parts.

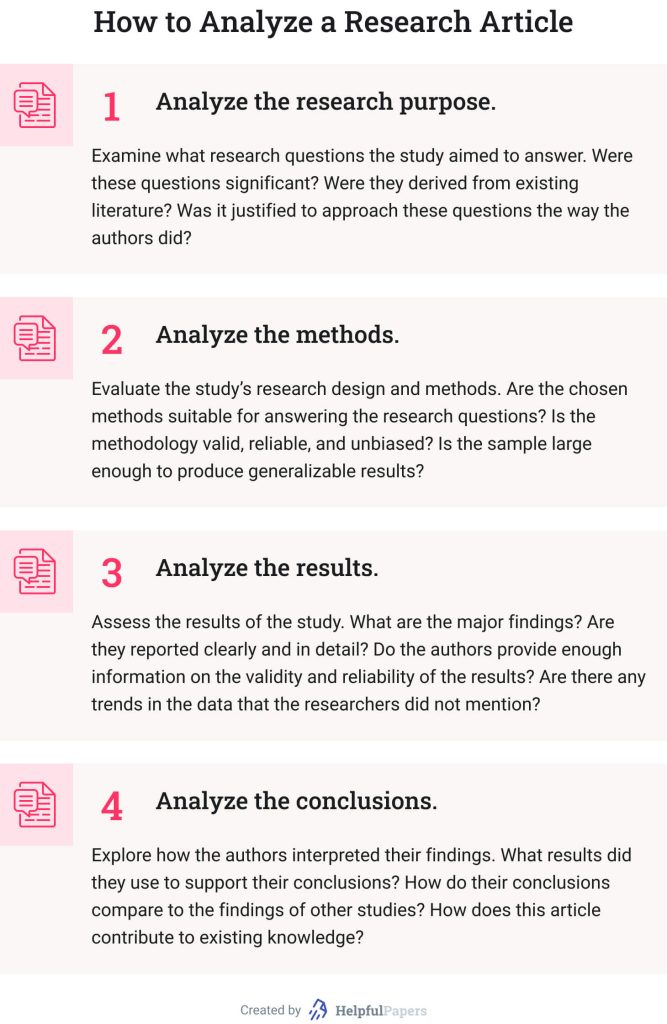

How to Analyze a Research Paper: Purpose

The purpose of the study is usually outlined in the introductory section of the article. Analyzing the research paper’s objectives is critical to establish the context for the rest of your analysis.

When analyzing the research aim, you should evaluate whether it was justified for the researchers to conduct the study. In other words, you should assess whether their research question was significant and whether it arose from existing literature on the topic.

Here are some questions that may help you analyze a research paper’s purpose:

- Why was the research carried out?

- What gaps does it try to fill, or what controversies to settle?

- How does the study contribute to its field?

- Do you agree with the author’s justification for approaching this particular question in this way?

How to Analyze a Paper: Methods

When analyzing the methodology section, you should indicate the study’s research design (qualitative, quantitative, or mixed) and methods used (for example, experiment, case study, correlational research, survey, etc.). After that, you should assess whether these methods suit the research purpose. In other words, do the chosen methods allow scholars to answer their research questions within the scope of their study?

For example, if scholars wanted to study US students’ average satisfaction with their higher education experience, they could conduct a quantitative survey. However, if they wanted to gain an in-depth understanding of the factors influencing US students’ satisfaction with higher education, qualitative interviews would be more appropriate.

When analyzing methods, you should also look at the research sample. Did the scholars use randomization to select study participants? Was the sample big enough for the results to be generalizable to a larger population?

You can also answer the following questions in your methodology analysis:

- Is the methodology valid? In other words, did the researchers use methods that accurately measure the variables of interest?

- Is the research methodology reliable? A research method is reliable if it can produce stable and consistent results under the same circumstances.

- Is the study biased in any way?

- What are the limitations of the chosen methodology?
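To make the sample-size question more concrete, here is a minimal Python sketch of how sampling uncertainty shrinks as a sample grows. It assumes a simple random sample and a survey question answered as a proportion; the function name `margin_of_error` and the sample sizes are our own illustrative choices, not something taken from any particular study.

```python
import math


def margin_of_error(sample_size: int, proportion: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion from a simple random sample.

    z = 1.96 corresponds to a 95% confidence level; proportion = 0.5 is the
    most conservative (widest) assumption.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


# Hypothetical sample sizes, chosen only to show the trend.
for n in (50, 200, 1000):
    print(f"n={n}: +/- {margin_of_error(n) * 100:.1f} percentage points")
```

A small sample does not automatically invalidate a study, but a wide margin of error is worth mentioning when you discuss how far the findings can be generalized.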

How to Analyze Research Articles’ Results

You should start the analysis of the article results by carefully reading the tables, figures, and text. Check whether the findings correspond to the initial research purpose. See whether the results answered the author’s research questions or supported the hypotheses stated in the introduction.

To analyze the results section effectively, answer the following questions:

- What are the major findings of the study?

- Did the author present the results clearly and unambiguously?

- Are the findings statistically significant?

- Does the author provide sufficient information on the validity and reliability of the results?

- Have you noticed any trends or patterns in the data that the author did not mention?
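If the article reports group means but you are unsure what “statistically significant” implies, the following sketch may help. It uses a permutation test, which is only one of several possible approaches and not necessarily the one the article’s authors used. The scores are invented purely for illustration, and `permutation_p_value` is a hypothetical helper name.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def permutation_p_value(group_a, group_b, n_permutations=5000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Repeatedly shuffles the pooled scores and counts how often a random
    split produces a mean difference at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(_mean(group_a) - _mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(_mean(pooled[:n_a]) - _mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical scores for two groups, invented for this example.
treatment = [88, 92, 85, 91, 87, 90, 89, 93]
control = [84, 86, 83, 88, 82, 85, 87, 84]
p = permutation_p_value(treatment, control)
print(f"Estimated p-value: {p:.4f}")
```

A small p-value suggests the difference is unlikely to be due to chance alone, but it says nothing about whether the effect is large enough to matter in practice, so check effect sizes too.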

How to Analyze Research: Discussion

Finally, you should analyze the authors’ interpretation of results and its connection with research objectives. Examine what conclusions the authors drew from their study and whether these conclusions answer the original question.

You should also pay attention to how the authors used findings to support their conclusions. For example, you can reflect on why their findings support that particular inference and not another one. Moreover, more than one conclusion can sometimes be made based on the same set of results. If that’s the case with your article, you should analyze whether the authors addressed other interpretations of their findings.

Here are some useful questions you can use to analyze the discussion section:

- What findings did the authors use to support their conclusions?

- How do the researchers’ conclusions compare to other studies’ findings?

- How does this study contribute to its field?

- What future research directions do the authors suggest?

- What additional insights can you share regarding this article? For example, do you agree with the results? What other questions could the researchers have answered?

Now you know how to analyze an article that presents research findings. However, that is just part of the work you have to do to complete your paper. So, it’s time to learn how to write a research analysis! Check out the steps below!

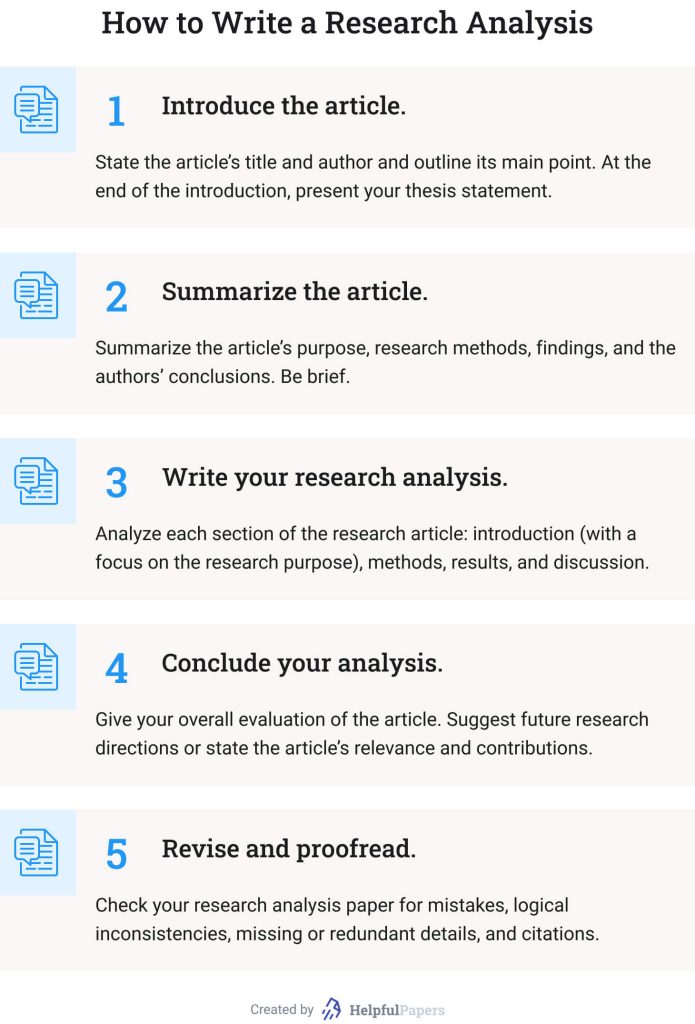

1. Introduce the Article

As with most academic assignments, you should start your research article analysis with an introduction. Here’s what it should include:

- The article’s publication details. Specify the title of the scholarly work you are analyzing, its authors, and publication date. Remember to enclose the article’s title in quotation marks and write it in title case.

- The article’s main point. State what the paper is about. What did the authors study, and what was their major finding?

- Your thesis statement. End your introduction with a strong claim summarizing your evaluation of the article. Consider briefly outlining the research paper’s strengths, weaknesses, and significance in your thesis.

Keep your introduction brief. Save the word count for the “meat” of your paper — that is, for the analysis.

2. Summarize the Article

Now, you should write a brief and focused summary of the scientific article. It should be shorter than your analysis section and contain all the relevant details about the research paper.

Here’s what you should include in your summary:

- The research purpose. Briefly explain why the research was done. Identify the authors’ purpose and research questions or hypotheses.

- Methods and results. Summarize what happened in the study. State only facts, without the authors’ interpretations of them. Avoid using too many numbers and details; instead, include only the information that will help readers understand what happened.

- The authors’ conclusions. Outline what conclusions the researchers made from their study. In other words, describe how the authors explained the meaning of their findings.


3. Write Your Research Analysis

The analysis of the study is the most crucial part of this assignment type. Its key goal is to evaluate the article critically and demonstrate your understanding of it.

We’ve already covered how to analyze a research article in the section above. Here’s a quick recap:

- Analyze whether the study’s purpose is significant and relevant.

- Examine whether the chosen methodology allows for answering the research questions.

- Evaluate how the authors presented the results.

- Assess whether the authors’ conclusions are grounded in findings and answer the original research questions.

Although you should analyze the article critically, this doesn’t mean you should only criticize it. If the authors did a good job designing and conducting their study, be sure to explain why you think their work is well done. Also, it is a great idea to provide examples from the article to support your analysis.

4. Conclude Your Research Paper Analysis

A conclusion is your chance to reflect on the study’s relevance and importance. Explain how the analyzed paper can contribute to the existing knowledge or lead to future research. Also, you need to summarize your thoughts on the article as a whole. Avoid making value judgments — saying that the paper is “good” or “bad.” Instead, use more descriptive words and phrases such as “This paper effectively showed…”


5. Revise and Proofread

Last but not least, you should carefully proofread your paper to find any punctuation, grammar, and spelling mistakes. Start by reading your work out loud to ensure that your sentences fit together and sound cohesive. Also, it can be helpful to ask your professor or peer to read your work and highlight possible weaknesses or typos.

📝 Research Paper Analysis Example

We have prepared an example analysis of a research paper to show how everything works in practice.

No Homework Policy: Research Article Analysis Example

This paper aims to analyze the research article entitled “No Assignment: A Boon or a Bane?” by Cordova, Pagtulon-an, and Tan (2019). This study examined the effects of having and not having assignments on weekends on high school students’ performance and transmuted mean scores. This article effectively shows the value of homework for students, but larger studies are needed to support its findings.

Cordova et al. (2019) conducted a descriptive quantitative study using a sample of 115 Grade 11 students of the Central Mindanao University Laboratory High School in the Philippines. The sample was divided into two groups: the first received homework on weekends, while the second didn’t. The researchers compared students’ performance records made by teachers and found that students who received assignments performed better than their counterparts without homework.

The purpose of this study is highly relevant and justified as this research was conducted in response to the debates about the “No Homework Policy” in the Philippines. Although the descriptive research design used by the authors allows the research question to be answered, the study could benefit from an experimental design. This way, the authors would have firm control over variables. Additionally, the study’s sample size was not large enough for the findings to be generalized to a larger population.

The study results are presented clearly, logically, and comprehensively and correspond to the research objectives. The researchers found that students’ mean grades decreased in the group without homework and increased in the group with homework. Based on these findings, the authors concluded that homework positively affected students’ performance. This conclusion is logical and grounded in data.

This research effectively showed the importance of homework for students’ performance. Yet, since the sample size was relatively small, larger studies are needed to ensure the authors’ conclusions can be generalized to a larger population.

🔎 More Research Analysis Paper Examples

Do you want another research analysis example? Check out the best analysis research paper samples below:

- Gracious Leadership Principles for Nurses: Article Analysis

- Effective Mental Health Interventions: Analysis of an Article

- Nursing Turnover: Article Analysis

- Nursing Practice Issue: Qualitative Research Article Analysis

- Quantitative Article Critique in Nursing

- LIVE Program: Quantitative Article Critique

- Evidence-Based Practice Beliefs and Implementation: Article Critique

- “Differential Effectiveness of Placebo Treatments”: Research Paper Analysis

- “Family-Based Childhood Obesity Prevention Interventions”: Analysis Research Paper Example

- “Childhood Obesity Risk in Overweight Mothers”: Article Analysis

- “Fostering Early Breast Cancer Detection” Article Analysis

- Space and the Atom: Article Analysis

- “Democracy and Collective Identity in the EU and the USA”: Article Analysis

- China’s Hegemonic Prospects: Article Review

- Article Analysis: Fear of Missing Out

- Codependence, Narcissism, and Childhood Trauma: Analysis of the Article

- Relationship Between Work Intensity, Workaholism, Burnout, and MSC: Article Review

We hope that our article on research paper analysis has been helpful. If you liked it, please share this article with your friends!



How to Analyze a Research Article: Guide & Analysis Examples

Analyzing a scientific paper is a complicated task that requires knowledge and systematic preparation. This process favors patience over haste and offers a deep and nuanced exploration of the presented research. Fully understanding the peculiarities of content analysis may seem complicated, especially when it comes to evaluating the results.

Our team has developed a thorough guide to make your writing process more comfortable and focused. We offer helpful tips and examples to help you create a paper worthy of admiration from college professors. This guide has everything for a stellar and in-depth argument analysis.

- 🤔 What Is a Research Article Analysis?

- 🎯 Analysis of a Paper: Main Goals

- 📑 How to Analyze a Research Article

- 📝 Research Analysis Template: Essay Outline

- ✨ 5 Tips for Writing an Analysis

- ✒️ 3 Research Paper Analysis Examples


A research article analysis is a genre of academic writing that lets students assess issues and arguments presented in research articles. They review the text’s content and determine its validity through critical thinking. It involves a great deal of topic analysis. After studying the source, they provide an assessment of the claims with evidence that supports or disproves them. It’s their job to establish which of the statements are flawed and which are valid. Students also summarize the article and explain its relevance to the field of study.

| A good research article analysis gives the readers a comprehensive understanding of the topic. To achieve this, provide a clear summary of the work and enough details to get people to understand the topic. Ensure that you have a good grasp of the subject, or you won’t be able to explain it properly. | |

| Your writing should motivate others to research the subject beyond the content of the analyzed work. Write an interesting paper and make people reflect on how the research applies to them. | |

| Aside from being educational and informative, a research article analysis encourages people to think about the content of the claims. You must evaluate how well the author presented their arguments through facts and logic. Ultimately, the reader should be persuaded to side with your assessment. |

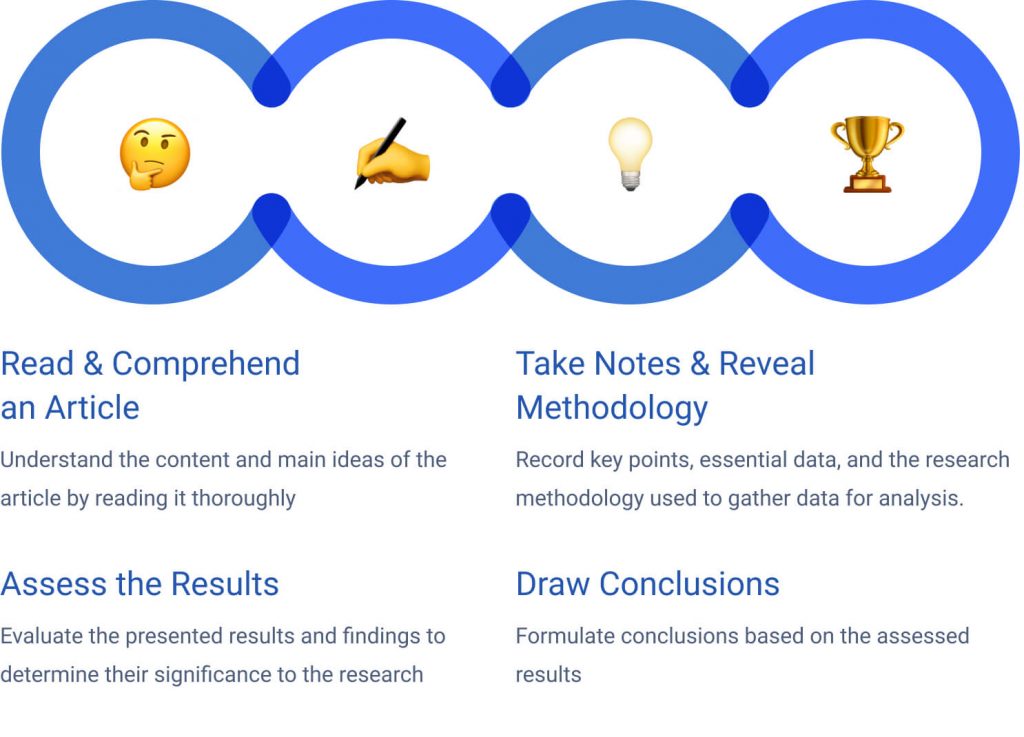

📑 How to Analyze a Research Article – 4 Key Steps

Research paper analysis is often both educational and complicated. Taking a structured approach to this task makes it a lot easier. This section of our guide presents the four stages that help you better understand the content of a scientific paper. Follow our analysis plan to learn how to write about things like results and methodology analysis.

Research Paper Analysis: Read an Article & Take Notes

You can’t critique a research piece without reading the source material first. Skimming over the paper won’t do, as you’ll miss crucial details for your analysis. Follow these steps to ensure you get the most out of the paper while critically evaluating its contents.

- Read the paper once to have a general understanding of what it’s about. Ensure you are familiar with the topic beyond elementary knowledge. You may also read up on related literature beforehand.

- Read the second time and take notes. Read the entire research paper carefully, paying attention to the results, discussion, and conclusion sections. It’s better to take detailed notes as you read, summarizing each section in your own words.

- Outline the results of the study. Write a short rundown of what the author found during the research.

Research Paper Analysis: Comprehend & Reveal the Methodology

Once you’re done with the first step, it’s time to move on to the second stage of research paper analysis: comprehending and revealing the methodology. You can’t possibly remember everything about the article, so revisit your notes as you go.

- Explore key terms and concepts of an article. If you don’t fully understand them, take the time to familiarize yourself with them.

- Find the hypothesis or the research question the author decided to tackle. Check the facts, arguments, and logic behind their thinking to view the paper critically.

- Look at the validity of the arguments. Research sources used in the article and assess the credibility of the supporting evidence.

- Identify the study’s methodology. Find out how the author approached the research and what its results were. Once you establish the methodology, evaluate the quality of the information, including the methods of data gathering and analysis.

Research Paper Analysis: Assess the Results

After completing the second part of the analysis, it’s time to assess the research results. Establish whether the findings support the paper’s hypothesis and whether they are statistically significant. Address any limitations of the study. For example, a psychology paper may rely on a small group of participants that cannot be considered representative of a larger population. The researcher may even have done this intentionally to skew the findings. However, it’s best to be balanced in your article critique and talk about the strong sides of the paper as well. Look at similar studies and see if they came to the same conclusions.

Research Paper Analysis: Draw Conclusions

After you’ve completed the previous steps, you’re ready to make conclusions about the research paper as a whole. Don’t forget to include the following information when working on this part of the analysis:

- Determine the theoretical and practical meaning of the results. For example, if the paper found a particular treatment effective, emphasize that it should be used more often.

- Consider the applications of the research results. Try to find out how they can be applied to the broader field and what ethical implications they may lead to.

- Show how they relate to the big picture. Finally, your analysis should show how the article expands the knowledge of the subject and what it means to further studies.

Writing a research paper analysis can be demanding. These papers have several characteristics that set them apart from other types of academic writing. We’ve created this simple template to explain the content each part of your assessment should have.

| Introduction | This segment introduces both the article and your critical evaluation of it. You should name the assessed work, its author or authors, and publication date here. Explain the main ideas of the paper and state its thesis. Develop your statement and briefly explain your thoughts about the piece. Keep this section short and informative. |

| Summary | Give a rundown of the main ideas from the research article. It should answer five questions: what, why, who, when, and how. Additionally, it would help if you talked about the paper’s structure, point of view, and style. Ensure that you properly identify the research methods used in the study. Also, be sure to use topic sentences to navigate your body paragraphs, just like you would in a regular essay. |

| Analysis | Describe your attitude toward the assessed paper in this segment of your work. Write down what you liked about it and what you didn’t, with specific examples from the piece. Finally, debate whether the author was successful in their research. |

| Conclusion | The final part of your work is where you restate your paper’s thesis using different wording. Summarize presented ideas and the most critical points. Feel free to praise the research if it is based on facts and logic, or criticize it if there is insufficient evidence and argumentation. Don’t let bias get in the way of your evaluation. |

✨ 5 Tips for Writing an Analysis of a Paper

Mastering the art of writing a research paper analysis takes time and practice. We’ve decided to provide five great tips that will make this process easier and more enjoyable:

- Take the time to establish the right angle for your analysis. If you can choose its direction, study the paper first and choose the best subject for your work. You can develop several ideas and select the right one later.

- Connect all evidence to your argument. When conducting the analysis, find facts and data supporting your point of view. Explain the connection of each piece of evidence to your statement and showcase what makes it particularly significant.

- Stay balanced. A good analysis covers all facts and looks at them objectively. When confronted with data that clashes with your stance, check it and use evidence to bolster the credibility of your arguments.

- Work on an outline. Good analysis is often grounded in a well-structured outline. Write down ideas and topic sentences that connect to various parts of the research sample and its general idea.

- Evaluate all evidence. All evidence has a place in your analysis. Use all of it, even if you come across contradictory facts. Include information that doesn’t fully support the main idea of your analysis and build compelling counterarguments.

✒️ 3 Great Research Paper Analysis Examples

Seeing an example of a finished article can point you in the right direction. Our team has collected three excellent essays to inspire your subsequent work and show how to approach its structure correctly.

- Analysis of The Five Dysfunctions of a Team Article. This paper concerns the pitfalls of team building in a business environment. In particular, it covers how to overcome this environment’s five most common dysfunctions.

- When Altruism Isn’t Moral by Sally Satel: Article Analysis. The author demonstrates that altruistic behavior is not always sufficient to explain human interaction. The paper discusses what drives people to help others for free or for money.

- Article Analysis Perceptions of ADHD Among Diagnosed Children and Their Parents. In this work, the author evaluates the findings on the perception of ADHD-diagnosed children in developed countries.

Research Article Analysis Topics

Now that you understand how to write a research paper analysis, it’s time to find the right topic for your paper. Below, you’ll find fifteen exciting ideas to inspire your analytical pursuits.

- Review a research paper on the effects of carbon emissions.

- Explore a recent research article on the use of opioids in American healthcare.

- Assess a research paper on the applications of the Large Hadron Collider.

- Discuss quantitative research about mental health rates in developed countries.

- Examine a scientific article about the problems of space exploration.

- Investigate a recent research paper about the rise of surveillance technology.

- Analyze a qualitative research paper on the effectiveness of biofuels as an alternative energy source.

- Study quantitative methods of research on the housing market in Canada.

- Scrutinize a research article about the use of pesticides in food production.

- Evaluate a paper on the efficiency of anti-climate change measures.

- Assess a recent study on the dangers of sugar consumption.

- Survey the latest research on fast food advertising tactics.

- Consider a paper on the most recent developments in string theory.

- Address a recent research article about artificial intelligence.

- Cover research about the benefits of using nuclear power.

We did our best to cover all crucial points of research article analysis and prepare you for this kind of academic work. When you have the time, share our guide with fellow students who can benefit from the content of our work!


How to conduct a meta-analysis in eight steps: a practical guide

- Open access

- Published: 30 November 2021

- Volume 72, pages 1–19 (2022)


- Christopher Hansen, Holger Steinmetz & Jörn Block


1 Introduction

“Scientists have known for centuries that a single study will not resolve a major issue. Indeed, a small sample study will not even resolve a minor issue. Thus, the foundation of science is the cumulation of knowledge from the results of many studies.” (Hunter et al. 1982, p. 10)

Meta-analysis is a central method for knowledge accumulation in many scientific fields (Aguinis et al. 2011c; Kepes et al. 2013). Similar to a narrative review, it serves as a synopsis of a research question or field. However, going beyond a narrative summary of key findings, a meta-analysis adds value in providing a quantitative assessment of the relationship between two target variables or the effectiveness of an intervention (Gurevitch et al. 2018). Also, it can be used to test competing theoretical assumptions against each other or to identify important moderators where the results of different primary studies differ from each other (Aguinis et al. 2011b; Bergh et al. 2016). Rooted in the synthesis of the effectiveness of medical and psychological interventions in the 1970s (Glass 2015; Gurevitch et al. 2018), meta-analysis is nowadays also an established method in management research and related fields.
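As a rough illustration of what such a quantitative assessment can look like, the sketch below pools effect sizes from several primary studies using inverse-variance weights under a fixed-effect model. This is an assumption made for illustration only: the passage above does not prescribe a particular model, and random-effects models are often preferred when studies differ substantially. The function name `fixed_effect_pool` and the input values are hypothetical.

```python
import math


def fixed_effect_pool(effects, variances):
    """Inverse-variance weighted mean effect and its standard error.

    Each study is weighted by 1 / variance, so more precise studies
    contribute more to the pooled estimate.
    """
    if len(effects) != len(variances) or not effects:
        raise ValueError("effects and variances must be non-empty and equal in length")
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    standard_error = math.sqrt(1.0 / total_weight)
    return pooled, standard_error


# Hypothetical effect sizes and sampling variances from three primary studies.
effects = [0.20, 0.35, 0.10]
variances = [0.010, 0.040, 0.020]
pooled, se = fixed_effect_pool(effects, variances)
print(f"Pooled effect: {pooled:.3f} (SE {se:.3f})")
```

The pooled estimate is pulled toward the most precise study, which is why the quality and precision of each primary study matter so much for the overall result.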

The increasing importance of meta-analysis in management research has resulted in the publication of guidelines in recent years that discuss the merits and best practices in various fields, such as general management (Bergh et al. 2016; Combs et al. 2019; Gonzalez-Mulé and Aguinis 2018), international business (Steel et al. 2021), economics and finance (Geyer-Klingeberg et al. 2020; Havranek et al. 2020), marketing (Eisend 2017; Grewal et al. 2018), and organizational studies (DeSimone et al. 2020; Rudolph et al. 2020). These articles discuss existing and trending methods and propose solutions for frequently encountered problems. This editorial briefly summarizes the insights of these papers; provides a workflow of the essential steps in conducting a meta-analysis; suggests state-of-the-art methodological procedures; and points to other articles for in-depth investigation. Thus, this article has two goals: (1) based on the findings of previous editorials and methodological articles, it defines methodological recommendations for meta-analyses submitted to Management Review Quarterly (MRQ); and (2) it serves as a practical guide for researchers who have little experience with meta-analysis as a method but plan to conduct one in the future.

2 Eight steps in conducting a meta-analysis

2.1 Step 1: defining the research question

The first step in conducting a meta-analysis, as with any other empirical study, is the definition of the research question. Most importantly, the research question determines the realm of constructs to be considered or the type of interventions whose effects are to be analyzed. When defining the research question, two hurdles may arise. First, when defining an adequate study scope, researchers must consider that the number of publications has grown exponentially in many fields of research in recent decades (Fortunato et al. 2018). On the one hand, a larger number of studies increases the potentially relevant literature basis and enables researchers to conduct meta-analyses. On the other hand, scanning a large number of potentially relevant studies can result in an unmanageable workload. Thus, Steel et al. (2021) highlight the importance of balancing manageability and relevance when defining the research question. Second, like the number of primary studies, the number of meta-analyses in management research has also grown strongly in recent years (Geyer-Klingeberg et al. 2020; Rauch 2020; Schwab 2015). Therefore, it is likely that one or several meta-analyses already exist for many topics of high scholarly interest. However, this should not deter researchers from investigating their research questions. One possibility is to consider moderators or mediators of a relationship that have previously been ignored. For example, a meta-analysis about startup performance could investigate the impact of different ways to measure the performance construct (e.g., growth vs. profitability vs. survival time) or certain characteristics of the founders as moderators. Another possibility is to replicate previous meta-analyses and test whether their findings can be confirmed with an updated sample of primary studies or newly developed methods. 
Frequent replications and updates of meta-analyses are important contributions to cumulative science and are increasingly called for by the research community (Anderson & Kichkha 2017; Steel et al. 2021). Consistent with its focus on replication studies (Block and Kuckertz 2018), MRQ therefore also invites authors to submit replication meta-analyses.

2.2 Step 2: literature search

2.2.1 Search strategies

Similar to conducting a literature review, the search process of a meta-analysis should be systematic, reproducible, and transparent, resulting in a sample that includes all relevant studies (Fisch and Block 2018; Gusenbauer and Haddaway 2020). There are several identification strategies for relevant primary studies when compiling meta-analytical datasets (Harari et al. 2020). First, previous meta-analyses on the same or a related topic may provide lists of included studies that offer a good starting point to identify and become familiar with the relevant literature. This practice is also applicable to topic-related literature reviews, which often summarize the central findings of the reviewed articles in systematic tables. Both article types likely include the most prominent studies of a research field. The most common and important search strategy, however, is a keyword search in electronic databases (Harari et al. 2020). This strategy will probably yield the largest number of relevant studies, particularly so-called ‘grey literature’, which may not be considered by literature reviews. Gusenbauer and Haddaway (2020) provide a detailed overview of 34 scientific databases, of which 18 are multidisciplinary or have a focus on management sciences, along with their suitability for literature synthesis. To prevent biased results due to the scope or journal coverage of one database, researchers should use at least two different databases (DeSimone et al. 2020; Martín-Martín et al. 2021; Mongeon & Paul-Hus 2016). However, a database search can easily lead to an overload of potentially relevant studies. For example, key term searches in Google Scholar for “entrepreneurial intention” and “firm diversification” resulted in more than 660,000 and 810,000 hits, respectively (see Footnote 1). Therefore, a precise research question and precise search terms using Boolean operators are advisable (Gusenbauer and Haddaway 2020). 
To address the challenge of identifying relevant articles among the growing number of database publications, (semi)automated approaches using text mining and machine learning (Bosco et al. 2017; O’Mara-Eves et al. 2015; Ouzzani et al. 2016; Thomas et al. 2017) may also become promising and time-saving search tools in the future. In addition, some electronic databases offer the possibility to track forward citations of influential studies and thereby identify further relevant articles. Finally, collecting unpublished or undetected studies through conferences, personal contact with (leading) scholars, or listservs can increase the study sample size (Grewal et al. 2018; Harari et al. 2020; Pigott and Polanin 2020).
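To illustrate what precise search terms with Boolean operators can look like, the following minimal Python sketch assembles a query string from groups of synonyms. The helper name `build_boolean_query` and the example terms are hypothetical and chosen only for illustration; the exact operator and phrase syntax differs between databases, so the resulting string would need to be adapted to each one.

```python
def build_boolean_query(concept_groups):
    """Join synonyms with OR inside each concept and join concepts with AND."""
    clauses = []
    for synonyms in concept_groups:
        # Quote multi-word terms so that they are searched as exact phrases.
        quoted = [f'"{term}"' if " " in term else term for term in synonyms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical concept groups for a narrowly defined research question.
query = build_boolean_query([
    ["entrepreneurial intention", "entrepreneurial intent"],
    ["self-efficacy", "perceived behavioral control"],
])
print(query)
```

Storing the concept groups together with the date and database of each search is one simple way to keep the search process reproducible and transparent.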

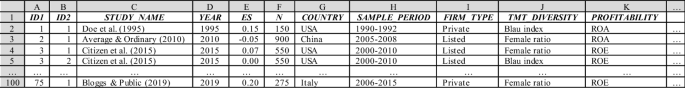

2.2.2 Study inclusion criteria and sample composition

Next, researchers must decide which studies to include in the meta-analysis. Some guidelines for literature reviews recommend limiting the sample to studies published in renowned academic journals to ensure the quality of findings (e.g., Kraus et al. 2020). For meta-analysis, however, Steel et al. (2021) advocate for the inclusion of all available studies, including grey literature, to prevent selection biases based on availability, cost, familiarity, and language (Rothstein et al. 2005), or the “Matthew effect”, which denotes the phenomenon that highly cited articles are found faster than less cited articles (Merton 1968). Harrison et al. (2017) find that the effects of published studies in management are inflated on average by 30% compared to unpublished studies. This so-called publication bias or “file drawer problem” (Rosenthal 1979) results from the tendency in academia to publish statistically significant results more readily than statistically insignificant ones. Owen and Li (2020) showed that publication bias is particularly severe when variables of interest are used as key variables rather than control variables. To estimate the true effect size of a target variable or relationship, the inclusion of all types of research outputs is therefore recommended (Polanin et al. 2016). Different test procedures to identify publication bias are discussed subsequently in Step 7.

In addition to the decision of whether to include certain study types (i.e., published vs. unpublished studies), there can be other reasons to exclude studies that are identified in the search process. These reasons can be manifold and are primarily related to the specific research question and methodological peculiarities. For example, studies identified by keyword search might not qualify thematically after all, may use unsuitable variable measurements, or may not report usable effect sizes. Furthermore, there might be multiple studies by the same authors using similar datasets. If they do not differ sufficiently in terms of their sample characteristics or variables used, only one of these studies should be included to prevent bias from duplicates (Wood 2008; see this article for a detection heuristic).

In general, the screening process should be conducted stepwise, beginning with a removal of duplicate citations from different databases, followed by abstract screening to exclude clearly unsuitable studies and a final full-text screening of the remaining articles (Pigott and Polanin 2020). A graphical tool to systematically document the sample selection process is the PRISMA flow diagram (Moher et al. 2009). Page et al. (2021) recently presented an updated version of the PRISMA statement, including an extended item checklist and flow diagram to report the study process and findings.
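The first screening step, removing duplicate citations retrieved from different databases, can be sketched in a few lines of Python. This is only an illustrative sketch under simple assumptions: records are plain dictionaries with a `title` and an optional `doi` field, the example records and DOIs are invented, and matching on a normalized title will miss duplicates whose titles differ more than in case or punctuation. The resulting counts correspond to the first boxes of a PRISMA-style flow diagram.

```python
import re


def normalize_title(title):
    """Lowercase a title and collapse punctuation so near-identical titles match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records):
    """Keep the first record for each DOI or normalized title."""
    seen, unique = set(), []
    for rec in records:
        keys = {"title:" + normalize_title(rec["title"])}
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            keys.add("doi:" + doi)
        if keys & seen:  # already seen under the same DOI or the same title
            continue
        seen |= keys
        unique.append(rec)
    return unique


# Invented example records exported from two hypothetical databases.
records = [
    {"title": "Founder Experience and Startup Survival", "doi": "10.1000/a1"},
    {"title": "Founder experience and startup survival.", "doi": None},
    {"title": "Diversification and Firm Performance", "doi": "10.1000/b2"},
]

unique = deduplicate(records)
flow = {
    "records identified": len(records),
    "duplicates removed": len(records) - len(unique),
    "records for abstract screening": len(unique),
}
print(flow)
```

Abstract and full-text screening remain manual judgment calls; recording the number of exclusions and the reason for each at every stage extends the same `flow` tally to the remaining boxes of the diagram.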

2.3 Step 3: choice of the effect size measure

2.3.1 Types of effect sizes

The two most common meta-analytical effect size measures in management studies are (z-transformed) correlation coefficients and standardized mean differences (Aguinis et al. 2011a; Geyskens et al. 2009). However, meta-analyses in management science and related fields may not be limited to those two effect size measures but rather depend on the subfield of investigation (Borenstein 2009; Stanley and Doucouliagos 2012). In economics and finance, researchers are more interested in the examination of elasticities and marginal effects extracted from regression models than in pure bivariate correlations (Stanley and Doucouliagos 2012). Regression coefficients can also be converted to partial correlation coefficients based on their t-statistics to make regression results comparable across studies (Stanley and Doucouliagos 2012). Although some meta-analyses in management research have combined bivariate and partial correlations in their study samples, Aloe (2015) and Combs et al. (2019) advise researchers not to use this practice. Most importantly, they argue that the magnitude of a partial correlation depends on the other variables included in the regression model and is therefore not comparable to bivariate correlations (Schmidt and Hunter 2015), resulting in a possible bias of the meta-analytic results (Roth et al. 2018). We endorse this opinion. If both measures are used at all, we recommend separate analyses for each measure. In addition to these measures, survival rates, risk ratios or odds ratios, which are common measures in medical research (Borenstein 2009), can be suitable effect sizes for specific management research questions, such as understanding the determinants of the survival of startup companies. To summarize, the choice of a suitable effect size is often not at the researcher's discretion because it typically depends on the investigated research question as well as the conventions of the specific research field (Cheung and Vijayakumar 2016).
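The two transformations mentioned above can be written down compactly. The sketch below uses the standard textbook formulas: a partial correlation is obtained from a regression coefficient's t-statistic and the residual degrees of freedom as r_p = t / sqrt(t^2 + df), and Fisher's z-transformation of a correlation is z = atanh(r). The function names and the numeric inputs are illustrative assumptions, not values from any primary study.

```python
import math


def partial_correlation_from_t(t_stat, df):
    """Partial correlation from a coefficient's t-statistic.

    df is the residual degrees of freedom of the regression model
    (observations minus the number of estimated parameters).
    """
    return t_stat / math.sqrt(t_stat ** 2 + df)


def fisher_z(r):
    """Fisher's z-transformation of a correlation coefficient."""
    return math.atanh(r)


def inverse_fisher_z(z):
    """Back-transform a Fisher z value to the correlation metric."""
    return math.tanh(z)


# Illustrative inputs only: t = 2.0 with 96 residual degrees of freedom.
r_partial = partial_correlation_from_t(2.0, 96)
print(round(r_partial, 3))
print(round(fisher_z(0.5), 4))
```

In line with the recommendation above, partial correlations computed this way would be analyzed separately from bivariate correlations rather than pooled with them.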

2.3.2 Conversion of effect sizes to a common measure

After the primary effect size measure for the meta-analysis has been defined, it might become necessary in the later coding process to convert study findings that are reported in an effect size metric different from the chosen primary effect size. For example, a study might report only descriptive statistics for two study groups but no correlation coefficient, which is used as the primary effect size measure in the meta-analysis. Different effect size measures can be harmonized using conversion formulae, which are provided by standard method books such as Borenstein et al. (2009) or Lipsey and Wilson (2001). Online effect size calculators for meta-analysis are also available (see Footnote 2).
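As a worked illustration of the example above, the following Python sketch first computes a standardized mean difference (Cohen's d) from two groups' descriptive statistics and then converts it to a correlation using the commonly cited conversion r = d / sqrt(d^2 + a), with a = (n1 + n2)^2 / (n1 * n2). The function names and the group statistics are hypothetical; other conversions (e.g., with small-sample corrections) exist and may be preferable in a given application.

```python
import math


def cohens_d(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Standardized mean difference based on the pooled standard deviation."""
    pooled_var = ((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2)
    return (mean_1 - mean_2) / math.sqrt(pooled_var)


def d_to_r(d, n_1, n_2):
    """Convert a standardized mean difference to a correlation coefficient."""
    # The correction factor a accounts for unequal group sizes (a = 4 if n_1 == n_2).
    a = (n_1 + n_2) ** 2 / (n_1 * n_2)
    return d / math.sqrt(d ** 2 + a)


# Hypothetical descriptive statistics reported by a primary study.
d = cohens_d(mean_1=10.0, sd_1=4.0, n_1=50, mean_2=8.0, sd_2=4.0, n_2=50)
r = d_to_r(d, n_1=50, n_2=50)
print(round(d, 3), round(r, 3))
```

Documenting which studies required a conversion, and which formula was used, keeps the coding process transparent and allows a later sensitivity check that excludes converted effect sizes.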

2.4 Step 4: choice of the analytical method used

Choosing which meta-analytical method to use is directly connected to the research question of the meta-analysis. Research questions in meta-analyses can address a relationship between constructs or an effect of an intervention in a general manner, or they can focus on moderating or mediating effects. There are four meta-analytical methods that are primarily used in contemporary management research (Combs et al. 2019; Geyer-Klingeberg et al. 2020), which allow the investigation of these different types of research questions: traditional univariate meta-analysis, meta-regression, meta-analytic structural equation modeling, and qualitative meta-analysis (Hoon 2013). While the first three are quantitative, the last summarizes qualitative findings. Table 1 summarizes the key characteristics of the three quantitative methods.

2.4.1 Univariate meta-analysis

In its traditional form, a meta-analysis reports a weighted mean effect size for the relationship or intervention under investigation and provides information on the magnitude of variance among primary studies (Aguinis et al. 2011c; Borenstein et al. 2009). Accordingly, it serves as a quantitative synthesis of a research field (Borenstein et al. 2009; Geyskens et al. 2009). Prominent traditional approaches have been developed, for example, by Hedges and Olkin (1985) or Hunter and Schmidt (1990, 2004). However, beyond this summary function, the traditional approach is limited in its ability to explain the observed variance among findings (Gonzalez-Mulé and Aguinis 2018). To identify moderators (or boundary conditions) of the relationship of interest, meta-analysts can create subgroups and investigate differences between those groups (Borenstein and Higgins 2013; Hunter and Schmidt 2004). Potential moderators can be study characteristics (e.g., whether a study is published vs. unpublished), sample characteristics (e.g., study country, industry focus, or type of survey/experiment participants), or measurement artifacts (e.g., different types of variable measurements). The univariate approach is thus suitable to identify the overall direction of a relationship and can serve as a good starting point for additional analyses. However, due to its limitations in examining boundary conditions and developing theory, the univariate approach on its own is now often viewed as insufficient (Rauch 2020; Shaw and Ertug 2017).
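A weighted mean effect size and a simple subgroup comparison can be sketched as follows. This is a minimal illustration that assumes a fixed-effect, inverse-variance weighting of Fisher-z-transformed correlations, in the spirit of the Hedges and Olkin tradition; it is not the Hunter and Schmidt procedure, it applies no artifact corrections, and it does not fit a random-effects model. The function name `pool_correlations` and the four studies are invented for illustration.

```python
import math


def pool_correlations(correlations, sample_sizes):
    """Inverse-variance weighted mean of Fisher-z correlations (fixed-effect)."""
    z_values = [math.atanh(r) for r in correlations]
    # The sampling variance of Fisher's z is 1 / (n - 3), so the weight is n - 3.
    weights = [n - 3 for n in sample_sizes]
    total_weight = sum(weights)
    z_mean = sum(w * z for w, z in zip(weights, z_values)) / total_weight
    se = math.sqrt(1.0 / total_weight)
    # Cochran's Q and I^2 describe how strongly the primary studies disagree.
    q = sum(w * (z - z_mean) ** 2 for w, z in zip(weights, z_values))
    df = len(z_values) - 1
    i_squared = max(0.0, (q - df) / q) if q > 0 else 0.0
    return {
        "k": len(z_values),
        "mean_r": math.tanh(z_mean),
        "ci_95": (math.tanh(z_mean - 1.96 * se), math.tanh(z_mean + 1.96 * se)),
        "Q": q,
        "I2": i_squared,
    }


# Invented primary studies: (correlation, sample size, published?)
studies = [(0.30, 120, True), (0.25, 80, True), (0.10, 150, False), (0.05, 60, False)]

overall = pool_correlations([r for r, _, _ in studies], [n for _, n, _ in studies])
published = pool_correlations(
    [r for r, _, pub in studies if pub], [n for _, n, pub in studies if pub]
)
unpublished = pool_correlations(
    [r for r, _, pub in studies if not pub], [n for _, n, pub in studies if not pub]
)
for label, result in [("all", overall), ("published", published), ("unpublished", unpublished)]:
    print(label, result["k"], round(result["mean_r"], 3), round(result["I2"], 2))
```

Comparing the pooled estimates of the published and unpublished subgroups mirrors the subgroup logic described above; with only a handful of studies per subgroup, such comparisons are descriptive at best.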

2.4.2 Meta-regression analysis